Stream Processing Trends Every Data Leader Should Know

Community Contribution

Table of contents

- Key Takeaways

- 2025 Stream Processing Landscape

- Batch & Stream Convergence

- AI Integration in Stream Processing

- Data Lakehouse & Data Fabric

- Real-Time Analytics & Monetization

- Data Streaming Platforms & Ecosystem

- Developer Experience in Stream Processing

- Edge Computing & Real-Time Data

- Security, Governance & Challenges

- FAQ

- What is stream processing?

- How does stream processing differ from batch processing?

- Why do businesses invest in real-time analytics?

- Which industries benefit most from stream processing?

- What challenges do organizations face with stream processing adoption?

- How does AI enhance stream processing?

- What security measures protect real-time data streams?

Stream processing drives critical decisions for leading organizations in 2025. Companies deploy real-time analytics to power everything from fraud detection in finance to predictive maintenance in manufacturing. North America leads the market, with large enterprises using real-time data and artificial intelligence to optimize operations and deliver hyper-personalized experiences. Data leaders see trends like event-driven architectures and adaptive analytics transforming healthcare, retail, and logistics. Real-time processing enables instant insights, fueling innovation and measurable ROI. This blog shares actionable strategies and industry examples to help data leaders unlock new value through stream processing.

- Manufacturing reduces downtime with sensor data and predictive analytics.
- Finance enhances security using behavioral analytics and real-time data streams.
- Healthcare improves clinical decisions with dynamic analytics models.

Key Takeaways

- Real-time data processing enables instant decisions that improve customer experiences, reduce costs, and boost operational efficiency across industries.
- Unified architectures combine batch and stream processing, simplifying data workflows and increasing flexibility for diverse analytics needs.
- AI-powered stream processing delivers predictive and prescriptive insights, helping organizations anticipate trends and automate smart actions.
- Zero-ETL and simplified pipelines reduce complexity, speed up data availability, and lower engineering effort for faster insights.
- Scalable data lakehouse platforms and seamless integration support growing real-time data volumes while maintaining data accuracy and governance.
- Stream processing platforms like Apache Kafka and Flink provide reliable, low-latency data handling essential for modern business applications.
- Edge computing processes data near its source, cutting latency and enhancing privacy for critical use cases like autonomous vehicles and healthcare.
- Strong security, governance, and compliance practices protect sensitive real-time data and build trust with customers and partners.

2025 Stream Processing Landscape

Why Real-Time Data Matters

The data streaming landscape in 2025 and beyond features rapid advancements in stream processing systems, real-time analytics, and unified architectures. Organizations rely on real-time data streams to drive immediate decisions and maintain competitiveness. Real-time processing eliminates delays, allowing companies to respond instantly to market shifts and operational changes. Real-time data processing supports evolving use cases such as fraud detection, predictive maintenance, and dynamic pricing.

Speed and efficiency define the value of real-time analytics. Companies use real-time data to personalize customer experiences, optimize supply chains, and prevent equipment failures. For example, e-commerce platforms recommend products in real time, boosting sales and customer satisfaction. Logistics firms adjust delivery routes using live traffic data, reducing delays and improving service quality.

| Industry | Real-Time Data Application | Benefits for Decision-Making in 2025 |
| --- | --- | --- |
| Finance | Fraud detection, market monitoring, trader support | Enables instant risk mitigation and informed investment decisions |
| Healthcare | Patient vital sign monitoring, AI trend analysis | Allows timely interventions and predictive health management |
| E-Commerce | Consumer behavior tracking, inventory management | Supports dynamic marketing and supply chain adjustments |
| Manufacturing | Equipment maintenance alerts | Prevents breakdowns through proactive maintenance scheduling |
| Supply Chain | Shipment tracking and delay management | Enhances operational efficiency and customer communication |

Real-time data streams empower organizations to make faster, more accurate, and proactive decisions. The data streaming landscape continues to evolve, with stream processing systems at the core of business transformation.

Drivers of Change

Several market trends accelerate the adoption of stream processing systems and data streaming technologies. The proliferation of IoT devices generates continuous event streaming, making real-time stream processing essential for smart homes, industrial automation, and healthcare wearables. Enterprises shift from traditional platforms to cloud-native streaming solutions, enhancing scalability and accessibility.

- 74% of IT leaders report that streaming data enhances customer experiences.
- 73% say it enables faster decision-making.
- Enterprise-ready streaming solutions such as Google Dataflow, Redpanda, Confluent, and DeltaStream reduce complexity and operational overhead.
- Use cases include dynamic pricing, personalized recommendations, real-time ad bidding, and in-game activity tailoring.

The landscape features integration of stream processing systems with data lake technologies like Apache Iceberg, enabling unified data ingestion and incremental computation. AI-driven features, including real-time AI and large language model integration, enhance analytics capabilities. Architectural shifts toward storage-compute separation using S3 as a primary storage layer improve scalability and cost efficiency. Stream processing systems now consolidate data ingestion, stateful processing, and serving layers into unified platforms, simplifying architecture and reducing latency.

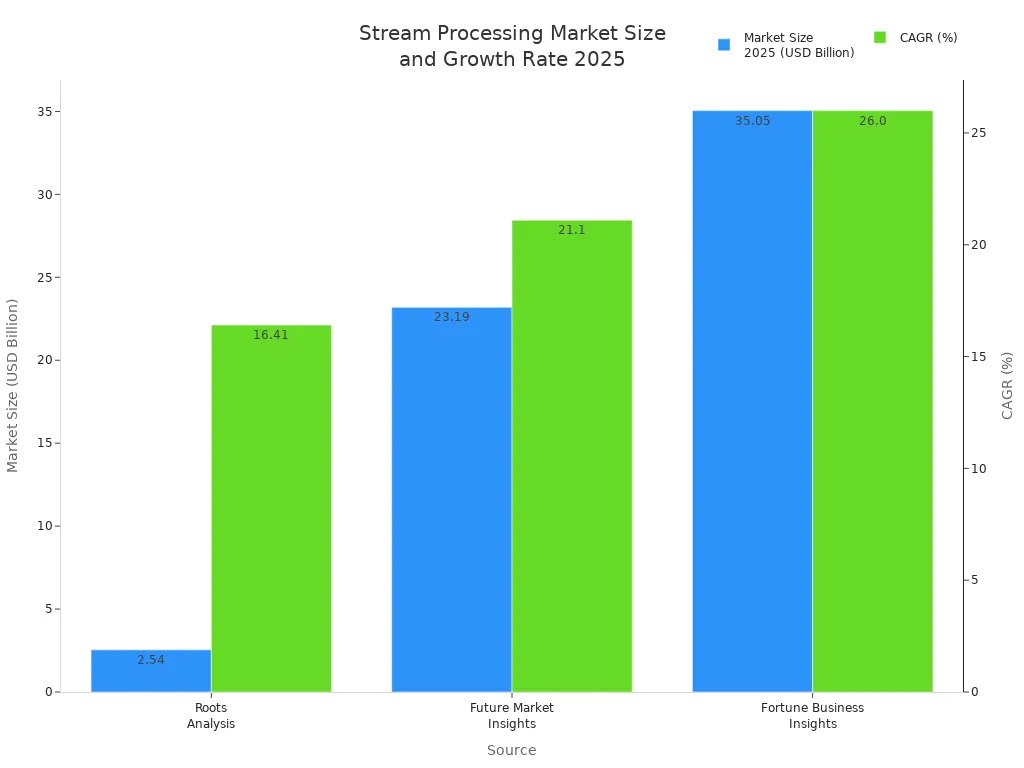

| Source | Market Segment | Market Size in 2025 (USD Billion) | CAGR (%) |
| --- | --- | --- | --- |
| Roots Analysis | Event Stream Processing | 2.54 | 16.41 (through 2035) |
| Future Market Insights | Streaming Analytics | 23.19 | 21.1 (2025-2035) |
| Fortune Business Insights | Streaming Analytics | 35.05 | 26.0 (2025-2032) |

The data streaming landscape grows rapidly, fueled by demand for real-time data processing, AI-driven insights, IoT analytics, and integration with cloud and edge computing technologies.

Business Impact

Stream processing systems deliver measurable business impact across industries. Adobe uses AI-driven real-time personalization to tailor marketing content, increasing conversion rates and marketing ROI. Patagonia applies analytics to optimize its supply chain for sustainability, reducing emissions and strengthening customer loyalty. Siemens integrates IoT sensors and AI analytics in manufacturing, enhancing energy efficiency and lowering operational costs.

| Industry | Organization | Data Analytics Strategy/Implementation | Key Business Impact/Outcome |
| --- | --- | --- | --- |
| Retail | Walmart | Predictive analytics integrating sales, weather, and consumer behavior | Reduced excess inventory and minimized stockouts |
| Healthcare | Johns Hopkins Hospital | Predictive modeling on 200+ electronic health record variables | 10% reduction in patient readmissions, cost savings, personalized care |
| Financial Services | Multiple Banks | Machine learning for credit scoring, fraud detection, personalized services | Enhanced risk assessment, real-time fraud prevention, tailored services |

Stream processing systems enable organizations to reduce operational costs, improve customer experiences, and drive innovation. The data streaming landscape in 2025 and beyond supports scalable, AI-enabled, and architecturally simplified solutions for real-time, data-intensive applications. Data leaders who embrace these data streaming trends position their organizations for sustained growth and competitive advantage.

Batch & Stream Convergence

Unified Architectures

Organizations in 2025 demand seamless integration between batch and streaming workloads. Modern unified architectures now leverage advanced lake storage layers such as Apache Paimon, which supports both streaming and batch reads and writes. These systems offer ACID transactions, time travel, and schema evolution, making them ideal for scalable and consistent data processing. Apache Flink 1.20 introduced the Materialized Table feature, allowing the engine to automatically determine whether a job is batch, incremental, or streaming. This innovation simplifies development and enables efficient data ingestion for diverse use cases.

| Component | Example(s) | Description |
| --- | --- | --- |
| Compute Engine | Apache Flink | Treats batch processing as a special case of stream processing; supports unified codebase for batch and streaming jobs; native streaming with strong semantics and performance improvements. |
| Compute Engine | Apache Spark | Early unified engine using micro-batching to emulate streaming; limited real-time semantics; continuous processing still experimental. |
| Storage Format | Apache Paimon | Lake format designed for unified batch and stream processing; supports streaming and batch reads/writes; integrates with Flink and Spark; low latency streaming lake storage. |
| Storage Format | Apache Hudi | Supports multiple engines; enables batch and streaming reads/writes; integrated with Flink for end-to-end latencies around ten minutes. |
| Storage Format | Apache Iceberg | Supports batch and streaming writes with Flink APIs; recommended for hourly data updates; integrated with Flink since 2020. |

Unified architectures empower organizations to process data ingestion and analytics workloads with greater flexibility, reliability, and speed.
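The idea that batch is a special case of streaming can be illustrated with a minimal Python sketch. It is not Flink or Paimon code; the function name `running_totals` and the sample events are assumptions made for illustration. The same operator consumes a bounded list (batch) and an unbounded generator (stream) without modification.

```python
from typing import Iterable, Iterator, Tuple
import itertools

def running_totals(events: Iterable[Tuple[str, float]]) -> Iterator[Tuple[str, float]]:
    """Emit an updated per-key total after every event (works on any iterable)."""
    totals = {}
    for key, amount in events:
        totals[key] = totals.get(key, 0.0) + amount
        yield key, totals[key]

# Bounded input ("batch"): consume everything and keep the final value per key.
batch = [("a", 1.0), ("b", 2.0), ("a", 3.0)]
batch_result = dict(running_totals(batch))

# Unbounded input ("stream"): the same function, with results read incrementally.
def endless_source() -> Iterator[Tuple[str, float]]:
    for i in itertools.count():
        yield ("a" if i % 2 == 0 else "b", 1.0)

first_four = list(itertools.islice(running_totals(endless_source()), 4))
```

The only difference between the two runs is whether the input ends, which is the property unified engines exploit to share one codebase across both modes.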

Zero-ETL Approaches

Zero-ETL approaches are transforming how organizations manage streaming and batch data. These methods eliminate the need for traditional ETL pipelines, allowing direct data loading into warehouses or lakes without upfront transformation. Companies experience infrastructure cost reduction by removing staging and transformation servers. Operational efficiency improves as maintenance becomes simpler and less specialized expertise is required. Time-to-value metrics see dramatic improvements, enabling faster data availability and decision-making.

- Enables direct data loading, reducing preparation time.
- Uses schema-on-read to increase flexibility and agility.
- Runs transformations on demand, accelerating insights.
- Supports incremental updates for real-time analytics.
- Allows real-time data transformation through automation and orchestration, improving integration efficiency.
- Reduces latency, enabling AI systems to access live data for use cases like fraud detection and product recommendations.
- Removes complex ETL pipelines, lowering engineering effort and operational complexity.
- Supports scalable architectures by removing brittle pipelines.
- Avoids data replication, reducing data sprawl and supporting compliance.

Zero-ETL approaches complement traditional ETL, especially for complex transformations and data quality checks. Organizations benefit from improved customer response, reduced operational issues, and enhanced competitive positioning.
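Schema-on-read, one of the ideas behind zero-ETL, can be sketched in a few lines of Python. This is a simplified illustration rather than any vendor's implementation; the raw events, the `read_with_schema` helper, and the two view definitions are hypothetical. Raw records are stored untouched, and each consumer applies its own schema at query time.

```python
import json

# Raw events are loaded as-is, with no upfront transformation.
RAW_EVENTS = [
    '{"user": "u1", "amount": "19.90", "currency": "USD"}',
    '{"user": "u2", "amount": 5, "coupon": "SPRING"}',
]

def read_with_schema(raw_lines, schema):
    """Apply a schema (field -> (caster, default)) when the data is read."""
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: caster(record[field]) if field in record else default
            for field, (caster, default) in schema.items()
        }

# Two consumers project different views from the same raw data.
payments_view = {"user": (str, None), "amount": (float, 0.0)}
marketing_view = {"user": (str, None), "coupon": (str, "none")}

payments = list(read_with_schema(RAW_EVENTS, payments_view))
marketing = list(read_with_schema(RAW_EVENTS, marketing_view))
```

Because casting and defaults happen on read, a new field such as `coupon` becomes usable without reprocessing stored data, though validation that a classic ETL step would perform has to happen somewhere else.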

Simplified Pipelines

Simplified pipelines play a crucial role in stream processing systems by improving speed and reliability. Well-designed pipelines support scalability, enabling efficient processing of large data volumes. Features such as monitoring, rerun capabilities, and checkpointing allow error detection and recovery, enhancing reliability. Streamlined pipelines facilitate faster data movement and transformation, supporting rapid decision-making.

Robust pipelines improve data quality, reliability, and consistency through data cleaning and validation steps.

Higher quality data supports more accurate insights and evidence-based decisions.

Pipelines integrate data from multiple sources, enabling unified views and easier cross-referencing.

By transforming only necessary data, pipelines streamline processing and support faster consumption.
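Checkpointing and rerun capabilities, mentioned above as reliability features, can be sketched as follows. This is a toy model under stated assumptions: the checkpoint is an in-memory dict (a real pipeline would persist it durably), and `flaky_handler` is a hypothetical handler that fails once on one record.

```python
def process_with_checkpoint(records, checkpoint, handler):
    """Resume from checkpoint['offset'] and advance it after each success."""
    for offset in range(checkpoint["offset"], len(records)):
        handler(records[offset])
        checkpoint["offset"] = offset + 1  # would be written to durable storage

records = ["r0", "r1", "bad", "r3"]
checkpoint = {"offset": 0}
seen = []

def flaky_handler(record):
    # Simulate a transient failure the first time "bad" is processed.
    if record == "bad" and "bad" not in flaky_handler.retried:
        flaky_handler.retried.add("bad")
        raise RuntimeError("transient failure")
    seen.append(record)

flaky_handler.retried = set()

try:
    process_with_checkpoint(records, checkpoint, flaky_handler)
except RuntimeError:
    pass  # the first run stops at "bad"; the checkpoint still holds offset 2

process_with_checkpoint(records, checkpoint, flaky_handler)  # rerun resumes
```

The rerun starts at the failed record instead of the beginning, so earlier records are not reprocessed. If a handler has side effects before it fails, the record may be partially applied, which is why idempotent handlers are usually paired with this pattern.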

A standardized analytics pipeline enabled rapid development and validation of prediction models for COVID-19 mortality across international databases. The study was completed within weeks, suggesting that reliable and transparent pipelines support fast yet robust data processing. External validation showed consistent model performance, indicating improved reliability. Open-source tools and standardized approaches facilitated reproducibility and minimized bias, which supports the case that simplified pipelines can enhance both speed and reliability in stream processing systems.

AI Integration in Stream Processing

Predictive & Prescriptive Analytics

Organizations now harness stream processing to deliver predictive and prescriptive analytics at scale. Predictive analytics leverages both historical and real-time data to forecast trends and behaviors, enabling teams to anticipate changes before they occur. Prescriptive analytics builds on these forecasts by recommending optimal actions in real time. This approach empowers companies to optimize resources, manage risks, and personalize customer experiences.

Predictive analytics identifies patterns and signals in live data streams, supporting use cases such as fraud detection and dynamic pricing.

Prescriptive analytics answers, “What should we do next?” by combining forecasting with optimization, simulations, and business rules.

Real-time analytics platforms, including Apache Kafka and Apache Storm, enable continuous data stream processing for immediate insights.

For example, a retailer can recommend pricing strategies based on current demand, while a logistics provider can optimize delivery routes by analyzing live traffic data. These capabilities allow organizations to automate decision-making, enhance efficiency, and maintain a strategic advantage.

Prescriptive analytics enables dynamic adaptation to evolving data streams, ensuring that organizations remain agile and responsive in fast-changing environments.
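The relationship between the two kinds of analytics can be sketched with a small example: a forecast (predictive) feeds a rule that recommends an action (prescriptive). The smoothing method, the thresholds, and the names `forecast_demand` and `recommend_price` are illustrative assumptions, not a recommended pricing policy.

```python
def forecast_demand(observations, alpha=0.5):
    """Simple exponential smoothing; returns the next-step forecast."""
    level = observations[0]
    for value in observations[1:]:
        level = alpha * value + (1 - alpha) * level
    return level

def recommend_price(base_price, forecast, capacity):
    """Hypothetical business rule: scale price with expected utilization."""
    utilization = forecast / capacity
    if utilization > 0.9:
        return round(base_price * 1.10, 2)
    if utilization < 0.5:
        return round(base_price * 0.90, 2)
    return base_price

# Predictive step: forecast demand from recent observations.
demand = forecast_demand([80, 100, 120])
# Prescriptive step: turn the forecast into a recommended action.
price = recommend_price(base_price=20.0, forecast=demand, capacity=110)
```

Here the forecast is 105 units against a capacity of 110, so the rule recommends raising the price from 20.0 to 22.0. In a streaming setting, both steps would rerun as each new observation arrives.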

Continuous Learning Models

Continuous learning models represent a significant advancement in AI-powered stream processing. These models adapt to new data as it arrives, updating their predictions and recommendations without manual intervention. This capability ensures that analytics remain accurate and relevant, even as market conditions shift.

Continuous learning supports applications where data patterns evolve rapidly, such as financial trading or personalized marketing.

Models ingest streaming data, retrain on the fly, and deploy updated versions in production environments.

This approach reduces model drift and maintains high performance over time.

Artificial intelligence now plays a central role in enabling these adaptive systems. By integrating continuous learning into stream processing, organizations can respond to emerging trends and anomalies with unprecedented speed.
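A minimal sketch of continuous learning is an online model that updates its parameters one observation at a time. The example below uses plain stochastic gradient descent on a one-feature linear model; the class name `OnlineLinearModel`, the learning rate, and the simulated stream (including the mid-stream change in the underlying relationship) are all assumptions for illustration.

```python
class OnlineLinearModel:
    """y ~ w * x + b, updated one observation at a time with SGD."""

    def __init__(self, learning_rate=0.1):
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def learn_one(self, x, y):
        error = self.predict(x) - y
        self.w -= self.learning_rate * error * x
        self.b -= self.learning_rate * error

model = OnlineLinearModel()

# Phase 1: the stream follows y = 2x + 1.
for step in range(5000):
    x = (step % 10) / 10.0
    model.learn_one(x, 2 * x + 1)
before_drift = (model.w, model.b)

# Phase 2: the relationship drifts to y = -x + 3; the model keeps learning.
for step in range(5000):
    x = (step % 10) / 10.0
    model.learn_one(x, -x + 3)
after_drift = (model.w, model.b)
```

No retraining job is triggered between the two phases. The parameters simply follow the data, which is the behavior that limits model drift. Production systems typically add safeguards such as validation gates before an updated model serves traffic.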

Real-Time AI Inference

Real-time AI inference transforms how businesses extract value from data streams. Stream processing systems must deliver low-latency, high-throughput inference to support interactive and safety-critical applications. Performance benchmarks for real-time AI inference focus on several key metrics:

| Benchmark Category | Key Metrics & Considerations | Relevance to Real-Time AI Inference |
| --- | --- | --- |
| Latency & Tail Latency | Mean, p95, p99 latency | Ensures responsiveness in robotics and autonomous driving |
| Throughput & Batch Efficiency | Queries per second, batch efficiency | Balances low-latency and high-throughput requirements |
| Numerical Precision Trade-offs | FP32, FP16, INT8 accuracy vs. speed | Optimizes speed and power while managing accuracy |
| Memory Footprint | Model size, RAM usage | Critical for edge and mobile deployments |
| Cold-Start & Model Load Time | Model load and first inference latency | Important for serverless and on-demand scenarios |
| Scalability | Performance under load, multi-model serving | Measures robustness in cloud and edge environments |
| Power & Energy Efficiency | Power consumption, QPS/Watt | Essential for sustainable large-scale deployments |

These benchmarks, standardized by suites like MLPerf Inference, help organizations balance latency, throughput, accuracy, and energy efficiency. Stream processing platforms that meet these criteria enable real-time AI applications in sectors such as healthcare, manufacturing, and autonomous systems.
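The latency metrics in the table (mean, p95, p99) can be computed with a short script. This is a simplified sketch, not the MLPerf methodology: `measure_latencies` times any Python callable, and `summarize` uses the nearest-rank percentile definition, which is one of several common conventions.

```python
import statistics
import time

def measure_latencies(infer, inputs):
    """Return per-request latency in milliseconds for a callable model."""
    latencies = []
    for item in inputs:
        start = time.perf_counter()
        infer(item)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies

def summarize(latencies_ms):
    """Mean, p95, and p99 using the nearest-rank method on sorted samples."""
    ordered = sorted(latencies_ms)

    def pct(p):
        rank = max(1, -(-p * len(ordered) // 100))  # ceil(p * n / 100)
        return ordered[rank - 1]

    return {"mean": statistics.fmean(ordered), "p95": pct(95), "p99": pct(99)}

# Synthetic latencies of 1..100 ms make the percentiles easy to verify.
summary = summarize(list(range(1, 101)))
```

For the synthetic sample, the mean is 50.5 ms while p95 and p99 are 95 ms and 99 ms. The gap shows why tail latency is reported separately from the average for interactive and safety-critical workloads.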

Data Lakehouse & Data Fabric

Scalable Real-Time Data Streams

Modern organizations rely on scalable architectures to manage the explosive growth of real-time data streams. Data lakehouse platforms such as Microsoft Fabric support streaming ingestion from sources like Event Hubs, IoT Hubs, and Kafka, enabling near real-time data capture. The Real-Time Analytics store, designed for raw telemetry, emphasizes fast ingestion and query performance. OneLake provides a unified storage layer, allowing users to query data without physical duplication, which improves scalability and reduces operational overhead.

Key scalability features include:

- Streaming ingestion pipelines with over 200 native connectors for flexible data movement.
- Data retention policies and archiving strategies that prevent uncontrolled data growth and cost overruns.
- Multi-cloud storage support with Delta Lake format, ACID transactions, and schema evolution for consistent data management.
- Query optimizations such as indexing, caching, and predicate pushdown to reduce latency and improve throughput.

Kafka and Apache Flink integration enables scalable, low-latency pipelines capable of handling gigabytes per second. These platforms support event time processing, state management, and replay of historical data, making them suitable for operational real-time use cases. Organizations measure scalability using throughput, latency, and retention metrics, ensuring that their data lakehouse can support both analytical and operational workloads.

Seamless Integration

Seamless integration stands at the core of the streaming data fabric approach. Every lakehouse in Microsoft Fabric automatically includes a SQL analytics endpoint, which acts as a lightweight data warehousing layer. This endpoint enables high-performance, low-latency SQL queries across both lakehouse and warehouse data. Automatic metadata discovery keeps SQL metadata current, supporting real-time data availability without manual intervention.

Integration features include:

- Incremental loading pipelines that process only new or updated files, reducing computing load and ensuring data freshness.
- Direct Query connections that allow users to access current data without duplication, facilitating real-time analytics.
- Real-time synchronization between sources, maintaining data integrity and preventing discrepancies.
- Unified logical data lake storage, centralizing data and enabling cross-database queries.

This architecture simplifies traditional batch and streaming patterns, supporting additive analytics scenarios with immediate access to consolidated datasets. Built-in governance and security features ensure compliance while breaking down data silos.

Seamless integration enables organizations to maintain a single source of truth, improve reporting accuracy, and simplify data management.
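Incremental loading, which processes only new or updated files, is commonly built around a high-water mark. The sketch below is a generic illustration and not Microsoft Fabric's implementation; the `incremental_load` helper and the file names and timestamps are hypothetical.

```python
def incremental_load(files, watermark):
    """Return files modified after `watermark` plus the advanced watermark.

    `files` maps a path to its last-modified timestamp (seconds).
    """
    new_files = sorted(path for path, mtime in files.items() if mtime > watermark)
    new_watermark = max([watermark] + [files[p] for p in new_files])
    return new_files, new_watermark

files = {"a.parquet": 100, "b.parquet": 200}
batch1, wm = incremental_load(files, watermark=0)

files["c.parquet"] = 300  # a new file arrives
files["a.parquet"] = 350  # an existing file is updated
batch2, wm = incremental_load(files, wm)
```

The second run picks up only `a.parquet` and `c.parquet`, leaving the unchanged `b.parquet` alone. A timestamp watermark assumes modification times are reliable and monotonic, so some systems track file listings or change logs instead.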

Unified Analytics

Unified analytics platforms address critical challenges in modern data management. They handle diverse data formats and sources, manage both fast and slow data streams, and process large volumes efficiently. Centralized infrastructure reduces duplication and maintains a single source of truth, which enhances operational efficiency and lowers costs.

| Challenge | Unified Analytics Solution | Business Value |
| --- | --- | --- |
| Variety | Supports structured and unstructured data | Broader insights, flexible analysis |
| Velocity | Real-time and batch processing | Timely decisions, agile response |
| Volume | Scalable storage and compute | Handles growth, bursty workloads |
| Visibility | Data lineage and version control | Trustworthy analysis, compliance |
| Veracity | Data accuracy and integrity | Confident decision-making |
| Vulnerability | Security and governance | Protects data, ensures compliance |
| Value | Actionable insights, product innovation | Competitive advantage, ROI |

Unified Data Analytics Platforms (UDAPs) unify fragmented workflows, simplify governance, and improve accessibility for technical and non-technical users. These platforms enable instant access to insights, supporting agile decision-making in fast-changing markets. Real-world applications show measurable business value in manufacturing, financial services, retail, and healthcare. The scalability and open architecture of unified analytics future-proof data strategies, aligning technology investments with long-term objectives.

Real-Time Analytics & Monetization

Revenue from Real-Time Data

Organizations in 2025 unlock new revenue streams by leveraging real-time analytics. They build and sell data products, such as dashboards, reports, and predictive models, to external clients. Many companies use real-time processing to improve internal decision-making, reduce customer churn, and personalize marketing efforts. These strategies increase sales and boost customer lifetime value.

| Monetization Strategy | Description | Example / Notes |
| --- | --- | --- |
| Build and Sell Data Products | Create dashboards, reports, or predictive models for sale or licensing. | SaaS vendors package anonymized usage data into trend reports, monetized via subscriptions or fees. |
| Use Data Internally to Drive Profit | Optimize decisions, reduce churn, and personalize marketing using analytics. | E-commerce platforms send personalized offers based on browsing data, increasing sales. |
| Offer Data-as-a-Service (DaaS) | Provide real-time data access via APIs or marketplaces to partners. | Logistics firms share traffic data with other transport services, generating recurring revenue. |
| Create Strategic Data Partnerships | Exchange insights with trusted partners for mutual benefit. | Airlines share passenger demand data with hotels, enabling co-created products. |
| Embed Data in AI-Powered Tools | Integrate data into AI tools for intelligent features. | CRM platforms offer AI-driven sales forecasting, monetized via licensing or pay-per-use. |
| Monetize Engagement Data | Personalize content and improve retention using user behavior data. | Media platforms recommend content based on watch history, increasing engagement. |
| License or Sell Anonymized Aggregate Data | License large datasets to third parties, ensuring privacy compliance. | Fintech firms sell anonymized behavioral data to research organizations. |

Companies often adopt a phased monetization approach. They onboard customers with analytics features, gather feedback, and optimize offerings. After launching analytics to MVP clients, they expand deployment to the full user base, often with self-service options. Freemium models attract users with basic analytics, then monetize advanced features like predictive modeling.

Data Products & Marketplaces

Real-time analytics platforms enable the creation of innovative data products and marketplaces. FanDuel, a leading sports betting platform, uses real-time data to optimize marketing campaigns, personalize user journeys, and detect fraud. The Hotels Network leverages clickstream data to deliver personalized booking offers and competitive benchmarking, improving conversion rates for hoteliers.

Developers use platforms like Tinybird to integrate data streaming, real-time databases, and API layers. These tools help build and maintain real-time data products efficiently. Data marketplaces allow organizations to offer Data-as-a-Service, providing external partners with access to valuable real-time data streams. Strategic partnerships and embedded analytics add value for both providers and consumers in the market.

Data products built on real-time analytics drive innovation, improve customer experiences, and create new business models.

Privacy & Compliance

Privacy and compliance present significant challenges for organizations monetizing real-time data. Risks include data breaches, reputational damage, and legal penalties. Companies must implement privacy-enhancing technologies such as anonymization, tokenization, and homomorphic encryption. Zero-trust security architectures and regular audits help protect sensitive information.
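One way to approximate tokenization on an event stream is to replace sensitive fields with a keyed hash before the event leaves the trusted boundary. The sketch below is closer to pseudonymization than to vault-based tokenization, and it is only an illustration: the key, the field names, and the helpers `tokenize` and `protect` are assumptions.

```python
import hashlib
import hmac

# Assumption: a real key would come from a secrets manager and be rotated.
SECRET_KEY = b"demo-key-rotate-me"

def tokenize(value: str, key: bytes = SECRET_KEY) -> str:
    """Deterministic keyed token: the same input always maps to the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def protect(event: dict, sensitive_fields=("email", "card_number")) -> dict:
    """Replace sensitive string fields with tokens; leave other fields as-is."""
    return {k: tokenize(v) if k in sensitive_fields else v for k, v in event.items()}

event = {"email": "ana@example.com", "card_number": "4111111111111111", "amount": 42.5}
safe_event = protect(event)
```

Because the token is deterministic, downstream consumers can still join or count by customer without seeing the raw identifier. Whether this satisfies a given regulation depends on key management and on what other fields remain in the event.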

Stricter regulations like GDPR, CCPA, and HIPAA impose heavy fines for non-compliance. Organizations face technical challenges integrating legacy systems with modern monetization strategies. Consent-based models ensure customers control their data, supporting regulatory compliance. Alternative ID frameworks, such as UID2 and RampID, enable privacy-compliant monetization, especially in advertising.

Many organizations fear reputational damage and loss of business partnerships more than regulatory fines. General Motors OnStar faced backlash for selling driving data without transparency, while ADP successfully monetizes anonymized payroll data with full compliance and customer trust. Companies must update contracts and implement safeguards to prevent unauthorized data transfers, especially with new export controls.

Success in real-time data monetization depends on prioritizing compliance, cybersecurity, and data integrity. Agile strategies and continuous review help organizations adapt to evolving regulations and market demands.

Data Streaming Platforms & Ecosystem

Apache Kafka & Alternatives

Organizations rely on Apache Kafka as a foundational technology for event streaming and real-time analytics. Kafka supports high-throughput, fault-tolerant data streaming, making it a preferred choice for many stream processing systems. Companies use Kafka to ingest, buffer, and distribute millions of events per second across diverse platforms. Kafka’s ecosystem includes connectors, schema registries, and stream processing engines, which simplify integration with existing infrastructure.
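The ingest, buffer, and distribute roles described above follow from a partitioned, append-only log with per-consumer offsets. The toy model below is not Kafka's API or its partitioner; `ToyLog`, the CRC32-based key hashing, and the group names are assumptions chosen to keep the sketch self-contained.

```python
import zlib
from collections import defaultdict

class ToyLog:
    """In-memory stand-in for a partitioned, append-only event log."""

    def __init__(self, partitions=3):
        self.partitions = [[] for _ in range(partitions)]
        self.offsets = defaultdict(int)  # (group, partition) -> next offset

    def produce(self, key: str, value):
        # Stable hash so one key always lands in the same partition.
        index = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[index].append((key, value))
        return index

    def consume(self, group: str, partition: int, max_records=10):
        # Each consumer group tracks its own position in each partition.
        start = self.offsets[(group, partition)]
        records = self.partitions[partition][start:start + max_records]
        self.offsets[(group, partition)] = start + len(records)
        return records

log = ToyLog()
p = log.produce("sensor-1", 20.5)
log.produce("sensor-1", 21.0)

first_read = log.consume("analytics", p)   # both events, in order
second_read = log.consume("analytics", p)  # nothing new for this group
other_group = log.consume("alerts", p)     # an independent group reads from the start
```

Records stay in the log after being read, so multiple groups can consume the same events at their own pace. This is the property that lets one stream feed analytics, alerting, and storage simultaneously.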

Alternatives to Kafka have emerged to address specific needs. Redpanda offers a Kafka-compatible API with lower latency and simplified operations. Pulsar provides multi-tenancy and geo-replication, which benefit global enterprises. Kinesis and Azure Event Hubs deliver fully managed cloud services, reducing operational overhead for teams. These platforms enable seamless event streaming and support a wide range of streaming use cases, from IoT telemetry to financial transactions.

Data streaming platforms must deliver reliability, scalability, and flexibility to support modern business requirements.

| Platform | Key Features | Best Use Cases |
| --- | --- | --- |
| Apache Kafka | High throughput, durability | Real-time analytics, event streaming |
| Redpanda | Low latency, Kafka API | Performance-critical workloads |
| Apache Pulsar | Multi-tenancy, geo-replication | Global data streaming |
| AWS Kinesis | Managed service, easy scaling | Cloud-native streaming |
| Azure Event Hubs | Integration, managed service | Enterprise cloud streaming |

Apache Flink Adoption

Apache Flink has become a leading stream processing engine for organizations seeking advanced real-time analytics. Flink’s architecture supports true stream processing, enabling continuous, low-latency data handling. Companies benefit from stateful stream processing, which maintains state across events for complex operations. Event-time processing ensures accurate handling of delayed or out-of-order data, which is critical for financial and IoT applications.

Flink 2.0 introduces disaggregated state management, improving resource efficiency in cloud-native environments. Materialized tables abstract stream and batch complexities, simplifying development. Deep integration with Apache Paimon strengthens streaming lakehouse architectures, making Flink ideal for AI-driven workflows. Flink’s unified APIs support both batch and streaming, providing flexibility for diverse analytics needs.
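Event-time processing with out-of-order data can be sketched in plain Python. This is a simplified illustration of tumbling windows and a watermark, not Flink's API: the function `tumbling_counts`, the window size, and the allowed lateness are assumptions, and the watermark is taken as the largest timestamp seen minus the allowed lateness.

```python
from collections import defaultdict

def tumbling_counts(events, window_size, allowed_lateness):
    """Count events per event-time window; emit a window once the watermark passes it.

    `events` is an iterable of event timestamps in arrival order.
    """
    counts = defaultdict(int)  # window start -> running count (the operator state)
    emitted = []
    closed = set()
    max_ts = float("-inf")
    for ts in events:
        window_start = ts - ts % window_size
        if window_start in closed:
            continue  # too late: its window was already emitted
        counts[window_start] += 1
        max_ts = max(max_ts, ts)
        watermark = max_ts - allowed_lateness
        for start in sorted(counts):
            if start + window_size <= watermark:
                emitted.append((start, counts.pop(start)))
                closed.add(start)
    return emitted, dict(counts)

# Timestamp 3 arrives after 12 (out of order) and 8 arrives after 27 (too late).
arrivals = [1, 4, 12, 3, 27, 8]
emitted, pending = tumbling_counts(arrivals, window_size=10, allowed_lateness=5)
```

The event at timestamp 3 is still counted in window [0, 10) because the watermark had not passed that window when it arrived, while the event at 8 is dropped because the window had already been emitted. The window starting at 20 stays pending until a later event advances the watermark.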

Organizations choose Flink for several reasons:

1. Continuous, low-latency stream processing.
2. Stateful operations for complex analytics.
3. Accurate event-time processing.
4. High scalability and fault tolerance.
5. Unified APIs for batch and streaming.
6. Integration with machine learning pipelines.
7. Support for multiple programming languages.
8. Seamless integration with big data platforms.
9. Innovations in Flink 2.0 for performance and efficiency.
10. Ability to process massive data volumes with operational efficiency.

Flink’s innovations position it as a top choice for stream processing systems in AI-driven, real-time environments.

BYOC & Serverless Models

The architecture of data streaming platforms has shifted toward serverless and multi-tenant models. BYOC (Bring Your Own Cloud) allows organizations to deploy single-tenant, on-premise-like systems in their own cloud accounts. This approach often leads to scaling challenges, operational overhead, and fragmented costs. BYOC pricing may appear lower initially, but customers bear additional infrastructure, security, and networking expenses.

Serverless and large-scale multi-tenant architectures leverage elastic cloud resources, enabling better scalability and reliability. These platforms charge based on actual consumption, allowing automatic scaling and resource pooling. Serverless models include infrastructure, security, and support costs in unified billing, improving total cost of ownership. Examples include Amazon EMR Serverless, Amazon Redshift Serverless, Amazon Aurora Serverless, Snowflake, MongoDB, Confluent, and Databricks.

Organizations benefit from serverless models in several ways:

- Elasticity and resource pooling handle bursty workloads efficiently.
- Higher reliability due to excess capacity for failures.
- Simpler management and improved security maturity.
- Better customer experience and faster innovation.

The industry trend favors serverless, multi-tenant SaaS offerings for superior scalability, cost-effectiveness, and operational efficiency. BYOC may suit early-stage startups or compliance-driven use cases, but lacks the economies of scale and elasticity needed for long-term success.

Serverless models empower organizations to scale data streaming platforms effortlessly, optimize costs, and focus on innovation.

Developer Experience in Stream Processing

Low-Code & No-Code Tools

Developers now benefit from low-code and no-code platforms that simplify streaming application development. These tools allow users to design, deploy, and monitor data streaming pipelines with drag-and-drop interfaces and pre-built connectors. Teams can create real-time dashboards, automate alerts, and integrate with cloud services without writing extensive code. This approach reduces development time and lowers the barrier for entry, enabling business analysts and citizen developers to participate in streaming projects.

Popular platforms offer visual workflow builders, schema mapping, and built-in connectors for sources like Kafka, AWS Kinesis, and Azure Event Hubs. Users can configure streaming jobs, set up transformations, and monitor pipeline health through intuitive dashboards. These tools support rapid prototyping and iterative development, making it easier to adapt to changing business requirements. Organizations see faster deployment cycles and improved collaboration between technical and non-technical teams.

Low-code and no-code solutions democratize access to data streaming, allowing more stakeholders to contribute to innovation.

Autonomous System Building

Autonomous systems are transforming the way organizations approach data streaming. AI-driven platforms now leverage machine learning, computer vision, and real-time data processing to enable decision-making with minimal human intervention. Industries such as automotive, robotics, and healthcare deploy autonomous systems for tasks like self-driving navigation, predictive maintenance, and real-time patient monitoring.

Recent advancements include real-time data processing technologies, in-memory databases, and distributed computing systems that evaluate and respond to continuous data streams instantly. AI integration enhances predictive analytics and autonomous navigation by analyzing streaming data in real time. Edge computing processes data closer to the source, reducing latency and improving security. Developers build scalable and resilient infrastructures to handle high-load and unpredictable scenarios.

Key strategies for successful autonomous system deployment involve starting with well-defined use cases, building trust through transparency, and maintaining human oversight. Modular architectures and dedicated integration teams address challenges like legacy compatibility and API governance. Security-by-design principles, continuous vulnerability assessments, and robust authentication protect against expanded attack surfaces. Emerging technologies such as quantum computing and federated learning promise faster optimization and enhanced security. Ethical frameworks and governance standards guide responsible development and future-proofing.

- Real-time navigation and collision avoidance in autonomous vehicles

- Smart manufacturing and predictive maintenance in industrial automation

- Automated diagnostics and patient monitoring in healthcare

Autonomous system building in streaming environments requires a balance of innovation, reliability, and ethical governance.

Open Source & Skills

Open source projects drive innovation in data streaming. Developers contribute to platforms like Apache Kafka, Apache Flink, and Spark Streaming, building scalable and reliable data streaming pipelines. The demand for skilled professionals continues to rise, with organizations seeking expertise in programming languages such as Python, Java, and Scala. Strong SQL skills, especially for analytical queries and distributed engines, remain essential.

Teams value experience with big data frameworks, cloud data platforms, and orchestration tools like Airflow and Prefect. Knowledge of event-driven architecture, distributed systems, and fault tolerance is critical for building robust streaming solutions. Developers must understand data modeling, warehousing, and security best practices. Hands-on experience with monitoring, alerting, and governance ensures operational excellence.

Soft skills such as problem-solving, collaboration, and communication help teams navigate complex streaming projects. Adaptability to evolving technologies and tools supports continuous learning and professional growth. Real-world project experience and open-source contributions demonstrate practical skills and commitment to the streaming community.

| Skill Area | Description | Example Tools / Technologies |
| --- | --- | --- |
| Programming Languages | Python, Java, Scala | Apache Kafka, Flink, Spark Streaming |
| SQL & Analytical Queries | Distributed SQL engines, query optimization | Hive, Presto, Trino |
| Cloud Data Platforms | AWS, Azure, Google Cloud | Redshift, Dataflow, Synapse |
| Orchestration & Monitoring | Workflow automation, pipeline health checks | Airflow, Prefect |
| Security & Governance | Data privacy, authentication, compliance | Security-by-design frameworks |
| Soft Skills | Problem-solving, collaboration, communication | Team-based streaming projects |

Open source and skill development remain central to advancing streaming technologies and building high-performing data streaming teams.

Edge Computing & Real-Time Data

Processing at the Edge

Edge computing transforms how organizations handle streaming workloads by moving computation closer to data sources. This approach enables immediate analysis and action, especially in environments where connectivity to centralized data centers is limited or unreliable. Common use cases for edge-based streaming include:

- Autonomous vehicles process sensor data in real time to support safe navigation and vehicle platooning.

- Oil and gas companies monitor remote assets, reducing downtime and improving safety by analyzing equipment data locally.

- Smart grids use edge analytics to balance energy loads and integrate renewable sources efficiently.

- Predictive maintenance systems detect equipment anomalies early by processing IoT sensor data at the source.

- Hospitals deploy on-site patient monitoring, ensuring privacy and delivering instant clinical alerts.

| Use Case | Description |
| --- | --- |
| Autonomous Vehicles | Enables real-time sensor data processing for safety-critical decisions and autonomous platooning of trucks. |
| Remote Monitoring of Oil and Gas | Processes data near remote equipment to allow real-time analytics and reduce reliance on cloud connectivity. |
| Smart Grid | Provides real-time energy usage visibility and management, optimizing consumption and grid stability. |
| Predictive Maintenance | Processes IoT sensor data locally to detect anomalies and predict equipment failures, reducing downtime. |
| In-Hospital Patient Monitoring | Performs on-site data processing for privacy, real-time alerts, and detailed patient condition dashboards. |

Edge processing reduces the need for constant cloud communication, which lowers bandwidth costs and enhances privacy.
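As a rough illustration of how local processing can cut cloud traffic, the sketch below summarizes raw sensor readings on the device and forwards only one summary per window. The `WindowSummary` type, the `summarize_at_edge` function, and the window size are assumptions made for this example; they do not come from any specific edge platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, Iterator, List


@dataclass
class WindowSummary:
    """Hypothetical summary record sent upstream instead of raw readings."""
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_at_edge(readings: Iterable[float], window_size: int) -> Iterator[WindowSummary]:
    """Group readings into fixed-size windows and yield one summary per window."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    window: List[float] = []
    for value in readings:
        window.append(value)
        if len(window) == window_size:
            yield WindowSummary(len(window), min(window), max(window), mean(window))
            window = []
    if window:
        # Flush the final, partially filled window so no reading is lost.
        yield WindowSummary(len(window), min(window), max(window), mean(window))


raw_readings = [20.0, 21.0, 19.0, 22.0, 50.0]
for summary in summarize_at_edge(raw_readings, window_size=4):
    print(summary)
```

With a window of four readings, five raw values shrink to two summaries. The uplink carries fewer messages, at the cost of losing per-reading detail, so the window size is a trade-off each team would tune for its own workload.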

Latency & Local Decisions

Streaming at the edge delivers significant latency improvements. By processing data close to its origin, organizations enable faster local decision-making. This capability proves essential for applications that require immediate responses, such as autonomous vehicles, healthcare monitoring, and industrial automation. Modern streaming frameworks like Apache Flink and Kafka Streams use operator pipelining and real-time model inference to minimize delays.

- Edge computing reduces latency, allowing systems to react to events in milliseconds.

- Streaming frameworks support real-time model training and inference at the edge, powering AI-driven decisions.

- SProBench, a benchmark suite for high-performance streaming, demonstrates that edge platforms can process millions of events per second, supporting scalability and throughput for demanding workloads.

- Frameworks such as Apache Flink and Kafka Streams offer exactly-once processing guarantees and fault tolerance, which are critical for real-time applications in finance, healthcare, and IoT.

Edge streaming also minimizes communication with centralized servers, which not only reduces latency but also improves data security.
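To make the idea of exactly-once results more concrete, here is a minimal sketch of one common building block: an idempotent consumer that ignores redelivered events by tracking their IDs. This is a simplification and an assumption for illustration. Production frameworks typically rely on checkpoints and transactional writes rather than an in-memory set, and the event shape `(event_id, account, amount)` is hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical event shape: (event_id, account, amount).
Event = Tuple[str, str, float]


def apply_events(events: Iterable[Event]) -> Dict[str, float]:
    """Apply each event to its account once, skipping redelivered event IDs."""
    seen_ids: Set[str] = set()
    balances: Dict[str, float] = {}
    for event_id, account, amount in events:
        if event_id in seen_ids:
            # A retry after a failure delivered this event again: skip it.
            continue
        seen_ids.add(event_id)
        balances[account] = balances.get(account, 0.0) + amount
    return balances


delivered = [
    ("e1", "acct-a", 10.0),
    ("e2", "acct-a", 5.0),
    ("e1", "acct-a", 10.0),  # duplicate delivery of e1
    ("e3", "acct-b", 2.5),
]
print(apply_events(delivered))
```

The duplicate `e1` does not change the balance, so an at-least-once delivery channel still yields the result of processing each event exactly once. A real deployment would need to persist and expire the set of seen IDs, which this sketch leaves out.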

Security & Data Sovereignty

Security and data sovereignty remain top priorities in edge-based streaming environments. Processing data locally on edge devices reduces exposure to interception and large-scale breaches. This approach empowers organizations to comply with regulations such as GDPR and CCPA by keeping sensitive information within geographic boundaries.

| Concern Category | Specific Issues | Recommended Solutions |
| --- | --- | --- |
| Security Concerns | Increased attack surface, device compromise, data interception, limited patching capabilities | Zero-trust frameworks, hardware encryption, regular over-the-air updates |
| Data Sovereignty Concerns | Multi-geography data handling, data residency, audit readiness | Edge governance policies, geo-fencing, edge-native compliance tools |

Local AI processing at the edge can keep personal identifiers and sensitive data on the device. This strategy limits cybersecurity risks and maintains control over data location. However, organizations face challenges such as fragmented IoT ecosystems, proprietary systems, and limited cybersecurity expertise. Standardized security protocols and open-source initiatives help address these barriers, supporting secure and compliant streaming at the edge.
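One way to picture geo-fencing combined with local redaction is the sketch below, which strips sensitive fields from a record and refuses to upload it to a region outside an allow-list. The field names, region codes, and the `prepare_for_upload` function are all hypothetical. A real policy would come from an organization's own compliance rules.

```python
from typing import Any, Dict, Set

# Hypothetical field names and region codes, for illustration only.
SENSITIVE_FIELDS: Set[str] = {"patient_name", "ssn", "email"}
ALLOWED_REGIONS: Set[str] = {"eu-west", "eu-central"}


def prepare_for_upload(record: Dict[str, Any], destination_region: str) -> Dict[str, Any]:
    """Return a redacted copy of the record, or raise if the region is not allowed."""
    if destination_region not in ALLOWED_REGIONS:
        raise PermissionError(
            f"region {destination_region!r} is outside the residency policy"
        )
    # Build a new dict so the original record stays intact on the device.
    return {key: value for key, value in record.items() if key not in SENSITIVE_FIELDS}


reading = {"patient_name": "Jane Doe", "heart_rate": 72, "device_id": "monitor-7"}
print(prepare_for_upload(reading, "eu-west"))
```

The check runs before any data leaves the device, so a misconfigured destination fails loudly instead of silently exporting records.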

Security, Governance & Challenges

Security in Real-Time Data

Security stands as a top priority in real-time data streaming. Organizations face constant threats from cyberattacks, data breaches, and insider risks. Real-time data often contains sensitive information, such as financial transactions or personal health records. Attackers target these streams because they move quickly and may bypass traditional security controls.

Companies implement encryption for data in transit and at rest. They use strong authentication and authorization to control access. Zero-trust frameworks help limit exposure by verifying every user and device. Regular vulnerability assessments and automated patching reduce the risk of exploitation. Monitoring systems detect unusual activity, such as spikes in data volume or unauthorized access attempts. Security teams respond quickly to alerts, minimizing potential damage.

Real-time data security requires continuous vigilance and proactive defense strategies.
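The monitoring idea mentioned above, flagging spikes in data volume, can be sketched with a simple moving baseline. This is an illustrative assumption rather than a recommended detector: the `find_volume_spikes` function, the history length, and the threshold factor are invented for the example.

```python
from collections import deque
from typing import Deque, Iterable, List


def find_volume_spikes(
    counts_per_window: Iterable[int], history: int = 5, factor: float = 3.0
) -> List[int]:
    """Return indexes of windows whose event count exceeds factor x the recent average."""
    recent: Deque[int] = deque(maxlen=history)
    spikes: List[int] = []
    for index, count in enumerate(counts_per_window):
        if len(recent) == history:
            baseline = sum(recent) / history
            if baseline > 0 and count > factor * baseline:
                spikes.append(index)
                # Keep the spike out of the baseline so it does not mask later ones.
                continue
        recent.append(count)
    return spikes


events_per_minute = [100, 110, 90, 105, 95, 400, 100, 98]
print(find_volume_spikes(events_per_minute))  # [5]
```

Only the sixth window (index 5) is flagged, because 400 events is more than three times the average of the previous five windows. In practice a team would route such a flag to its alerting system and tune the threshold to its own traffic patterns.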

Governance & Compliance

Governance and compliance shape how organizations manage real-time data. Regulations like GDPR, CCPA, and HIPAA demand strict controls over data collection, storage, and sharing. Companies must track where data originates, how it moves, and who accesses it. Data lineage tools provide visibility into these flows, supporting audits and compliance checks.

Organizations set clear data retention policies to avoid unnecessary storage and reduce risk. Automated data classification helps identify sensitive information and apply appropriate protections. Role-based access ensures only authorized users can view or modify critical data. Regular training keeps employees aware of compliance requirements and best practices.

A strong governance framework builds trust with customers and partners. It also reduces the risk of fines and reputational harm. Companies that prioritize compliance gain a competitive edge in regulated industries.
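Role-based access and retention policies can both be expressed as small, testable checks. The sketch below is a simplified assumption: the roles, permissions, and function names are hypothetical, and a real deployment would delegate these decisions to its identity and data catalog systems.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Set

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
}


def is_allowed(role: str, action: str) -> bool:
    """Return True only if the role is known and grants the requested action."""
    return action in ROLE_PERMISSIONS.get(role, set())


def is_expired(created_at: datetime, retention: timedelta, now: datetime) -> bool:
    """Return True when a record is older than its retention period."""
    return now - created_at > retention


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
record_created = datetime(2024, 12, 1, tzinfo=timezone.utc)

print(is_allowed("analyst", "write"))
print(is_expired(record_created, timedelta(days=30), now))
```

Unknown roles get an empty permission set, so access is denied by default. Passing `now` explicitly keeps the retention check deterministic, which makes it easier to audit and test.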

Adoption Challenges

Adopting real-time data streaming presents several challenges. Scalability, integration, cost, and organizational readiness often slow progress. Many organizations struggle to process large data volumes without delays. Integrating new streaming platforms with legacy systems can prove complex. Costs rise when teams over-provision resources or lack efficient scaling strategies.

To overcome these barriers, organizations use several effective strategies:

- Partition data into segments for parallel processing, which distributes workload and reduces latency.

- Add nodes dynamically to support horizontal scaling as data loads increase.

- Optimize network protocols and processing logic to minimize latency and improve responsiveness.

- Use dynamic resource allocation and autoscaling to match resources with demand, improving cost efficiency.

- Maintain state information for complex event processing, enabling seamless integration with other systems.

- Set up monitoring and alerting systems to detect performance issues and control costs.

- Adopt event-driven architectures and multi-tiered storage for flexible integration and efficient data management.

- Leverage managed services and serverless compute models to reduce infrastructure complexity and operational overhead.
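The first strategy in the list, partitioning data for parallel processing, is often implemented by hashing a record key. The sketch below uses CRC32 as a stand-in hash and hypothetical function names. Real streaming platforms ship their own partitioners, so treat this as an illustration of the principle only.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

KeyedEvent = Tuple[str, int]  # hypothetical (key, value) event shape


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a stable hash so equal keys always land together."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_events(
    events: Iterable[KeyedEvent], num_partitions: int
) -> Dict[int, List[KeyedEvent]]:
    """Group events by partition while preserving arrival order inside each partition."""
    partitions: Dict[int, List[KeyedEvent]] = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return dict(partitions)


stream = [("sensor-1", 10), ("sensor-2", 7), ("sensor-1", 12)]
print(partition_events(stream, num_partitions=3))
```

Because the hash is stable, every event for `sensor-1` goes to the same partition and keeps its order there, while different keys can be processed in parallel by separate workers. Adding nodes for horizontal scaling then amounts to assigning partitions to more workers.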

Organizations that address these challenges with a structured approach unlock the full value of real-time data streaming. They achieve greater agility, lower costs, and improved business outcomes.

Data leaders recognize the urgency of adopting stream processing and real-time analytics. These trends drive measurable business value and deliver a competitive edge. Teams that act now position their organizations for rapid innovation and operational excellence.

- Build AI-ready, real-time data infrastructures.

- Invest in unified analytics platforms and scalable streaming solutions.

- Foster a culture of continuous learning and adaptation.

Staying ahead requires commitment to evolving technologies and strategic investment in data-driven capabilities.

FAQ

What is stream processing?

Stream processing analyzes data as it arrives, enabling organizations to act on information instantly. This approach supports real-time decision-making and powers applications like fraud detection, predictive maintenance, and personalized recommendations.

How does stream processing differ from batch processing?

Batch processing handles large data sets at scheduled intervals. Stream processing works with continuous data flows, delivering insights in real time. Companies use stream processing for immediate actions, while batch processing suits historical analysis.
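A small sketch can make the difference concrete. Assuming a simple average as the metric, the batch function below needs the full data set before it answers, while the streaming function emits an updated answer after every event. Both function names are hypothetical and chosen for this example.

```python
from typing import Iterable, Iterator, List


def batch_average(values: List[float]) -> float:
    """Compute the average once, after all values have been collected."""
    return sum(values) / len(values)


def streaming_average(values: Iterable[float]) -> Iterator[float]:
    """Yield the running average after each value arrives."""
    count = 0
    total = 0.0
    for value in values:
        count += 1
        total += value
        yield total / count


readings = [2.0, 4.0, 6.0]
print(batch_average(readings))            # 4.0
print(list(streaming_average(readings)))  # [2.0, 3.0, 4.0]
```

Both approaches agree on the final value, but the streaming version has a usable result after the first event and keeps only a count and a total in memory.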

Why do businesses invest in real-time analytics?

Businesses gain a competitive edge with real-time analytics. They improve customer experiences, reduce operational costs, and respond quickly to market changes. Real-time insights drive innovation and support data-driven strategies.

Which industries benefit most from stream processing?

Finance, healthcare, manufacturing, retail, and logistics see the greatest impact. These sectors use real-time data to enhance security, optimize operations, and personalize services.

What challenges do organizations face with stream processing adoption?

Organizations encounter scalability, integration, and cost challenges. Legacy systems, data quality, and compliance requirements also create obstacles. Teams address these issues with unified architectures, managed services, and continuous monitoring.

How does AI enhance stream processing?

AI enables predictive and prescriptive analytics on streaming data. Models learn from live information, adapt to new patterns, and automate decisions. This integration improves accuracy and supports advanced use cases.

What security measures protect real-time data streams?

Companies use encryption, strong authentication, and zero-trust frameworks. Regular monitoring and automated patching help prevent breaches. Data lineage tools and access controls ensure compliance and protect sensitive information.
