1. Introduction and Understanding ECS

Arindam Baidya


Course Introduction

Hello everyone! My name is Sanjeev Thiyagarajan, and I will be your instructor for this lesson.

In this article, we will explore AWS Elastic Container Service (ECS) and learn how to deploy containerized applications efficiently. We will start with the fundamentals of ECS, covering:

An overview of ECS and how it works

ECS components, including tasks, task definitions, and services

A comparison of the different launch types and their best-use scenarios

Once you have a solid understanding of ECS concepts, we will move on to practical deployments by building applications on ECS. During this phase, you will learn essential configurations, their purposes, and when to use each option.

Finally, we will cover how to integrate an AWS load balancer to effectively manage traffic for your ECS cluster, ensuring scalability and high availability.

Let's begin our journey into ECS and discover why it is an excellent choice for deploying containerized applications.

What is ECS?

Amazon Elastic Container Service (ECS) is AWS’s managed container orchestration service. It serves the same purpose as orchestration tools like Docker Swarm and Kubernetes, but it is a proprietary service built, operated, and maintained by AWS.

Container Orchestration Explained

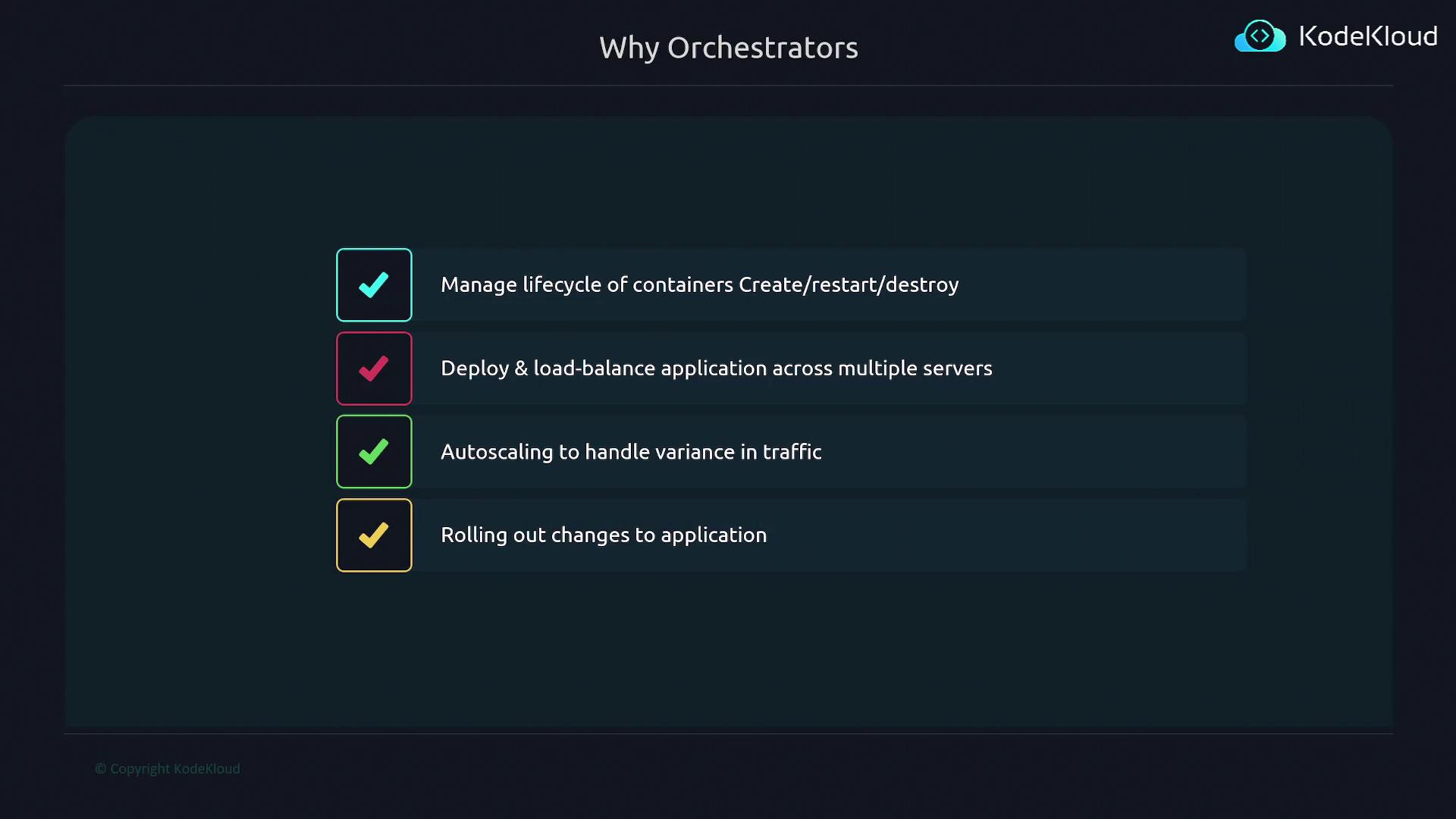

A container orchestrator manages the lifecycle of containers by performing key functions such as:

Identifying available resources or servers to schedule and run containers.

Creating and deploying container instances.

Monitoring container health and replacing or restarting containers if they crash.

Distributing and load balancing applications across multiple servers.

Note

Running containers on a single server with tools like Docker or Docker Compose may be fine during development, but in production that server becomes a single point of failure.

Advantages of Using ECS

Deploying an application on only one server risks downtime if that server fails. ECS overcomes this limitation by distributing your application across several servers. If one server fails, the orchestrator starts replacement containers on the remaining healthy servers, keeping the application available.

Another significant benefit is auto scaling. Imagine that your small application experiences a sudden spike in traffic. With Service Auto Scaling configured, ECS automatically launches additional copies of your containers (tasks) to handle the increased load. Once demand subsides, it scales back in to reduce costs.
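The scale-out and scale-in decision can be illustrated with a simplified proportional rule, similar in spirit to the target tracking policies that ECS Service Auto Scaling supports. This is a sketch under stated assumptions, not AWS's actual algorithm: the function name, the CPU figures, and the task-count bounds are hypothetical, and the real service adds safeguards such as cooldown periods.

```python
import math


def desired_task_count(current_tasks: int, current_metric: float, target_metric: float,
                       min_tasks: int = 1, max_tasks: int = 10) -> int:
    """Hypothetical proportional scaling rule (not AWS's exact algorithm).

    If the average metric (e.g. CPU %) is above the target, scale out in
    proportion; if it is below, scale in. The result is clamped to
    [min_tasks, max_tasks].
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    proposed = math.ceil(current_tasks * current_metric / target_metric)
    return max(min_tasks, min(max_tasks, proposed))


# Traffic spike: 2 tasks averaging 90% CPU against a 50% target
print(desired_task_count(2, 90.0, 50.0))  # 4
# Demand subsides: 4 tasks averaging 10% CPU
print(desired_task_count(4, 10.0, 50.0))  # 1
```

Rounding up with the ceiling errs on the side of extra capacity, which favors availability over cost during a spike.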

Seamless Application Updates

When you update your application's code, ECS can roll out the new version with minimal disruption. By default, it performs a rolling update: it starts tasks running the new version and stops the old ones in stages, so healthy tasks keep serving users throughout the deployment and downtime is kept to a minimum.
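ECS controls a rolling update with two deployment settings, minimumHealthyPercent and maximumPercent, which bound how many tasks may be running while old tasks are replaced by new ones. The sketch below computes those bounds (rounding the lower limit up and the upper limit down, as the ECS documentation describes) and simulates a rollout. The function names are hypothetical, and the model assumes new tasks are healthy the moment they start, whereas the real scheduler waits for them to pass health checks.

```python
import math
from typing import List, Tuple


def rolling_update_bounds(desired_count: int, minimum_healthy_percent: int,
                          maximum_percent: int) -> Tuple[int, int]:
    """Return (min_running, max_running) during a rolling deployment."""
    min_running = math.ceil(desired_count * minimum_healthy_percent / 100)   # round up
    max_running = math.floor(desired_count * maximum_percent / 100)          # round down
    return min_running, max_running


def simulate_rollout(desired_count: int, minimum_healthy_percent: int,
                     maximum_percent: int) -> List[Tuple[int, int]]:
    """Return (old_tasks, new_tasks) after each step of a simplified rollout.

    Simplification: new tasks are assumed healthy as soon as they start.
    """
    min_running, max_running = rolling_update_bounds(
        desired_count, minimum_healthy_percent, maximum_percent)
    old, new = desired_count, 0
    steps = [(old, new)]
    while old > 0 or new < desired_count:
        # Start as many new-version tasks as the upper bound allows.
        start = max(0, min(desired_count - new, max_running - (old + new)))
        new += start
        # Stop as many old-version tasks as the lower bound allows.
        stop = max(0, min(old, (old + new) - min_running))
        old -= stop
        if start == 0 and stop == 0:
            raise ValueError("these percentages leave no room to replace tasks")
        steps.append((old, new))
    return steps


print(rolling_update_bounds(4, 50, 150))  # (2, 6)
print(simulate_rollout(4, 50, 150))       # [(4, 0), (0, 2), (0, 4)]
```

With 4 tasks, a 50% minimum, and a 150% maximum, at most 6 tasks may run and at least 2 must stay up, so in this simplified model the new version arrives in two batches.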

Summary

ECS automates key processes in container management, including:

Container lifecycle management

Deployment and load balancing

Auto scaling based on demand

Seamless rollout of application updates

This robust orchestration system makes ECS an ideal solution for managing containerized applications in production environments.

For more detailed information about container orchestration and related AWS services, visit the AWS Documentation.

ECS vs Others

In this article, we look at how applications are deployed in a plain Docker environment, without an orchestrator, and how that compares with ECS. Traditionally, most users deploy containers with the docker run command or Docker Compose. However, these approaches have limitations, especially in multi-server environments and when scaling dynamically.

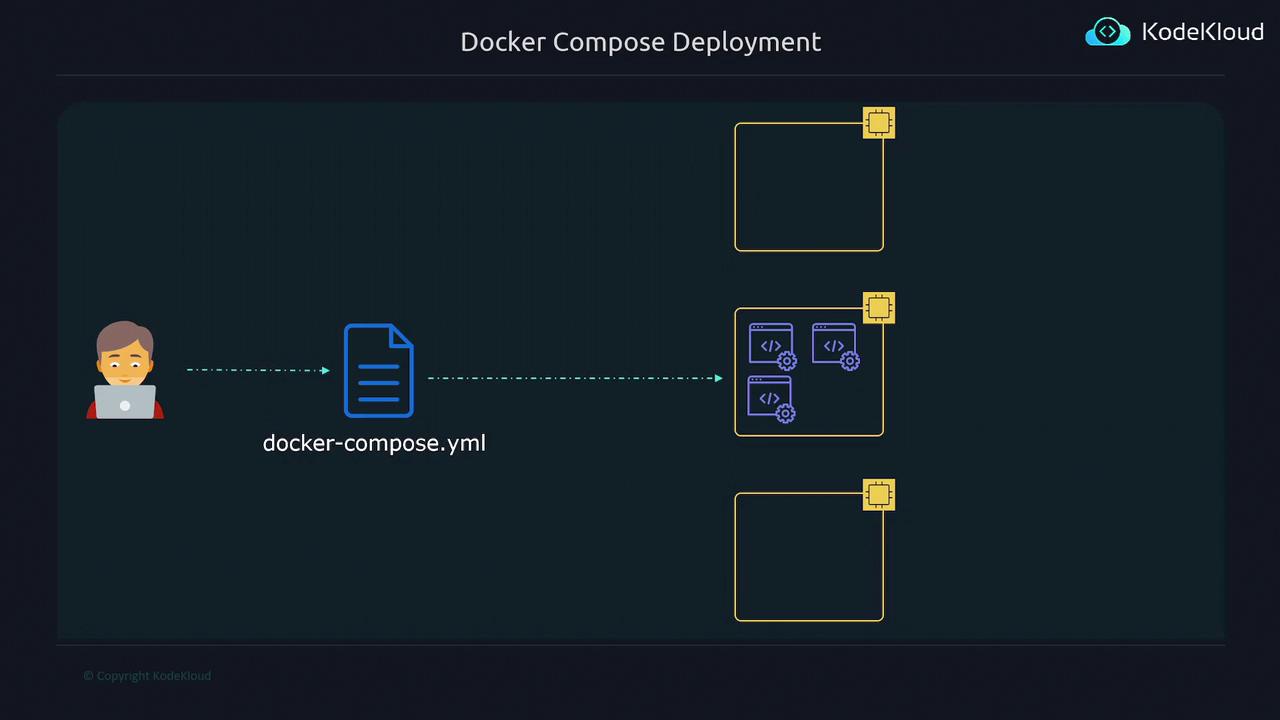

Deploying with Docker Compose

A typical Docker Compose file encapsulates all of a container's configuration details. Now imagine a scenario with multiple servers. By default, docker run and Docker Compose manage containers on a single host only. You can copy the Compose file to each server, but this simply launches independent instances of your application; nothing coordinates the deployments across servers.

Note

Docker Compose is excellent for local development and single-host deployments but lacks cross-host orchestration capabilities.

Scaling Challenges and Continuous Updates

Scaling your application to handle increased traffic in a Docker Compose environment is not seamless. You must scale up or down manually, because nothing adjusts the number of containers automatically in response to load. Moreover, rolling out updates without causing downtime or disrupting end-user traffic remains a significant challenge in non-orchestrated environments.

Traditional Orchestrators

Traditional container orchestrators such as Kubernetes, HashiCorp Nomad, and Apache Mesos offer robust solutions to these challenges. They provide automated scaling, coordinated deployments across hosts, and smooth updates. However, they can be complex to set up and maintain, especially for users who need a simpler, more streamlined approach.

AWS Elastic Container Service (ECS)

AWS introduced Elastic Container Service (ECS) as a simpler alternative. You describe your application's configuration through the AWS Management Console (or the CLI and APIs), while ECS handles deployment, scheduling, scaling, and overall container management behind the scenes.

Key Benefit

ECS simplifies container orchestration by abstracting the heavy lifting of deployment, scaling, and management. This makes it an ideal choice for users looking to avoid the complexities of traditional orchestrators.

Summary Comparison

| Feature | Docker Compose | Traditional Orchestrators | AWS ECS |
|---|---|---|---|
| Deployment Scope | Single host only | Multi-host orchestration | Managed multi-host deployments |
| Scaling | Manual scaling | Automated scaling | Automated scaling with simplified GUI |
| Updates & Rollouts | Challenging | Seamless updates | Seamless updates managed by AWS |
| Setup Complexity | Minimal | High (requires significant setup and configuration) | Lower complexity, managed service |

Conclusion

While Docker Compose serves well for isolated or local container deployments, its limitations become evident in multi-server setups and when dynamic scaling is required. Traditional orchestrators offer powerful features but come at the cost of increased complexity. AWS ECS bridges this gap by delivering a user-friendly solution with robust orchestration capabilities.


ECS Infrastructure and Launch Types

Amazon Elastic Container Service (AWS ECS) offers two primary launch types for containerized applications: the EC2 launch type and the Fargate launch type. In this article, we delve into both options and explain the underlying infrastructure concepts that power them.

Understanding ECS Clusters

Before exploring the specifics of each launch type, it is essential to understand what an ECS cluster is. An ECS cluster is the logical grouping into which your containers are deployed. The cluster itself does not provide compute capacity; ECS orchestrates the containers, while the compute comes from the infrastructure attached to the cluster.

ECS is designed exclusively for container management and does not include built-in server or compute functionality. When a container is deployed, it must run on a physical or virtual machine. Typically, ECS uses a pool of EC2 instances or similar infrastructure to host these containers. In essence, an ECS cluster is a grouping of compute resources (such as virtual machines on EC2) where your containerized applications run.
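To make "a grouping of compute resources" concrete, here is a toy model of a cluster as a list of EC2 instances, each with a CPU budget (in CPU units, where ECS counts 1,024 units per vCPU) and a memory budget, plus a first-fit placement function. The class, the function, and the instance IDs are hypothetical; the real ECS scheduler offers placement strategies such as binpack, spread, and random.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ContainerInstance:
    """Hypothetical model of an EC2 instance registered with an ECS cluster."""
    instance_id: str
    cpu_units: int          # ECS measures CPU in units: 1024 units = 1 vCPU
    memory_mib: int
    used_cpu: int = 0
    used_memory_mib: int = 0

    def can_fit(self, cpu: int, memory_mib: int) -> bool:
        return (self.cpu_units - self.used_cpu >= cpu
                and self.memory_mib - self.used_memory_mib >= memory_mib)


def place_task(cluster: List[ContainerInstance], cpu: int, memory_mib: int) -> Optional[str]:
    """First-fit placement: reserve capacity on the first instance that has room."""
    for instance in cluster:
        if instance.can_fit(cpu, memory_mib):
            instance.used_cpu += cpu
            instance.used_memory_mib += memory_mib
            return instance.instance_id
    return None  # cluster is full; with the EC2 launch type you would add instances


cluster = [
    ContainerInstance("i-aaa", cpu_units=2048, memory_mib=4096),  # 2 vCPU
    ContainerInstance("i-bbb", cpu_units=1024, memory_mib=2048),  # 1 vCPU
]
placements = [place_task(cluster, cpu=1024, memory_mib=1536) for _ in range(4)]
print(placements)  # ['i-aaa', 'i-aaa', 'i-bbb', None]
```

The fourth task finds no room, which is exactly the situation where, with the EC2 launch type, you are responsible for adding capacity.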

Key Point

ECS manages the container lifecycle, while the containers themselves run on the underlying compute infrastructure: EC2 instances that you provision, or capacity that AWS provisions for you with Fargate.

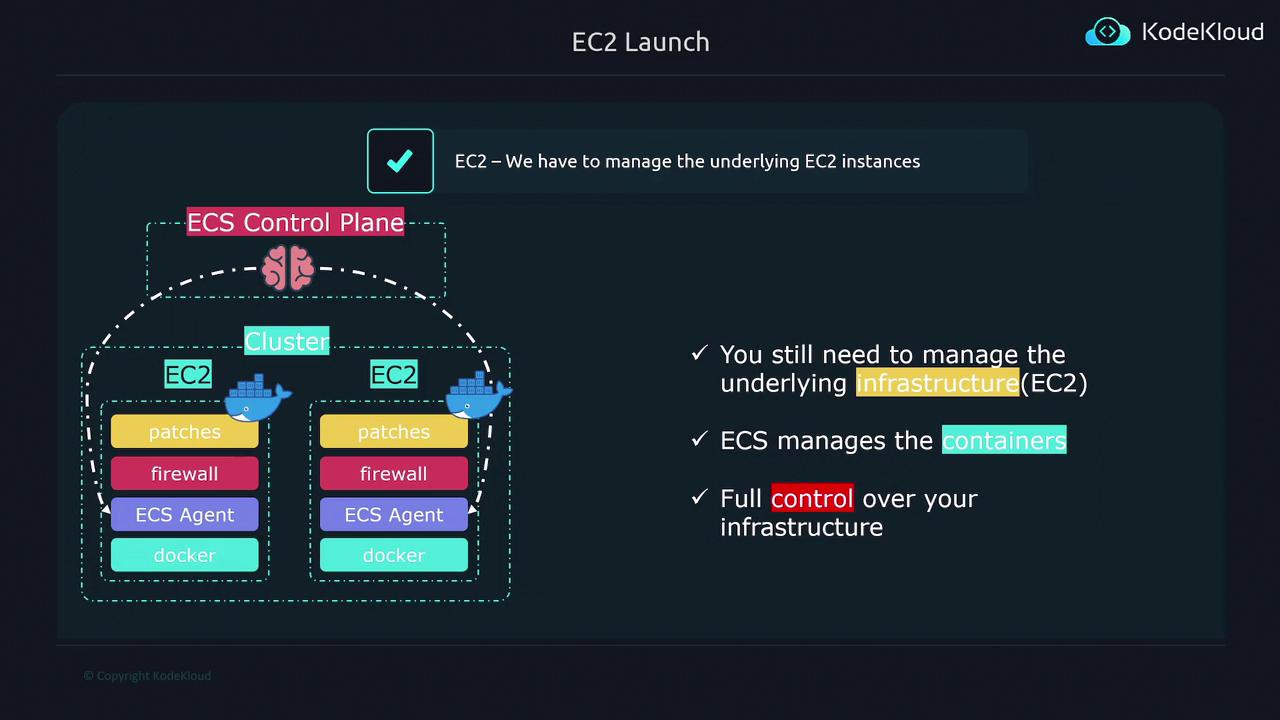

EC2 Launch Type

The EC2 launch type gives you full control over your compute infrastructure. With this configuration, you manage the EC2 instances in your cluster, while ECS handles the orchestration of container operations. Consider the following key points when using the EC2 launch type:

The ECS control plane orchestrates container operations but does not manage compute resources.

You are responsible for provisioning and managing the EC2 instances that form your cluster.

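Every EC2 instance must have Docker installed to run containers and must run the ECS container agent, which registers the instance with your cluster and communicates with the ECS control plane. The Amazon ECS-optimized AMIs come with both preinstalled.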

Routine tasks such as configuring firewalls, applying security patches, and performing upgrades are handled by you.

Summary

When using the EC2 launch type, ECS simplifies container lifecycle management while you maintain full control over the infrastructure, allowing customized configuration and security management.

EC2 vs Fargate

AWS Fargate removes the need to manage the underlying server infrastructure. With ECS on Fargate, you can concentrate on deploying your containerized applications without provisioning, managing, or scaling servers yourself. The ECS control plane and clusters work the same way as with the EC2 launch type; the difference is that Fargate is a serverless compute engine that abstracts away the compute infrastructure.

Key Benefit

One of the primary advantages of using Fargate is cost efficiency. You pay for the vCPU and memory your tasks request, only while those tasks are running, because Fargate provisions compute resources when tasks start and releases them when tasks stop.
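Because billing follows the vCPU and memory that a task requests for as long as it runs, you can estimate the compute cost with simple arithmetic. The sketch below is hypothetical: it assumes per-second billing with a one-minute minimum (how AWS bills Linux tasks on Fargate at the time of writing; verify against the current pricing page) and uses placeholder prices rather than real rates.

```python
import math


def fargate_task_cost(vcpu: float, memory_gb: float, runtime_seconds: float,
                      price_per_vcpu_hour: float, price_per_gb_hour: float) -> float:
    """Estimate the compute cost of one Fargate task.

    Assumes per-second billing with a one-minute minimum. Prices are passed in
    because they vary by region and change over time.
    """
    billed_seconds = max(60, math.ceil(runtime_seconds))
    billed_hours = billed_seconds / 3600
    return (vcpu * price_per_vcpu_hour + memory_gb * price_per_gb_hour) * billed_hours


# Placeholder prices for illustration only, NOT real AWS rates
VCPU_HOUR = 0.04
GB_HOUR = 0.004

# A 2-hour run of a 0.5 vCPU / 1 GB task
print(round(fargate_task_cost(0.5, 1.0, 7200, VCPU_HOUR, GB_HOUR), 4))  # 0.048

# Under this model, a 10-second task is billed as a full minute
short = fargate_task_cost(0.5, 1.0, 10, VCPU_HOUR, GB_HOUR)
one_minute = fargate_task_cost(0.5, 1.0, 60, VCPU_HOUR, GB_HOUR)
print(short == one_minute)  # True
```

When a service scales down to fewer tasks, the terms for the stopped tasks simply disappear from the bill, which is the cost advantage described above.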

When you deploy an application to Amazon ECS using Fargate, the process unfolds as follows:

Because a Fargate-backed cluster has no servers of its own, ECS has no existing server on which to place your task.

ECS requests capacity from Fargate.

Fargate provisions compute resources on demand, sized according to the CPU and memory in your task definition.

Once these resources are available, ECS launches your containers on the newly created infrastructure.

This serverless approach ensures that when you scale down or remove your application, Fargate decommissions the underlying resources automatically, thereby eliminating costs associated with idle servers.

ECS Tasks

In this article, we will explore the essential components required to configure an ECS task, beginning with the ECS task definition file.

Overview

Before diving into the task definition, you need a container image of your application that ECS can pull.

Dockerizing Your Application

The process starts with dockerizing your application. When your Dockerfile is ready, build a Docker image and push it to a container registry such as Docker Hub or Amazon Elastic Container Registry (ECR). This image serves as the base for your ECS task configuration.

Creating an ECS Task Definition

After successfully uploading your Docker image, the next step is to create an ECS task definition file. This file acts as a blueprint for how your container should be launched and configured within ECS. It includes important configurations such as:

CPU allocation

Memory limits

The Docker image to use

Ports to expose

Volumes to attach

In this respect, an ECS task definition is similar in purpose to a Docker Compose file. Consider the following basic Docker Compose example:

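```yaml
services:
  web:
    image: kodekloud-web
    ports:
      - "8000:5000"        # host port 8000 -> container port 5000
    volumes:
      - .:/code            # bind-mount the project directory at /code
    depends_on:
      - redis
    deploy:
      resources:
        limits:
          cpus: '0.50'     # at most half a CPU
          memory: 50M      # memory limit of 50M
  redis:
    image: redis:alpine    # added so depends_on refers to a defined service
```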

This Compose configuration specifies the behavior and resource allocation for the web container. Likewise, a single ECS task definition can include configurations for multiple containers, so you can either run your entire application from one task definition or split it across several task definitions as needed.
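To see how the two formats line up, here is a rough translation of the Compose service above into the JSON structure of an ECS task definition, built as a Python dictionary. The field names (family, containerDefinitions, portMappings, mountPoints, dependsOn, and so on) follow the ECS task definition format, but the family name, the host path for the volume, the redis container's memory, and the two conversion helpers are assumptions made for illustration.

```python
import json


def compose_cpus_to_units(cpus: str) -> int:
    """Compose 'cpus' (fraction of a CPU) -> ECS CPU units (1024 units per vCPU)."""
    return int(float(cpus) * 1024)


def compose_memory_to_mib(memory: str) -> int:
    """Simplified parser for Compose memory strings such as '50M' or '1G'."""
    multipliers = {"M": 1, "G": 1024}
    return int(float(memory[:-1]) * multipliers[memory[-1].upper()])


task_definition = {
    "family": "kodekloud-web",                 # hypothetical family name
    "networkMode": "bridge",                   # bridge mode allows hostPort != containerPort
    "requiresCompatibilities": ["EC2"],
    "volumes": [{"name": "code", "host": {"sourcePath": "/srv/code"}}],  # hypothetical host path
    "containerDefinitions": [
        {
            "name": "web",
            "image": "kodekloud-web",
            "essential": True,
            "cpu": compose_cpus_to_units("0.50"),      # 512 CPU units
            "memory": compose_memory_to_mib("50M"),    # hard limit in MiB
            "portMappings": [{"hostPort": 8000, "containerPort": 5000, "protocol": "tcp"}],
            "mountPoints": [{"sourceVolume": "code", "containerPath": "/code"}],
            "dependsOn": [{"containerName": "redis", "condition": "START"}],
        },
        # Hypothetical redis container so that dependsOn has a target
        {"name": "redis", "image": "redis:alpine", "essential": True, "memory": 128},
    ],
}

print(json.dumps(task_definition, indent=2))
```

The printed JSON could be saved to a file and registered with aws ecs register-task-definition --cli-input-json, or the dictionary passed to boto3's register_task_definition; in practice you would choose CPU and memory values suited to your workload rather than copying the Compose limits verbatim.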

Understanding ECS Tasks vs. Task Definitions

It's important to distinguish between a task definition and a task:

Task Definition: The blueprint that outlines how your container should run (including CPU, memory, and other configurations).

Task: An instance of a task definition; the actual running container (or group of containers) created from that blueprint.

If you require multiple instances of your application, you simply create additional tasks based on the same task definition.
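The relationship can be modeled in a few lines: one immutable blueprint, many running instances that point back to it. In ECS, a task definition is identified by its family and revision (for example, family:1), and changing it means registering a new revision. The classes and the run_tasks helper below are hypothetical and only mirror that idea.

```python
import itertools
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TaskDefinition:
    """The blueprint. Frozen because a registered revision cannot be edited."""
    family: str
    revision: int
    image: str
    cpu: int
    memory: int

    @property
    def ref(self) -> str:
        return f"{self.family}:{self.revision}"


@dataclass
class Task:
    """A running instance created from a task definition."""
    task_id: str
    definition: TaskDefinition
    status: str = "RUNNING"


_next_id = itertools.count(1)


def run_tasks(definition: TaskDefinition, count: int) -> List[Task]:
    """Hypothetical helper: create `count` tasks from the same blueprint."""
    return [Task(f"task-{next(_next_id)}", definition) for _ in range(count)]


web_v1 = TaskDefinition("kodekloud-web", 1, "kodekloud-web", cpu=512, memory=50)
tasks = run_tasks(web_v1, 2)
for task in tasks:
    print(task.task_id, "->", task.definition.ref)
# task-1 -> kodekloud-web:1
# task-2 -> kodekloud-web:1
```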

Key Insight

Think of a Docker image as the blueprint for a container. In a similar manner, the ECS task definition provides the blueprint for how your container should operate. The running container, or ECS task, is the instantiated version of that blueprint.

Summary Table: ECS Task Components

| Component | Description | Example Config Option |
|---|---|---|
| Docker Image | The base image created from your Dockerfile | kodekloud-web |
| Task Definition | Blueprint for container configurations | CPU, Memory, Ports, Volumes |
| ECS Task | Running instance of a task definition | Two or more tasks per definition |

By following these guidelines, you'll be able to set up your ECS tasks efficiently and ensure that your container configurations are correctly implemented for scalable applications.


Happy containerizing!

ECS Services

In this article, we will explore the concept of an ECS service and how it ensures consistent and reliable operations for your containerized applications.

What is a Service?

An ECS service maintains a specified number of tasks running at all times. Suppose you have a simple Python application and require two running instances (tasks) at all times. If you configure the ECS service with a desired count of two, the service works to keep exactly two instances of your application running.

If no instances are running when the service is initiated, it will launch two new instances and deploy them across the available servers within the cluster.

Self-Healing Mechanism

ECS services constantly monitor the running tasks. If a task's container crashes or stops unexpectedly, the service automatically launches a replacement task. This self-healing behavior maintains the intended number of instances, providing continuous availability for your application.

ECS services also account for the underlying EC2 instances. If one of these instances fails, the tasks running on it stop; the service detects that it has fallen below its desired count and launches replacement tasks on healthy EC2 instances.
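This self-healing behavior boils down to a reconciliation loop: compare the tasks that are actually running with the desired count, and launch replacements on healthy instances. The sketch below is a toy model, not the ECS scheduler: ClusterState, reconcile, and the instance IDs are hypothetical, placement uses a simple least-loaded spread rule, and scale-in, deployments, and Availability Zones are ignored.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ClusterState:
    """Toy cluster: healthy instances and which instance each task runs on."""
    healthy_instances: List[str]
    tasks: Dict[str, str] = field(default_factory=dict)  # task_id -> instance_id


_task_ids = itertools.count(1)


def reconcile(state: ClusterState, desired_count: int) -> List[str]:
    """One pass of a simplified service scheduler; returns newly launched task IDs.

    Handles only the 'too few tasks' case (no scale-in, no deployments).
    """
    # Tasks on failed instances have stopped, so forget them.
    state.tasks = {t: i for t, i in state.tasks.items() if i in state.healthy_instances}
    launched: List[str] = []
    while state.healthy_instances and len(state.tasks) < desired_count:
        # Spread: place the next task on the instance running the fewest tasks.
        load = {i: 0 for i in state.healthy_instances}
        for instance in state.tasks.values():
            load[instance] += 1
        target = min(load, key=load.get)
        task_id = f"task-{next(_task_ids)}"
        state.tasks[task_id] = target
        launched.append(task_id)
    return launched


state = ClusterState(healthy_instances=["i-aaa", "i-bbb"])
print(reconcile(state, 2))   # ['task-1', 'task-2']  initial launch, one per instance

del state.tasks["task-1"]    # simulate a crashed container
print(reconcile(state, 2))   # ['task-3']  replacement lands on i-aaa

state.healthy_instances.remove("i-bbb")  # simulate an instance failure
print(reconcile(state, 2))   # ['task-4']  task-2 is gone, replacement goes to i-aaa
print(state.tasks)           # {'task-3': 'i-aaa', 'task-4': 'i-aaa'}
```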

By utilizing ECS services, you can achieve high availability and seamless scalability for your containerized applications, ensuring an optimal balance between performance and resilience.

Load Balancers

This article explores the significance of load balancers in managing network traffic for scalable applications. Load balancers act as intelligent traffic managers that ensure incoming requests are evenly distributed across multiple service instances, thus enhancing both performance and fault tolerance.

Imagine deploying an application with multiple instances running on various servers. In such a scenario, a load balancer directs external traffic efficiently to these instances, ensuring that workloads are balanced across all available resources.

When you attach a load balancer to a service, it continuously runs health checks against each instance of your application. When traffic arrives, the load balancer routes each request only to healthy instances, improving the application's responsiveness and resilience.

Scalability Insight

As you scale your application by adding new tasks, ECS automatically registers them with the load balancer, which begins routing traffic to them once they pass their health checks. This dynamic adjustment keeps performance consistent as your application grows.
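The routing behavior can be sketched as round robin over healthy targets, which is the default routing algorithm for Application Load Balancer target groups. This toy class is hypothetical: a real load balancer probes targets over the network, and with ECS the service registers and deregisters tasks in the target group for you. The IP:port targets are made up.

```python
import itertools
from typing import List, Set


class RoundRobinBalancer:
    """Toy load balancer: round robin across targets that pass health checks."""

    def __init__(self) -> None:
        self.targets: List[str] = []
        self.healthy: Set[str] = set()
        self._counter = itertools.count()

    def register(self, target: str) -> None:
        # Stands in for ECS registering a new task with the target group.
        self.targets.append(target)
        self.healthy.add(target)  # assume the first health check passes

    def mark_unhealthy(self, target: str) -> None:
        # Stands in for a failed health check.
        self.healthy.discard(target)

    def route(self) -> str:
        candidates = [t for t in self.targets if t in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy targets")
        return candidates[next(self._counter) % len(candidates)]


lb = RoundRobinBalancer()
lb.register("10.0.1.10:5000")
lb.register("10.0.2.20:5000")
print([lb.route() for _ in range(4)])
# ['10.0.1.10:5000', '10.0.2.20:5000', '10.0.1.10:5000', '10.0.2.20:5000']

lb.register("10.0.3.30:5000")            # scale out: the new task starts receiving traffic
scaled = {lb.route() for _ in range(3)}
print(sorted(scaled))  # ['10.0.1.10:5000', '10.0.2.20:5000', '10.0.3.30:5000']

lb.mark_unhealthy("10.0.2.20:5000")      # a failing task is taken out of rotation
after_failure = {lb.route() for _ in range(4)}
print(sorted(after_failure))  # ['10.0.1.10:5000', '10.0.3.30:5000']
```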

By integrating a load balancer into your architecture, you improve resource utilization and ensure smoother service delivery. This integration is key to maintaining a robust, scalable, and fault-tolerant application infrastructure.



Written by

Arindam Baidya


🚀 Aspiring DevOps & Cloud Engineer | Passionate about Automation, CI/CD, Containers, and Cloud Infrastructure ☁️ I work with Docker, Kubernetes, Jenkins, Terraform, AWS (IAM & S3), Linux, Shell Scripting, and Git to build efficient, scalable, and secure systems. Currently contributing to DevOps-driven projects at Assurex e-Consultant while continuously expanding my skills through hands-on cloud and automation projects. Sharing my learning journey, projects, and tutorials on DevOps, AWS, and cloud technologies to help others grow in their tech careers. 💡 Let’s learn, build, and innovate together!