Ship small, ship often: Practical Kubernetes CI/CD on a budget (GitHub Actions + Helm)

Leandro Nuñez

Audience: freelancers and small teams who need reliable, inexpensive delivery to Kubernetes. This is a long, hands-on guide with lots of copy-pasteable code and extra explanations.

TL;DR

Build Docker images in GitHub Actions, tag each image with the commit SHA, push to GitHub Container Registry (GHCR) using the built-in GITHUB_TOKEN, and deploy to Kubernetes with Helm. This keeps infra simple and costs low because your code, CI, registry, and permissions all live inside GitHub. (GitHub Docs)

Rely on Kubernetes Deployments for rolling updates, and wire readiness + liveness probes so pods don’t get traffic until they’re ready (and get restarted if they hang). Make the pipeline wait with helm upgrade --wait --atomic and kubectl rollout status so a “green” job actually means the app is healthy. (Kubernetes, Helm)

Three recurring mistakes I see: (1) shipping :latest, (2) not waiting for rollouts, (3) giving CI cluster-admin. Fixes: tag or digest-pin images, wait on the rollout, and scope deploy permissions with RBAC in the target namespace. (Kubernetes)

Table of Contents

Why this stack fits freelancers

The architecture in one picture

One-time setup & context you’ll need

A tiny sample app (Node.js) you can actually run

Dockerfile: multi-stage build, explained

Helm chart structure & values: what’s going on and why

CI: GitHub Actions workflow to build/tag/push (with caching)

CD: Helm upgrade, safe timeouts, and rollout checks

Health probes that prevent surprise downtime

Pitfalls I see weekly (and how to avoid them)

Minimal RBAC for CI (no more cluster-admin)

Private registries & imagePullSecrets (GHCR note)

Rollbacks, who does what, and a quick “am I healthy?”

A reusable repo layout & a secrets checklist

Optional: the same loop with Kustomize

Appendix: extra CI steps (tests, concurrency, smoke checks)

References

1) Why this stack fits freelancers

When you’re solo or working with a tiny team, the time you don’t spend gluing tools together is time you can bill. The combination below keeps blast radius small and the moving parts understandable:

GitHub Actions + GHCR: you already store your code in GitHub; Actions runs your builds and GHCR keeps your images in the same trust boundary. With proper workflow permissions, the built-in GITHUB_TOKEN is enough to push images: no extra tokens to rotate, no additional accounts to manage. (GitHub Docs)

Helm: clients expect Helm charts. Charts are just templates with a clear place for defaults (values.yaml), and a simple way to override them per environment. (Helm)

Kubernetes Deployments: rolling updates are the default. Kubernetes gradually replaces old pods with new ones and only declares success after the new ReplicaSet becomes ready. That’s near-zero downtime when you wire probes and wait for the rollout. (Kubernetes)

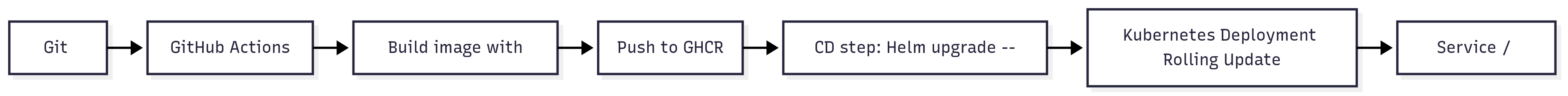

2) The architecture in one picture

The last step matters: your pipeline blocks until the Deployment is actually healthy (or times out), which means green pipelines line up with a healthy app, not just “manifests applied”. (Kubernetes)

3) One-time setup & context you’ll need

kubectl on the runner and on your machine. Use the official install so versions match your cluster’s supported skew (typically ±1 minor). (Kubernetes)

Helm v3 on the runner. The official install page documents the script many people use in CI. (Helm)

GHCR enabled in your org/repo. Add the OCI label org.opencontainers.image.source in your Dockerfile so GHCR auto-links the package to your repo; use GITHUB_TOKEN with packages: write scoped by workflow permissions. (GitHub Docs)

Why versions/skew matters: if your kubectl is too far ahead/behind, you’ll chase weird errors. The official docs call out the “within one minor” rule. (Kubernetes)
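To catch skew before it bites, a pipeline step can compare the two minor versions. The sketch below is a minimal, hypothetical helper (the function names are mine, not part of kubectl). It assumes you feed it the minor numbers reported by kubectl version -o json, which some providers suffix with a + (for example 29+).

```shell
# Hypothetical helper: succeed when kubectl is within one minor version
# of the API server. Inputs are minor version strings such as "29" or "29+".

strip_minor() {
  # Drop everything that is not a digit ("29+" -> "29").
  printf '%s' "$1" | tr -cd '0-9'
}

skew_ok() {
  local client server diff
  client=$(strip_minor "$1")
  server=$(strip_minor "$2")
  diff=$(( client - server ))
  # The absolute difference must be 0 or 1.
  [ "${diff#-}" -le 1 ]
}
```

In a workflow step this could be called as skew_ok "$(kubectl version -o json | jq -r .clientVersion.minor)" "$(kubectl version -o json | jq -r .serverVersion.minor)" || exit 1, assuming jq is available on the runner.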

4) A tiny sample app (Node.js) you can actually run

Two endpoints that map directly to Kubernetes probes:

// app/server.js
import http from 'node:http';

const port = process.env.PORT || 3000;
let ready = false;

// Simulate warm-up work: DB connection, JIT, caches...
setTimeout(() => (ready = true), 3000);

const server = http.createServer((req, res) => {
  // Liveness probe: the process is up and able to answer.
  if (req.url === '/livez') return res.end('ok');

  // Readiness probe: answer 503 until warm-up finishes. An httpGet probe
  // only looks at the status code, so a 200 with body "starting" would pass.
  if (req.url === '/healthz') {
    res.statusCode = ready ? 200 : 503;
    return res.end(ready ? 'ok' : 'starting');
  }

  res.writeHead(200, { 'content-type': 'text/plain' });
  res.end('hello');
});

server.listen(port, () => console.log(`listening on ${port}`));

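A minimal package.json so CI can run tests quickly. The test script starts the server in the background, requests / once, and fails unless the response is a 200. Commit the package-lock.json that npm install generates as well, because npm ci refuses to run without one:

{
  "name": "demo-app",
  "type": "module",
  "scripts": {
    "start": "node app/server.js",
    "test": "node app/server.js & pid=$!; sleep 1; node -e \"const http=require('http');http.get('http://127.0.0.1:3000',r=>process.exit(r.statusCode===200?0:1))\"; status=$?; kill $pid; exit $status"
  },
  "dependencies": {}
}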

The point of /healthz and /livez: Kubernetes treats them differently—readiness gates traffic; liveness restarts stuck containers. We’ll wire both into the Deployment in a moment. (Kubernetes)

5) Dockerfile: multi-stage build, explained

Why multi-stage? Build tools (compilers, bundlers) aren’t needed at runtime. Splitting build and runtime stages makes smaller, faster, and safer images. The official Docker docs explicitly recommend multi-stage builds for production. (Docker Documentation)

# Dockerfile
# -------- build stage --------
FROM node:20 AS build
WORKDIR /app
COPY package*.json ./
# install everything here, dev dependencies included, so build tooling can run
RUN npm ci
COPY . .
# leave only prod deps
RUN npm prune --omit=dev

# -------- runtime stage --------
FROM node:20-slim
WORKDIR /app
COPY --from=build /app ./
EXPOSE 3000
CMD ["node","app/server.js"]

# helps GHCR link this image to your repo automatically
LABEL org.opencontainers.image.source="https://github.com/your-org/your-repo"

If you ever need multi-arch images (arm64 laptop, amd64 server), Buildx and QEMU make that possible with one workflow. We’ll stick to a single arch for speed. (Docker Documentation)
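Because the build stage runs COPY . ., anything in the repo directory ends up in the build context. A .dockerignore keeps local node_modules, Git history, and deployment files out of it. The entries below are an assumption about a typical layout, and the function name is hypothetical:

```shell
# Hypothetical helper: write a starter .dockerignore into the given directory.
write_dockerignore() {
  local dir="${1:-.}"
  cat > "$dir/.dockerignore" <<'EOF'
node_modules
.git
.github
chart
rbac
EOF
}
```

Ignoring node_modules also means a stale local install can never overwrite the modules that npm ci produced inside the image.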

6) Helm chart structure & values: what’s going on and why

Helm charts are folders with a specific layout; defaults live in values.yaml, and templates live under templates/. You can override defaults per environment with --values path.yaml or inline --set key=value. (Helm)

chart/Chart.yaml

apiVersion: v2

name: app

description: Minimal demo app

type: application

version: 0.1.0 # chart version

appVersion: "0.1.0" # your app version (for humans; not enforced)

chart/values.yaml (defaults you’ll override in CI)

image:
  repository: ghcr.io/your-org/your-repo/app
  tag: "CHANGE_ME"   # CI will set this to the commit SHA
  pullPolicy: IfNotPresent

service:
  type: ClusterIP
  port: 80
  targetPort: 3000

replicaCount: 2

resources:
  requests: { cpu: 100m, memory: 128Mi }
  limits: { cpu: 500m, memory: 256Mi }

# optional if using private images
# imagePullSecrets:
#   - name: ghcr-pull

chart/templates/deployment.yaml (probes + rolling update)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "app.fullname" . }}
  labels:
    app.kubernetes.io/name: {{ include "app.name" . }}
spec:
  replicas: {{ .Values.replicaCount }}
  strategy: { type: RollingUpdate }
  selector:
    matchLabels:
      app.kubernetes.io/name: {{ include "app.name" . }}
  template:
    metadata:
      labels:
        app.kubernetes.io/name: {{ include "app.name" . }}
    spec:
      {{- if .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- range .Values.imagePullSecrets }}
        - name: {{ .name }}
        {{- end }}
      {{- end }}
      containers:
        - name: app
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - containerPort: {{ .Values.service.targetPort }}
          # wire the requests/limits from values.yaml into the container
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
          readinessProbe:
            httpGet: { path: /healthz, port: {{ .Values.service.targetPort }} }
            initialDelaySeconds: 5
            periodSeconds: 5
          livenessProbe:
            httpGet: { path: /livez, port: {{ .Values.service.targetPort }} }
            initialDelaySeconds: 15
            periodSeconds: 10

chart/templates/service.yaml

apiVersion: v1
kind: Service
metadata:
  name: {{ include "app.fullname" . }}
spec:
  type: {{ .Values.service.type }}
  selector:
    app.kubernetes.io/name: {{ include "app.name" . }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: {{ .Values.service.targetPort }}

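The templates above call the named templates app.name and app.fullname, so the chart needs a templates/_helpers.tpl that defines them (helm create generates one for you). That file is also the place to standardize names and labels. Helm’s best-practices guide covers how to structure values and helpers cleanly. (Helm)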
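The Deployment and Service in this section reference app.name and app.fullname, so they only render if those named templates exist. Here is a minimal sketch of a helper file, written from the shell so it can live in a setup script. The function name is hypothetical, and making app.fullname simply the release name is my simplification (charts generated by helm create use a longer version with override values):

```shell
# Hypothetical helper: create <chart>/templates/_helpers.tpl with the two
# named templates that the Deployment and Service reference.
write_helpers() {
  local chart_dir="${1:-chart}"
  mkdir -p "$chart_dir/templates"
  cat > "$chart_dir/templates/_helpers.tpl" <<'EOF'
{{/* Chart name, truncated to the 63-character limit for label values. */}}
{{- define "app.name" -}}
{{- .Chart.Name | trunc 63 | trimSuffix "-" -}}
{{- end -}}

{{/* Resource name: the release name, so release "app" yields deploy/app. */}}
{{- define "app.fullname" -}}
{{- .Release.Name | trunc 63 | trimSuffix "-" -}}
{{- end -}}
EOF
}
```

With the release name app (as in helm upgrade --install app ./chart), both the Deployment and the Service end up named app, which is what kubectl rollout status deploy/app expects.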

7) CI: GitHub Actions to build, tag, and push (with caching)

We’ll use Docker’s official Actions: setup-buildx, login, and build-push. We tag the image with the commit SHA so every deploy is traceable and immutable. Enable packages: write for GITHUB_TOKEN. (GitHub, GitHub Docs)

.github/workflows/ci.yml

name: ci

on:
  push:
    branches: [ "main" ]

permissions:
  contents: read
  packages: write   # allow pushing to GHCR (least privilege)

jobs:
  build-and-push:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      # optional: speed up local tests
      - uses: actions/setup-node@v4
        with:
          node-version: '20'
          cache: 'npm'
      - run: npm ci
      - run: npm test

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Log in to GHCR
        uses: docker/login-action@v3
        with:
          registry: ghcr.io
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Build and push image
        uses: docker/build-push-action@v6
        with:
          context: .
          push: true
          tags: ghcr.io/${{ github.repository }}/app:${{ github.sha }}
          cache-from: type=gha
          cache-to: type=gha,mode=max

A few gotchas here:

Workflow permissions must allow writing packages, or GHCR will reject pushes. You can set defaults in repo settings and still restrict per workflow/job. (GitHub Docs)

Labeling images with org.opencontainers.image.source helps GHCR connect the package back to the repo UI. (GitHub Docs)

Buildx is the recommended way to build images in Actions, with caching and multi-arch support documented in Docker’s CI guide. (Docker Documentation)

Why SHA tags instead of :latest? Kubernetes treats image references literally. Mutable :latest tags make it harder to know what’s running and can trip pull-policy defaults. Use an immutable tag (SHA) or even a digest (image@sha256:...) for perfect reproducibility—Kubernetes supports digests natively. (Kubernetes)
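One more gotcha with SHA-tagged names: image references must be lowercase, while github.repository keeps whatever casing the org and repo use. A small sketch that builds the full reference, assuming the /app suffix used throughout this guide (the function name is hypothetical):

```shell
# Hypothetical helper: build the GHCR image reference for a commit,
# lowercasing the owner/repo part because image names must be lowercase.
ghcr_image() {
  local repository="$1" sha="$2" repo_lower
  repo_lower=$(printf '%s' "$repository" | tr '[:upper:]' '[:lower:]')
  printf 'ghcr.io/%s/app:%s\n' "$repo_lower" "$sha"
}
```

In a workflow step, ghcr_image "$GITHUB_REPOSITORY" "$GITHUB_SHA" yields a value that can be handed to the build step and to Helm, so both always agree on the name.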

8) CD: Helm upgrade, safe timeouts, and rollout checks

Deploy right after a successful push. helm upgrade --install handles both first-time and repeat deploys. Add --wait (block until ready), --timeout (don’t wait forever), and --atomic (automatic rollback on failure). Then, as an extra safety net, run kubectl rollout status to stream progress into the job log. (Helm, Kubernetes)

  # add this job under "jobs:" in .github/workflows/ci.yml
  deploy:
    needs: build-and-push
    runs-on: ubuntu-latest
    environment: production
    steps:
      - uses: actions/checkout@v4

      - name: Install kubectl (official)
        run: |
          curl -LO "https://dl.k8s.io/release/$(curl -Ls https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
          chmod +x kubectl && sudo mv kubectl /usr/local/bin/

      - name: Install Helm
        run: curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

      - name: Configure kubeconfig
        # block-style env: a "${{ ... }}" expression inside a { ... } flow mapping is not valid YAML
        env:
          KUBECONFIG_DATA: ${{ secrets.KUBECONFIG_DATA }}
        run: |
          mkdir -p $HOME/.kube
          echo "$KUBECONFIG_DATA" | base64 -d > $HOME/.kube/config
          chmod 600 $HOME/.kube/config

      - name: Helm upgrade (create or update)
        run: |
          helm upgrade --install app ./chart \
            --namespace prod --create-namespace \
            --set image.tag=${{ github.sha }} \
            --wait --timeout=10m --atomic

      - name: Rollout status (extra verification)
        run: kubectl rollout status deploy/app -n prod --timeout=180s

Why both --wait and kubectl rollout status? Helm’s --wait bubbles up a release-level success/fail; kubectl rollout status prints per-step progress and gives fast, readable feedback when something’s off. Helm’s --atomic turns failures into an automatic rollback—useful when you’d rather be safe than “half updated”. (Helm, Kubernetes)
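Before the upgrade touches anything, it can help to fail fast on chart mistakes. This is a minimal sketch, assuming the chart lives in ./chart and the release is called app as above. The function name is hypothetical, while helm lint, helm template, and kubectl apply --dry-run=client are standard commands:

```shell
# Hypothetical pre-flight: lint the chart, render it with the image tag that
# CI is about to deploy, and let kubectl check the rendered manifests with a
# client-side dry run, which does not change anything in the cluster.
preflight_chart() {
  local chart="$1" tag="$2" namespace="${3:-prod}"
  helm lint "$chart" --set image.tag="$tag" || return 1
  helm template app "$chart" --namespace "$namespace" --set image.tag="$tag" \
    | kubectl apply --dry-run=client -n "$namespace" -f - || return 1
}
```

Calling preflight_chart ./chart "$GITHUB_SHA" prod in a step ahead of the Helm upgrade means template and schema errors show up in seconds instead of after a 10-minute timeout.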

9) Health probes that prevent surprise downtime

You can have a perfect rolling update and still serve errors if the app accepts traffic before it’s warmed up. That’s what readiness probes prevent: they gate Service endpoints until your pod says “ready.” Liveness probes are your safety rope if the app wedges itself; the kubelet restarts the container after repeated failures. The official docs cover these semantics and when to use startup probes for slower apps. (Kubernetes)

Practical tuning guidance:

If your app needs 20s to warm up, set initialDelaySeconds on readiness accordingly.

If your app occasionally blocks (e.g., deadlock), prefer an HTTP or TCP liveness probe that hits a trivial endpoint or port.

If your app is slow to start, add a startup probe to delay liveness until boot completes. (Kubernetes)
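The arithmetic behind a startup probe is simple: the container gets roughly failureThreshold × periodSeconds to come up before the kubelet gives up and restarts it. Here is a small sketch for sizing that budget (the function names are hypothetical; failureThreshold and periodSeconds are the real probe fields):

```shell
# Hypothetical helper: seconds a startup probe allows before the container
# is restarted, given failureThreshold and periodSeconds.
startup_budget() {
  local failure_threshold="$1" period_seconds="$2"
  echo $(( failure_threshold * period_seconds ))
}

# Smallest failureThreshold that covers a given warm-up time (rounds up).
threshold_for() {
  local warmup_seconds="$1" period_seconds="$2"
  echo $(( (warmup_seconds + period_seconds - 1) / period_seconds ))
}
```

For example, startup_budget 30 10 prints 300 (five minutes), and threshold_for 20 5 prints 4, so an app that needs 20s to warm up just fits with failureThreshold: 4 and periodSeconds: 5. Add headroom in practice.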

10) Pitfalls I see weekly (and how to avoid them)

10.1 Shipping :latest

What goes wrong: The same tag points to different bits over time; pull cache and policy quirks make it unclear whether a node actually pulled the new image.

The fix: Tag images by commit SHA or use digests (@sha256:...). Kubernetes supports digest references; it will always fetch the exact image. The official image docs explain how Kubernetes resolves image references, tags, and digests. (Kubernetes)

10.2 Not waiting for rollouts

What goes wrong: Your pipeline finishes “green,” but users see errors for 30–60 seconds because the Deployment hasn’t converged yet.

The fix: Add --wait --timeout (and optionally --atomic) to Helm, and run kubectl rollout status so failures show up clearly in logs. (Helm, Kubernetes)

10.3 Over-privileged CI (cluster-admin)

What goes wrong: A leaked token equals full cluster compromise.

The fix: Create a ServiceAccount + Role + RoleBinding scoped to the target namespace, with only the verbs and resources your chart needs. The RBAC docs call this model out explicitly. (Kubernetes)

11) Minimal RBAC for CI (copy/paste)

# rbac/deployer.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: helm-deployer
  namespace: prod
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: helm-deployer
  namespace: prod
rules:
  - apiGroups: ["apps"]
    resources: ["deployments","replicasets"]
    verbs: ["get","list","watch","create","update","patch"]
  - apiGroups: [""]
    resources: ["services","configmaps","secrets"]
    verbs: ["get","list","watch","create","update","patch"]
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get","list","watch","create","update","patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: helm-deployer
  namespace: prod
subjects:
  - kind: ServiceAccount
    name: helm-deployer
    namespace: prod
roleRef:
  kind: Role
  name: helm-deployer
  apiGroup: rbac.authorization.k8s.io

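This follows the least privilege principle: the SA can only touch the namespace and resource types your chart manages. If a token leaks, the damage is limited to those verbs/resources in prod. Two caveats: --create-namespace in the deploy step needs access to the cluster-scoped namespaces resource, which this Role deliberately does not grant, so create the prod namespace once with an admin account; and Helm may need the delete verb (for example to prune old release Secrets or to clean up during an --atomic rollback), so add it if upgrades fail with a forbidden error. RBAC is the supported authorization model in Kubernetes. (Kubernetes)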
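After applying the manifest, it is worth confirming that the ServiceAccount can do what the chart needs and nothing more. The sketch below uses kubectl auth can-i with impersonation, so run it with an admin kubeconfig. The function name and the choice of verbs and resources to sample are my assumptions:

```shell
# Hypothetical check: confirm the deployer ServiceAccount has the access the
# chart needs in its namespace. Prints each denied verb/resource pair and
# returns non-zero if any is denied.
check_deployer_access() {
  local namespace="${1:-prod}" sa="${2:-helm-deployer}"
  local subject="system:serviceaccount:${namespace}:${sa}"
  local failed=0 verb resource
  for resource in deployments.apps services secrets; do
    for verb in get create patch; do
      if ! kubectl auth can-i "$verb" "$resource" \
           --as="$subject" -n "$namespace" >/dev/null; then
        echo "denied: $verb $resource"
        failed=1
      fi
    done
  done
  return "$failed"
}
```

A useful negative check is kubectl auth can-i create deployments --as=system:serviceaccount:prod:helm-deployer -n default, which should answer no.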

12) Private registries & imagePullSecrets (GHCR)

Public GHCR packages: anyone can pull anonymously; no secret required.

Private GHCR packages: create a Docker registry secret and reference it in your pod spec (or attach it to your ServiceAccount). Kubernetes documents both the Secret type and the imagePullSecrets mechanism. (Kubernetes)

Create a pull secret and use it in prod:

kubectl create secret docker-registry ghcr-pull \
  --docker-server=ghcr.io \
  --docker-username=YOUR_GITHUB_USERNAME \
  --docker-password=YOUR_GHCR_TOKEN_OR_PAT \
  --docker-email=you@example.com \
  -n prod

Then in values.yaml for prod:

imagePullSecrets:

- name: ghcr-pull

Official docs show the same flow for private registries; GHCR uses standard Docker auth. (Kubernetes)
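The ServiceAccount route mentioned above avoids touching the chart at all. Here is a minimal sketch, assuming the ghcr-pull secret already exists in the namespace (the function name is hypothetical; kubectl patch serviceaccount is the standard command):

```shell
# Hypothetical helper: attach an existing pull secret to a ServiceAccount so
# pods that use the account pull private images without per-pod settings.
attach_pull_secret() {
  local namespace="$1" secret="$2" sa="${3:-default}"
  kubectl patch serviceaccount "$sa" -n "$namespace" \
    -p "{\"imagePullSecrets\": [{\"name\": \"${secret}\"}]}"
}
```

For instance, attach_pull_secret prod ghcr-pull patches the default ServiceAccount in prod. Only pods created afterwards pick up the secret, so restart existing workloads if they were failing to pull.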

13) Rollbacks, who does what, and a quick “am I healthy?”

Two layers can help you:

Helm release history → helm history / helm rollback.

Deployment history → kubectl rollout undo / kubectl rollout status.

Typical commands:

# See release history managed by Helm
helm history app -n prod

# Roll back to the previous release (no revision given = previous;
# pass a revision number from `helm history` to target a specific one)
helm rollback app -n prod

# Verify the Deployment converges
kubectl rollout status deploy/app -n prod --timeout=180s

Helm’s --atomic already auto-rolls back on failures during the upgrade. kubectl rollout status is still useful for transparent logs during manual ops or in CI. (Helm, Kubernetes)

14) A reusable repo layout & a secrets checklist

repo-root/
  app/                      # your code
  Dockerfile
  chart/
    Chart.yaml
    values.yaml
    values-staging.yaml
    values-prod.yaml
    templates/
      deployment.yaml
      service.yaml
      _helpers.tpl
  rbac/
    deployer.yaml
  .github/workflows/ci.yml

Secrets (GitHub → Settings → Secrets and variables → Actions):

KUBECONFIG_DATA – base64 kubeconfig for the helm-deployer ServiceAccount.

GITHUB_TOKEN – auto-injected; set workflow permissions: packages: write to push to GHCR (use repo/org defaults or YAML). (GitHub Docs)
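The deploy job decodes KUBECONFIG_DATA with base64 -d, so the secret should hold the kubeconfig as a single base64 line. Here is a minimal sketch of producing that value, assuming you already have a kubeconfig file for the deployer account (the function name and file path are hypothetical):

```shell
# Hypothetical helper: print a kubeconfig as single-line base64, the form
# the deploy job decodes with `base64 -d`.
encode_kubeconfig() {
  local path="$1"
  base64 < "$path" | tr -d '\n'
}
```

If the GitHub CLI is installed and authenticated, encode_kubeconfig ./deployer.kubeconfig | gh secret set KUBECONFIG_DATA stores it directly; pasting the output into the repository settings works just as well.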

15) Optional: the same loop with Kustomize

Prefer overlays without templating? Kustomize is built into kubectl. Your pipeline becomes: build → push → kubectl apply -k overlays/prod → kubectl rollout status. It’s great for single-service repos and small customizations; Helm tends to be handier when you need packagability or your client already uses charts. (Kubernetes)

Quick sketch:

kustomize/
  base/
    deployment.yaml
    service.yaml
    kustomization.yaml
  overlays/
    prod/
      kustomization.yaml    # patches image tag to $GIT_SHA
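The prod overlay in that sketch can be generated per deploy. Below is a minimal, hypothetical helper that writes it with the images transformer. It assumes the image name passed in matches the one used in base/deployment.yaml, and the function name is mine:

```shell
# Hypothetical helper: write overlays/prod/kustomization.yaml that reuses the
# base and pins the image tag to the commit SHA being deployed.
write_prod_overlay() {
  local root="$1" image="$2" sha="$3"
  mkdir -p "$root/overlays/prod"
  cat > "$root/overlays/prod/kustomization.yaml" <<EOF
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: prod
resources:
  - ../../base
images:
  - name: ${image}
    newTag: "${sha}"
EOF
}
```

In CI that could look like write_prod_overlay kustomize ghcr.io/your-org/your-repo/app "$GITHUB_SHA" followed by kubectl apply -k kustomize/overlays/prod. The tag is quoted so an all-digit SHA is still read as a string.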

16) Appendix: extra CI steps (tests, concurrency, smoke checks)

A tiny smoke check after deploy (port-forward for 1 request):

- name: Port-forward and smoke test
  run: |
    kubectl -n prod port-forward svc/app 8080:80 &
    PF_PID=$!
    sleep 3
    curl -fsS http://127.0.0.1:8080/healthz | grep -q "ok"
    kill $PF_PID
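A fixed sleep 3 can be too short on a busy runner. One option is a bounded retry loop that polls until the port-forward answers. This is a sketch under the same assumptions as the step above (local port 8080, /healthz endpoint), and the function name is hypothetical:

```shell
# Hypothetical helper: poll a URL until curl gets a non-error response or
# the attempts run out. Returns 0 on success, 1 if it never answered.
wait_for_ok() {
  local url="$1" attempts="${2:-10}" delay="${3:-1}" i=0
  while [ "$i" -lt "$attempts" ]; do
    if curl -fsS -o /dev/null "$url"; then
      return 0
    fi
    i=$(( i + 1 ))
    sleep "$delay"
  done
  return 1
}
```

In the step, wait_for_ok http://127.0.0.1:8080/healthz 15 1 would take the place of the sleep, giving the tunnel up to roughly 15 seconds before the job fails.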

Digest pinning (even stricter than tags):

# Check the pushed image's digest
docker buildx imagetools inspect ghcr.io/${OWNER}/${REPO}/app:${GITHUB_SHA}

# Then set in values-prod.yaml:
# image:
#   repository: ghcr.io/${OWNER}/${REPO}/app
#   tag: ""              # leave empty when using a digest
#   digest: "sha256:..."

If you want to template digest usage into Helm, adjust your image stanza and template:

# values.yaml (allow either tag OR digest)
image:
  repository: ghcr.io/your-org/your-repo/app
  tag: "CHANGE_ME"
  digest: ""

# templates/deployment.yaml (image reference)
image: "{{ .Values.image.repository }}{{- if .Values.image.digest -}}@{{ .Values.image.digest }}{{- else -}}:{{ .Values.image.tag }}{{- end }}"

Kubernetes understands @sha256: digests directly. It’s the most deterministic way to roll out exactly what you built. (Kubernetes)
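The same tag-or-digest choice is handy in scripts, for example when printing what a deploy is about to roll out. Here is a small sketch that mirrors the template logic (the function name is hypothetical):

```shell
# Hypothetical helper mirroring the template: prefer the digest when one is
# set, otherwise fall back to the tag.
image_ref() {
  local repository="$1" tag="$2" digest="${3:-}"
  if [ -n "$digest" ]; then
    printf '%s@%s\n' "$repository" "$digest"
  else
    printf '%s:%s\n' "$repository" "$tag"
  fi
}
```

With an empty digest the function returns repository:tag; as soon as a digest is supplied, the tag is ignored, just as in the Helm template.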

Multi-arch builds (if you need arm64): add platforms: linux/amd64,linux/arm64 to build-push-action and include QEMU if needed. Docker’s docs show both. (Docker Documentation)

17) References

Kubernetes

Deployments (rolling update behavior & concepts). (Kubernetes)

Rolling updates overview (tutorial). (Kubernetes)

kubectl rollout status (command reference). (Kubernetes)

Probes (liveness, readiness, startup). (Kubernetes)

Images: tags, digests, and imagePullSecrets. (Kubernetes)

RBAC authorization (official). (Kubernetes)

Install kubectl and version skew note. (Kubernetes)

Helm

helm upgrade and flags (--install, --wait, --atomic). (Helm)

Installing Helm (CI script). (Helm)

Chart structure & values reference and best practices. (Helm)

GitHub Actions & GHCR

Publishing Docker images with Actions. (GitHub Docs)

Using GITHUB_TOKEN with least-privilege permissions. (GitHub Docs)

Working with the Container registry (GHCR) and OCI labels. (GitHub Docs)

Docker official GitHub Actions: setup-buildx, login, build-push. (GitHub)

Docker’s CI guide for GitHub Actions. (Docker Documentation)

Docker

Multi-stage builds (why/how), best practices, multi-platform. (Docker Documentation)

Closing thoughts

This is the path I reach for when a client says: “we want reliable deploys this week, not a platform rebuild.” It scales from a single service to many, and every part of it is explainable to non-platform folks:

One chart, one workflow, one registry.

Immutable images (SHA tags or digests), probes that reflect real app health, and rollouts that wait.

Scoped deploy permissions, not cluster-admin.

Stay Connected

If you enjoyed this article and want to explore more about web development, feel free to connect with me.

Your feedback and questions are always welcome. Keep learning, coding, and creating amazing web applications.
