Building a Hyperconverged Infrastructure: A Journey with Ruijie Switches and Dell Servers

Aadarsha Dhakal

Hey everyone,

As an IT Infrastructure Engineering Intern, I've had the incredible opportunity to dive headfirst into the world of hyperconverged infrastructure (HCI). It's been a whirlwind of learning, problem-solving, and hands-on experience, and I couldn't be more excited to share my journey with you.

Let's start by breaking down the components of our setup. We're working with two Ruijie switches, specifically the RG-CS86-24XMG4XS4VS-UPD model. These bad boys pack quite a punch: an ARM processor clocked at 1.25 GHz, 1 GB of flash memory, and 1 GB of SDRAM. With ports ranging from 100 Mbps to 10 GbE, support for PoE/PoE+/PoE++, and modular power supply and fan slots, these switches are the backbone of our infrastructure.

But what good are switches without servers, right? That's where the Dell PowerEdge R6525 servers come into play. We've got three of these powerhouses ready to rock and roll. These 1U, dual-socket AMD EPYC servers bring the processing power, memory, and local storage, and they are the muscle behind our HCI setup: the same machines will run both our virtual machines and our storage cluster.

Now, let's talk about the heart and soul of our hyperconverged infrastructure: the software. We've chosen Proxmox VE as our hypervisor and Ceph for cluster storage. Proxmox VE gives us a robust virtualization platform that's easy to manage and supports high availability and scalability. With Ceph handling storage, data is replicated across the nodes of the cluster, which gives us redundancy, fault tolerance, and room to scale by adding disks or nodes.

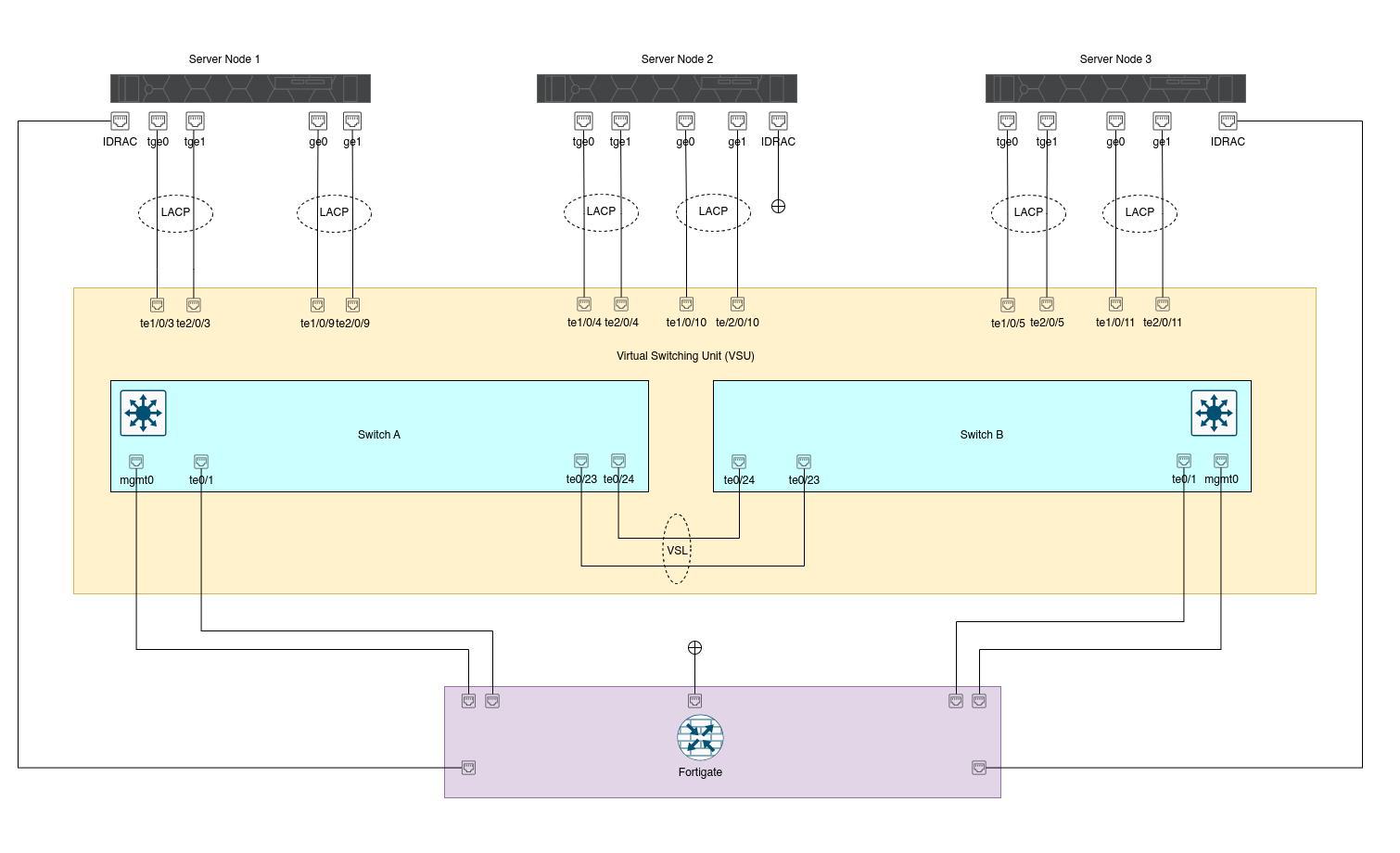

Here is a simple network diagram of what we're going to cover in the next few articles.

Let me walk you through the diagram. We'll mostly skip the Fortinet part for now, as it's not the focus of this series.

Here's the bigger picture of what we'll do:

We'll stack the two switches and configure link aggregation. We'll define AggregatePort 3, 4, and 5 for internal server communication and AggregatePort 9, 10, and 11 for server management. Aggregate ports 3, 4, and 5 will connect to the servers' 10-gigabit interfaces, while aggregate ports 9, 10, and 11 will connect to their 1-gigabit interfaces. We'll use interface 1 for the uplink and the switch's management port as its management interface.
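To make the port plan concrete, here is a minimal Python sketch that records it as data and checks it for obvious mistakes (duplicate IDs, a server missing one of its links). It assumes one aggregate port per server for each role, so AggregatePort 3 and 9 go to the first server, 4 and 10 to the second, and so on; that pairing, the hostnames `pve1` to `pve3`, and the helpers `build_plan`/`check_plan` are hypothetical and not part of any Ruijie tooling.

```python
from dataclasses import dataclass

# Hypothetical hostnames for the three R6525 servers.
SERVERS = ["pve1", "pve2", "pve3"]


@dataclass(frozen=True)
class AggregatePort:
    ap_id: int        # AggregatePort number on the stacked switch
    role: str         # "internal" or "management"
    speed_gbps: int   # speed of the server NICs at the other end
    server: str       # server this aggregate port connects to (assumed pairing)


def build_plan() -> list[AggregatePort]:
    """Assumption: one aggregate port per server per role, in order."""
    plan = []
    for server, ap in zip(SERVERS, (3, 4, 5)):
        plan.append(AggregatePort(ap, "internal", 10, server))
    for server, ap in zip(SERVERS, (9, 10, 11)):
        plan.append(AggregatePort(ap, "management", 1, server))
    return plan


def check_plan(plan: list[AggregatePort]) -> None:
    """Raise ValueError if IDs repeat or a server lacks either role."""
    ids = [ap.ap_id for ap in plan]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate aggregate port id")
    for server in {ap.server for ap in plan}:
        roles = sorted(ap.role for ap in plan if ap.server == server)
        if roles != ["internal", "management"]:
            raise ValueError(f"{server} needs one internal and one management link")


if __name__ == "__main__":
    plan = build_plan()
    check_plan(plan)
    for ap in plan:
        print(f"AggregatePort {ap.ap_id}: {ap.role} ({ap.speed_gbps} Gbps) -> {ap.server}")
```

Keeping the plan as data like this makes it easy to spot a cabling or numbering slip before touching the switch CLI.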

To manage the Dell servers remotely, we'll set up iDRAC (Integrated Dell Remote Access Controller), connecting each server's iDRAC port directly to the Fortinet.

On each server, we'll install Proxmox VE, an open-source, KVM-based hypervisor. We'll then join all three nodes into a cluster and, after that, set up Ceph for storage clustering.
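Why three nodes? A Proxmox VE cluster (through corosync) and Ceph's monitors both need a strict majority of votes to keep operating. The short Python sketch below works through that arithmetic, assuming one vote per node (the Proxmox default) and one Ceph monitor per node; the monitor layout is an assumption about how we'll deploy Ceph, and the helper names are illustrative.

```python
def quorum(votes: int) -> int:
    """Smallest strict majority of `votes`."""
    return votes // 2 + 1


def tolerated_failures(votes: int) -> int:
    """How many voters can fail while a majority still remains."""
    return votes - quorum(votes)


if __name__ == "__main__":
    for nodes in (2, 3, 4, 5):
        print(f"{nodes} nodes: quorum={quorum(nodes)}, can lose {tolerated_failures(nodes)}")
```

With three nodes, two votes form a majority, so the cluster survives losing one node. With two nodes, losing either one costs quorum, and a fourth node still only tolerates one failure.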

We'll also create two bonded interfaces on each server: one for internal server communication, made up of the two 10-gigabit interfaces, and one for management, made up of the two 1-gigabit interfaces.
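On Proxmox VE, bonds are defined in `/etc/network/interfaces` using ifupdown2 syntax. The Python sketch below renders the two bond stanzas as text. It assumes LACP (`bond-mode 802.3ad`), which only works if the matching aggregate ports on the switch also run LACP. The bond names `bond0`/`bond1` and the NIC names `enp65s0f0`, `enp65s0f1`, `eno1`, and `eno2` are placeholders; check the real names with `ip link` on each server.

```python
def bond_stanza(name: str, slaves: list[str], mode: str = "802.3ad") -> str:
    """Render one ifupdown2 bond definition as text."""
    lines = [
        f"auto {name}",
        f"iface {name} inet manual",
        f"    bond-slaves {' '.join(slaves)}",
        "    bond-miimon 100",            # check link state every 100 ms
        f"    bond-mode {mode}",
    ]
    if mode == "802.3ad":
        # Hash policy decides which member link carries each flow under LACP.
        lines.append("    bond-xmit-hash-policy layer2+3")
    return "\n".join(lines) + "\n"


# Hypothetical NIC names; replace with the ones `ip link` reports.
BONDS = {
    "bond0": ["enp65s0f0", "enp65s0f1"],  # 2 x 10 GbE, internal communication
    "bond1": ["eno1", "eno2"],            # 2 x 1 GbE, management
}

if __name__ == "__main__":
    print("\n".join(bond_stanza(name, slaves) for name, slaves in BONDS.items()))
```

In practice the management bond usually ends up as a port of a Linux bridge (such as `vmbr0`) that carries the node's management IP; this sketch only covers the bonds themselves.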

Additionally, we'll set up VLAN 5 for Ceph and VLAN 4 for VM migration.
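VLAN sub-interfaces can sit on top of a bond using the `bond0.5` naming convention in `/etc/network/interfaces`. This Python sketch renders them, assuming both VLANs ride on the 10-gigabit internal bond (`bond0`). The subnets `10.0.5.0/24` (Ceph) and `10.0.4.0/24` (migration) and the `.11`/`.12`/`.13` host numbering are made up for illustration; substitute your own addressing.

```python
import ipaddress

# VLAN IDs and purposes from the plan.
VLANS = {5: "ceph", 4: "migration"}

# Hypothetical addressing, one subnet per VLAN.
SUBNETS = {
    5: ipaddress.ip_network("10.0.5.0/24"),
    4: ipaddress.ip_network("10.0.4.0/24"),
}


def vlan_stanza(parent: str, vlan_id: int, node_index: int) -> str:
    """Render a static VLAN sub-interface for node 1, 2, or 3."""
    net = SUBNETS[vlan_id]
    # Hypothetical scheme: node N gets host address .(10 + N).
    addr = net.network_address + 10 + node_index
    return (
        f"# {VLANS[vlan_id]} network (VLAN {vlan_id})\n"
        f"auto {parent}.{vlan_id}\n"
        f"iface {parent}.{vlan_id} inet static\n"
        f"    address {addr}/{net.prefixlen}\n"
    )


if __name__ == "__main__":
    for node in (1, 2, 3):
        print(f"--- node {node} ---")
        for vlan_id in VLANS:
            print(vlan_stanza("bond0", vlan_id, node))
```

Each node gets one stanza per VLAN; for node 1 the Ceph entry comes out as `address 10.0.5.11/24`. The switch ports facing the servers must also carry VLANs 4 and 5 tagged for this to work.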

Are you excited?

As we embark on this journey, I'll be sharing my insights, challenges, and triumphs with you all. From configuring our switches and servers to setting up our hypervisor and storage cluster, there's a lot to cover. But fear not, I'll do my best to explain the technical jargon along the way without overwhelming you.

So buckle up, folks! We're about to dive deep into the world of hyperconverged infrastructure, and I can't wait to take you along for the ride.
