Proxmox Virtual Environment Storage-Part 06

Rony Hanna

In this lesson, we will learn about and understand Proxmox VE storage. For the upcoming lessons, we will use "QuantaStor Software Defined Storage" as a SAN/NAS (Storage Area Network/Network Attached Storage) VSA (Virtual Storage Appliance) to demonstrate and simulate Proxmox storage types, live migration of virtual machines between nodes in a cluster, downtime reduction, and high availability.

Introduction

Proxmox Virtual Environment (Proxmox VE) offers a robust storage model designed to cater to various storage requirements within virtualized environments. This model encompasses different storage types, storage management features, and integration options. Additionally, Proxmox VE provides a Storage Library package, also known as libpve-storage-perl, which extends storage management capabilities by offering additional functionalities and tools.

Proxmox VE Administration Guide

Proxmox VE Storage

Storage Types: Proxmox VE supports diverse storage types, including local storage (e.g., local disks), network-based storage (e.g., NFS, iSCSI), distributed storage (e.g., GlusterFS, Ceph), and more. Each storage type offers distinct advantages, such as performance, scalability, and redundancy, catering to different use cases and requirements.

Storage Pools: Storage pools serve as logical containers within Proxmox VE for organizing and managing storage resources. Administrators can create storage pools comprising one or more physical storage devices or storage arrays. These pools provide a centralized location for storing virtual machine images, templates, ISO files, and other data, facilitating efficient storage management.

Storage Plugins: Proxmox VE features various storage plugins, enabling integration with third-party storage solutions and technologies. These plugins extend Proxmox VE’s capabilities by offering additional features like backup and replication, snapshot management, and advanced storage functionalities. They enhance flexibility and enable seamless integration with existing storage infrastructures.

Storage Library Package (libpve-storage-perl):

The Storage Library, represented by the libpve-storage-perl package in Proxmox VE, implements the storage plugins and the pvesm command-line tool, and provides the storage side of the following features:

Backup and Restore: The library manages the storages that backups are written to and restored from, including the VZDump backup file content type and the Proxmox Backup Server plugin. Administrators can schedule backup jobs, create full backups (or incremental, deduplicated backups when using Proxmox Backup Server), and restore virtual machines from backup files, ensuring data protection and disaster recovery readiness.

Snapshot Management: This package offers functionalities for managing snapshots of virtual machine disks. Administrators can create, manage, and revert to snapshots of virtual machine disks, allowing for efficient backup strategies and providing a mechanism for capturing and restoring virtual machine states at specific points in time.

Storage Migration: libpve-storage-perl provides tools for migrating storage resources between different storage types or storage pools seamlessly. Administrators can transfer virtual machine disks, templates, and other data between storage devices or storage pools without disrupting virtual machine operations, enabling storage optimization and resource utilization.

Monitoring and Reporting: The library reports the status and usage (used and available space) of each configured storage, which Proxmox VE shows in the web interface and through pvesm status. This helps administrators track capacity and spot storage-related issues early, facilitating proactive management and optimization of storage resources.
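To make the idea of creating and reverting snapshots concrete, here is a toy copy-on-write model in Python. It is an illustration only, assuming a disk made of numbered blocks; the storage library does not work this way internally, since it delegates snapshots to the underlying storage (for example qcow2, LVM-thin, ZFS, or Ceph/RBD). CowDisk and its methods are hypothetical names.

```python
"""Toy copy-on-write disk: a snapshot freezes the current data, new writes go to a fresh layer."""


class CowDisk:
    """Hypothetical block device built from stacked layers (block -> bytes)."""

    def __init__(self):
        self.layers = [{}]    # layers[-1] is the only writable layer
        self.snapshots = {}   # snapshot name -> number of layers it froze

    def write(self, block, data):
        # Frozen layers are never modified; a write only touches the top layer.
        self.layers[-1][block] = data

    def read(self, block):
        # The newest layer that contains the block wins.
        for layer in reversed(self.layers):
            if block in layer:
                return layer[block]
        return b"\x00"  # never-written blocks read as zero

    def snapshot(self, name):
        self.snapshots[name] = len(self.layers)  # freeze everything written so far
        self.layers.append({})                   # later writes land here

    def rollback(self, name):
        keep = self.snapshots[name]
        del self.layers[keep:]   # discard all writes made after the snapshot
        self.layers.append({})   # fresh writable layer on top
        # Snapshots taken after this one no longer have a valid history.
        self.snapshots = {n: k for n, k in self.snapshots.items() if k <= keep}


if __name__ == "__main__":
    disk = CowDisk()
    disk.write(0, b"v1")
    disk.snapshot("before-upgrade")
    disk.write(0, b"v2")
    print(disk.read(0))   # b'v2'
    disk.rollback("before-upgrade")
    print(disk.read(0))   # b'v1'
```

Because writes after a snapshot land in a new layer, reverting is just discarding the newer layers, which is why copy-on-write snapshots are cheap to create and revert.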

Storage Types

File-level storage

File-level storage technologies allow access to a fully featured (POSIX) file system. They are, in general, more flexible than any block-level storage (see below) and allow you to store content of any type. ZFS is probably the most advanced system, and it has full support for snapshots and clones.

Block-level storage

Block-level storage allows you to store large raw images. It is usually not possible to store other files (ISO, backups, etc.) on such storage types. Most modern block-level storage implementations support snapshots and clones. RADOS and GlusterFS are distributed systems, replicating storage data to different nodes.

ZFS (Zettabyte File System):

ZFS is a powerful file system and volume manager known for its data integrity features, snapshots, and RAID-like capabilities.

It’s suitable for local storage on Proxmox VE hosts or as networked storage over iSCSI.

Directory:

Directory storage involves using a directory on the local filesystem or network for storing virtual machine data.

It’s a simple storage type that provides no redundancy of its own, and snapshots are only possible when disks use the qcow2 format.

Btrfs (B-tree File System):

Btrfs is a modern file system with features such as snapshots, copy-on-write, and checksumming.

It’s used for local storage on Proxmox VE hosts, offering advanced data protection and management capabilities.

NFS (Network File System):

NFS allows remote storage to be accessed over a network, providing shared access to files and directories.

It’s commonly used for centralized storage management and sharing among multiple servers.

CIFS (Common Internet File System):

CIFS is a file-sharing protocol used for accessing files and resources over a network.

It’s often used with Windows-based systems and supports features like file sharing, authentication, and access control.

Proxmox Backup:

- Proxmox Backup is a dedicated backup solution for Proxmox VE environments, allowing for the backup and restore of virtual machines, containers, and other data.

iSCSI (Internet Small Computer System Interface):

- iSCSI provides block-level storage access over TCP/IP networks, allowing Proxmox VE hosts to connect to remote storage devices and access storage volumes as if they were locally attached.

GlusterFS:

GlusterFS is a distributed file system that enables the creation of scalable and highly available storage clusters.

It’s suitable for large-scale deployments, offering features like replication, striping, and automatic self-healing.

CephFS:

CephFS is a distributed file system integrated with the Ceph storage platform, providing scalable and reliable storage for virtualized environments.

It supports features like snapshots, data replication, and distributed metadata management.

LVM (Logical Volume Manager):

LVM allows for the management of logical volumes on Linux systems, providing features like volume resizing, snapshots, and RAID-like functionality.

It’s commonly used for local storage management and can be combined with other storage types for added flexibility.

LVM-thin:

- LVM-thin is an extension of LVM that offers thin provisioning capabilities, allowing for more efficient use of storage space by allocating storage on-demand rather than pre-allocating it upfront.

Ceph/RBD (RADOS Block Device):

- Ceph/RBD provides block-level storage access within the Ceph storage platform, offering features like data replication, thin provisioning, and snapshot capabilities.

ZFS over iSCSI:

ZFS volumes can be exported over iSCSI, enabling remote servers to access ZFS storage as block devices.

This setup provides features like data integrity checks, snapshots, and compression to remote servers via the iSCSI protocol.

Each storage type has its own set of features and use cases, allowing administrators to choose the most appropriate solution based on their requirements for performance, scalability, data protection, and management capabilities.

Proxmox Shared Storage

Proxmox VE’s shared storage model allows multiple Proxmox VE nodes to access centralized storage resources, enabling features like live migration, high availability, and data redundancy. The model includes:

Shared Storage Types

Network File System (NFS):

Description: NFS allows sharing files over a network. It enables multiple Proxmox VE hosts to access shared storage resources, providing centralized storage management and sharing.

Capabilities: NFS offers simple setup and configuration, making it suitable for environments where ease of use and flexibility are priorities. However, NFS itself provides no data redundancy (that depends on the NFS server), and snapshots of virtual machine disks require the qcow2 format.

Use Cases: NFS is commonly used for centralized storage management, sharing ISO images, and storing virtual machine disk images and templates.

CIFS (Common Internet File System):

Description: CIFS is a file-sharing protocol used for accessing files and resources over a network, primarily associated with Windows-based systems.

Capabilities: CIFS enables Proxmox VE hosts to access shared storage resources hosted on Windows servers or NAS devices. It supports features like file sharing, authentication, and access control.

Use Cases: CIFS is often used in environments where Windows-based storage resources are prevalent, allowing seamless integration with existing infrastructure.

iSCSI (Internet Small Computer System Interface):

Description: iSCSI provides block-level storage access over TCP/IP networks, allowing Proxmox VE hosts to mount remote storage volumes as if they were locally attached.

Capabilities: iSCSI offers data integrity checks and error recovery mechanisms. Advanced functionalities like snapshots and thin provisioning depend on the storage target or on the layer used on top of it (for example, ZFS over iSCSI).

Use Cases: iSCSI is commonly used for centralized storage management, virtual machine disk storage, and storage consolidation in data center environments. Suitable for medium- to large-scale deployments, offering high performance and scalability.

GlusterFS

Description: GlusterFS is a distributed file system that enables the creation of scalable and highly available storage clusters.

Capabilities: GlusterFS provides features like replication, striping, and automatic self-healing, making it suitable for large-scale deployments requiring resilience and fault tolerance.

Use Cases: GlusterFS is commonly used for scalable and highly available storage solutions, such as shared storage for virtualized environments and file sharing among multiple servers.

CephFS

Description: CephFS is a distributed file system integrated with the Ceph storage platform, providing scalable and reliable storage for virtualized environments.

Capabilities: CephFS supports data replication, data scrubbing, and distributed metadata management, ensuring data integrity and availability. Ceph also provides automatic load balancing and self-healing.

Ceph itself is a distributed storage platform offering scalable object, block, and file storage.

Use Cases: CephFS is suitable for environments requiring scalable and reliable storage solutions, such as cloud storage, virtualization, and big data analytics.
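Replication is what gives GlusterFS and Ceph their fault tolerance, but every extra copy consumes raw capacity. A back-of-the-envelope sketch, assuming plain replicated volumes or pools (no erasure coding), one copy per node, and ignoring metadata overhead and the free headroom a healthy cluster needs. The helper name and the example cluster are hypothetical; 3 replicas is a common choice (the default size of a Ceph pool), not a figure from this lesson.

```python
"""Rough usable capacity of a replicated storage cluster."""


def usable_capacity_tb(nodes, disks_per_node, disk_tb, replicas):
    """Raw capacity divided by the number of full copies kept of each object."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    if replicas > nodes:
        # With one copy per node, more replicas than nodes cannot be placed.
        raise ValueError("need at least as many nodes as replicas")
    raw_tb = nodes * disks_per_node * disk_tb
    return raw_tb / replicas


if __name__ == "__main__":
    # Hypothetical cluster: 3 nodes, 4 x 2 TB disks each, 3 replicas.
    print(usable_capacity_tb(3, 4, 2.0, 3))  # 8.0
```

In this example, 24 TB of raw disk yields about 8 TB of usable space: two thirds of the raw capacity is traded for redundancy.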

File System Functionality

Local Storage: Typically uses the ext4 file system.

Shared Storage: Each shared storage type may have its own preferred file system, such as XFS for GlusterFS or CephFS for Ceph. However, some shared storage types can work with multiple file systems, providing flexibility based on requirements.

File-level storage: ZFS, Directory, BTRFS, NFS, CIFS, Proxmox Backup, GlusterFS, CephFS.

Block-level storage: ZFS, Proxmox Backup, LVM, LVM-thin, iSCSI, Ceph, ZFS over iSCSI.
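The two lists can be written down as sets to see at a glance which storage types work at both levels. A small sketch using exactly the names listed above; the levels helper is a hypothetical name.

```python
"""The file-level and block-level lists above, expressed as sets."""

FILE_LEVEL = {"ZFS", "Directory", "BTRFS", "NFS", "CIFS",
              "Proxmox Backup", "GlusterFS", "CephFS"}
BLOCK_LEVEL = {"ZFS", "Proxmox Backup", "LVM", "LVM-thin",
               "iSCSI", "Ceph", "ZFS over iSCSI"}


def levels(storage):
    """Return the levels a storage type appears at, e.g. ['block', 'file']."""
    found = []
    if storage in BLOCK_LEVEL:
        found.append("block")
    if storage in FILE_LEVEL:
        found.append("file")
    return found


if __name__ == "__main__":
    print(sorted(FILE_LEVEL & BLOCK_LEVEL))  # ['Proxmox Backup', 'ZFS']
    print(levels("LVM-thin"))                # ['block']
    print(levels("NFS"))                     # ['file']
```

Only ZFS and Proxmox Backup appear in both sets, which is why they are listed twice.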

On file-based storages, snapshots are possible with the qcow2 format.

It is possible to use LVM on top of iSCSI or FC-based storage. That way, we get shared LVM storage.

Image Format

The choice of a storage type will determine the format of the hard disk image. Storages which present block devices (LVM, ZFS, and Ceph) will require the raw disk image format, whereas file-based storages (Ext4, NFS, CIFS, and GlusterFS) will let you choose either the raw disk image format or the QEMU image format.

The QEMU image format (qcow2) is a copy-on-write format which allows snapshots and thin provisioning of the disk image.

The raw disk image is a bit-for-bit image of a hard disk, similar to what you would get when executing the dd command on a block device in Linux. This format does not support thin provisioning or snapshots by itself, requiring cooperation from the storage layer for these tasks. It may, however, be up to 10% faster than the QEMU image format.

The VMware image format (vmdk) only makes sense if you intend to import/export the disk image to other hypervisors.
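The format rules above can be summarised as a small lookup. A sketch, assuming the storage.cfg type names dir, nfs, cifs, and glusterfs for file-based storage and lvm, lvmthin, zfspool, and rbd for block storage. Plain (thick) LVM is treated as having no snapshot support here, so check the storage table in the Proxmox VE Administration Guide for your version. The function names are hypothetical.

```python
"""Disk image formats and snapshot support per storage type (simplified)."""

FILE_BASED = {"dir", "nfs", "cifs", "glusterfs"}
BLOCK_BASED = {"lvm", "lvmthin", "zfspool", "rbd"}
# Block storages whose own layer provides snapshots (thick LVM left out here).
BLOCK_SNAPSHOTS = {"lvmthin", "zfspool", "rbd"}


def allowed_formats(storage_type):
    """Image formats offered when creating a disk on this storage type."""
    if storage_type in FILE_BASED:
        return ["raw", "qcow2", "vmdk"]
    if storage_type in BLOCK_BASED:
        return ["raw"]  # block devices are used as raw images
    raise ValueError(f"unknown storage type: {storage_type}")


def supports_snapshots(storage_type, fmt):
    """True if a disk in format fmt on storage_type can be snapshotted."""
    if fmt not in allowed_formats(storage_type):
        raise ValueError(f"{fmt} is not available on {storage_type}")
    if storage_type in FILE_BASED:
        return fmt == "qcow2"  # on file storage, snapshots live inside the qcow2 file
    return storage_type in BLOCK_SNAPSHOTS


if __name__ == "__main__":
    for st, fmt in [("dir", "raw"), ("dir", "qcow2"), ("lvmthin", "raw"), ("lvm", "raw")]:
        print(st, fmt, supports_snapshots(st, fmt))
```

The split mirrors the text: on file-based storage the image format carries the snapshot feature, while on block storage it is the storage layer that does.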

Architecture

Storage Devices: Various storage solutions are connected to Proxmox VE nodes, providing centralized storage resources.

Proxmox VE Nodes: Physical servers running Proxmox VE, accessing shared storage resources.

Cluster Integration: Proxmox VE nodes are clustered together using technologies like Corosync, allowing for communication and coordination between nodes and ensuring high availability and reliability.

NFS: Utilizes dedicated NFS servers accessed by Proxmox VE nodes over the network.

iSCSI: Involves one or more iSCSI targets (storage servers) providing block-level storage to Proxmox VE nodes.

GlusterFS: Consists of multiple servers grouped together in a cluster, with Proxmox VE nodes connecting to the GlusterFS cluster.

Ceph: Employs a distributed architecture with multiple OSD nodes, monitors, and metadata servers, which Proxmox VE nodes access as clients.

Recommended Hardware and Software

Hardware Recommendations

For NFS and iSCSI: High-performance network infrastructure (1 Gbps or 10 Gbps Ethernet), storage servers with ample disk space and RAID configurations for redundancy.

For GlusterFS and Ceph: Robust server hardware with multiple disks for storage, fast networking infrastructure, and sufficient CPU and RAM resources to handle cluster operations.
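Network speed directly limits how quickly data moves between nodes and shared storage, for example when migrating a disk or copying a VM's memory during live migration. A rough estimate, assuming transfer time is simply the data size in bits divided by the usable link rate; the efficiency factor is a hypothetical knob for protocol overhead, not a measured value, and the 32 GiB disk is an example size.

```python
"""Back-of-the-envelope transfer time over a network link."""

GIB = 2**30  # bytes in one GiB


def transfer_seconds(size_gib, link_gbps, efficiency=1.0):
    """Seconds to move size_gib GiB over a link_gbps link at the given efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_gib * GIB * 8
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    for gbps in (1, 10):
        # Hypothetical 32 GiB disk, 90% usable link rate.
        print(f"{gbps} Gbps: {transfer_seconds(32, gbps, efficiency=0.9):.0f} s")
```

With these assumptions, the 32 GiB disk takes roughly five minutes at 1 Gbps but about half a minute at 10 Gbps.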

Software Recommendations

Ensure compatibility between Proxmox VE versions and the chosen shared storage solutions.

Regularly update Proxmox VE and shared storage software to benefit from performance improvements, bug fixes, and security patches.

Implement monitoring and management tools to monitor storage performance, health, and capacity utilization.

Selecting the appropriate shared storage type and file system depends on factors such as performance requirements, scalability, redundancy, and specific use cases. It’s essential to evaluate these factors carefully to ensure the chosen solution aligns with your organization’s needs and infrastructure requirements.

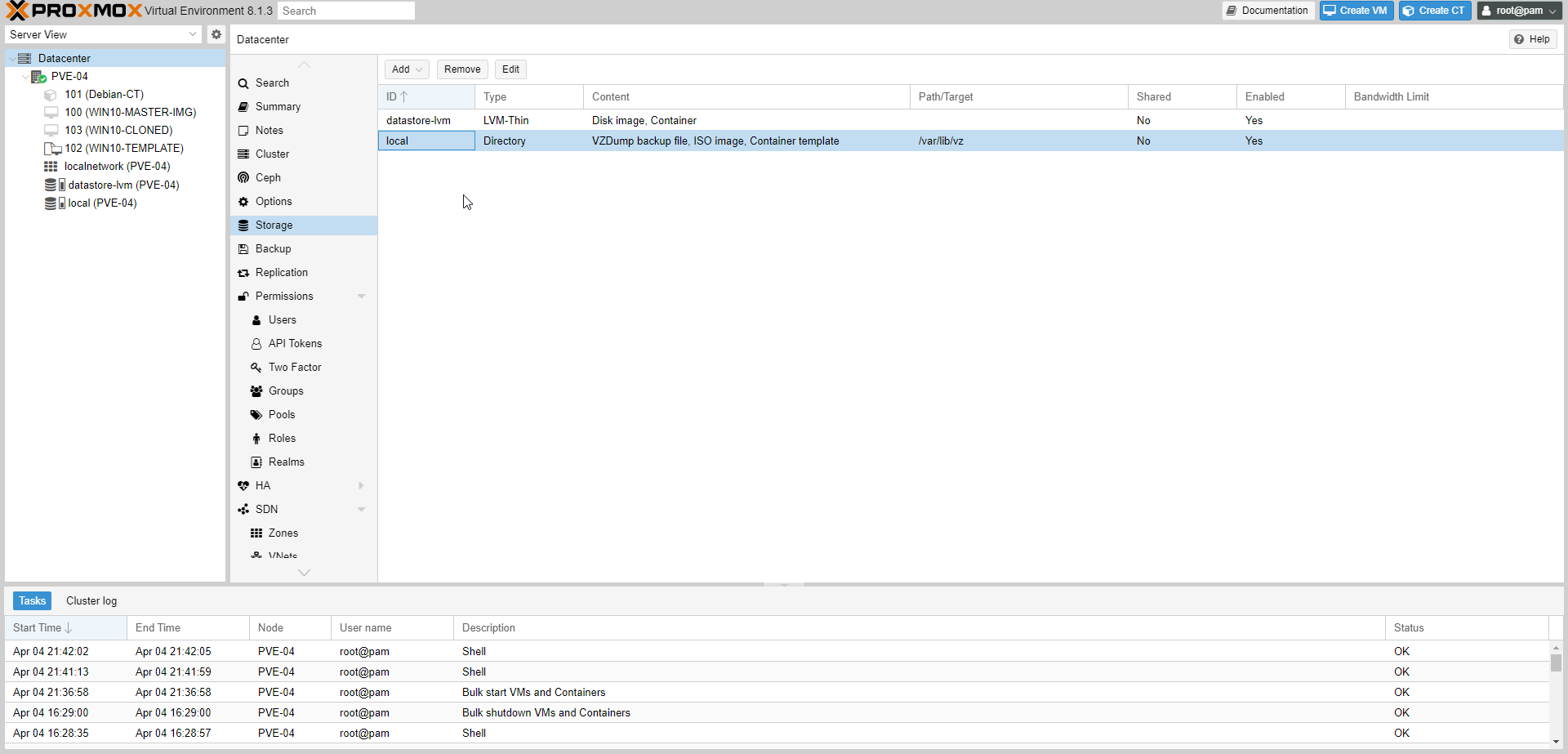

Storage Demonstration

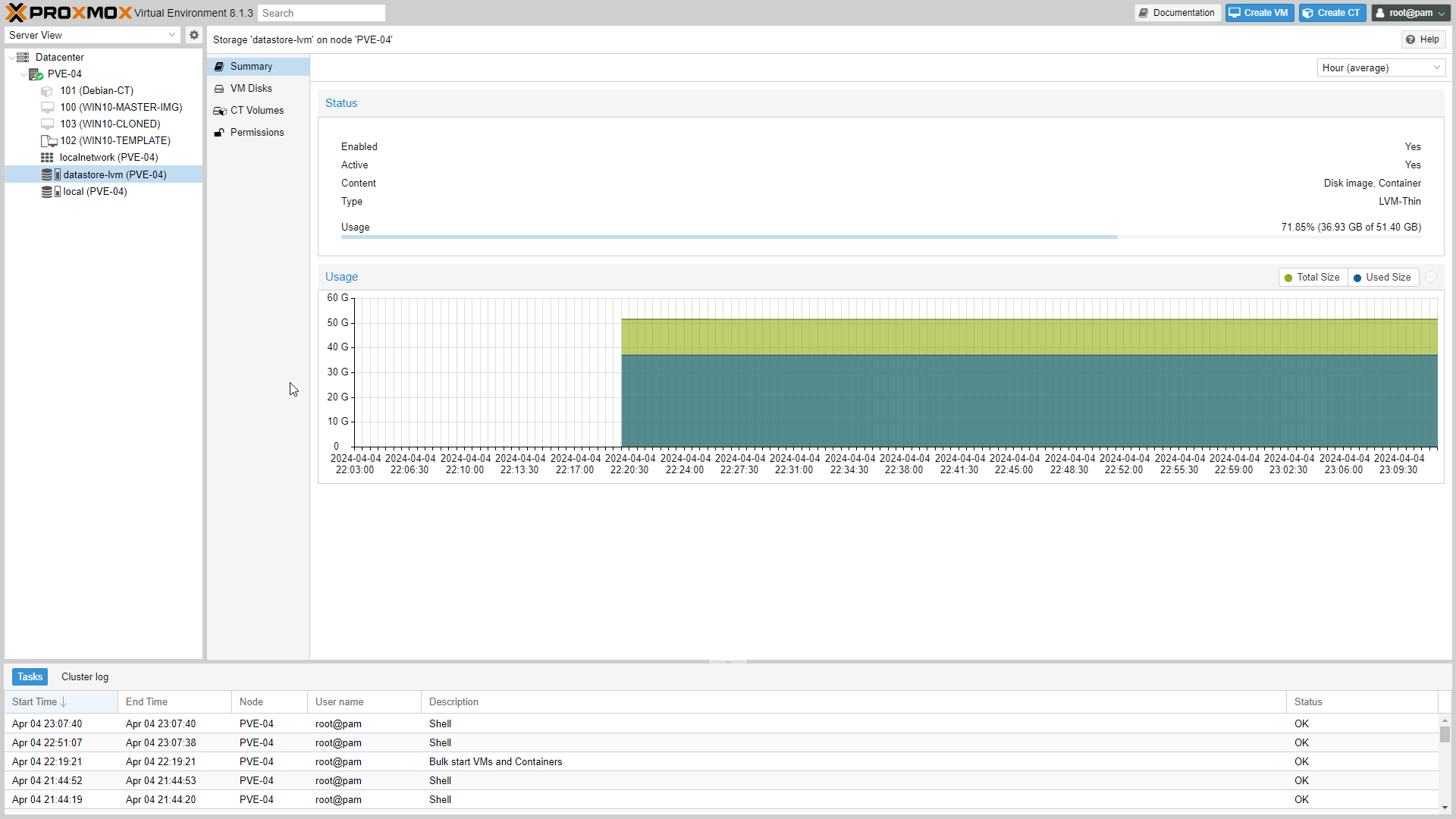

We will demonstrate the storage types of Proxmox Virtual Environment. We’ve already created storage named “local” as Directory type and storage named “datastore-lvm” as LVM-Thin (Logical Volume Manager) type in the previous tutorial, Proxmox Virtual Environment Post-Installation and Configuration-Part 02.

Directory

A directory is file-level storage, so you can store any type of content, like virtual disk images, containers, templates, ISO images or backup files.

We can mount additional storage via the standard Linux /etc/fstab and then define a directory storage for that mount point. This way, you can use any file system supported by Linux.

Access the Proxmox VE web interface by entering the server’s IP address in a web browser.

Select Datacenter from the resource tree on the left.

Select Storage from the content panel.

At the storage interface, we can manage storage (add, remove, and edit).

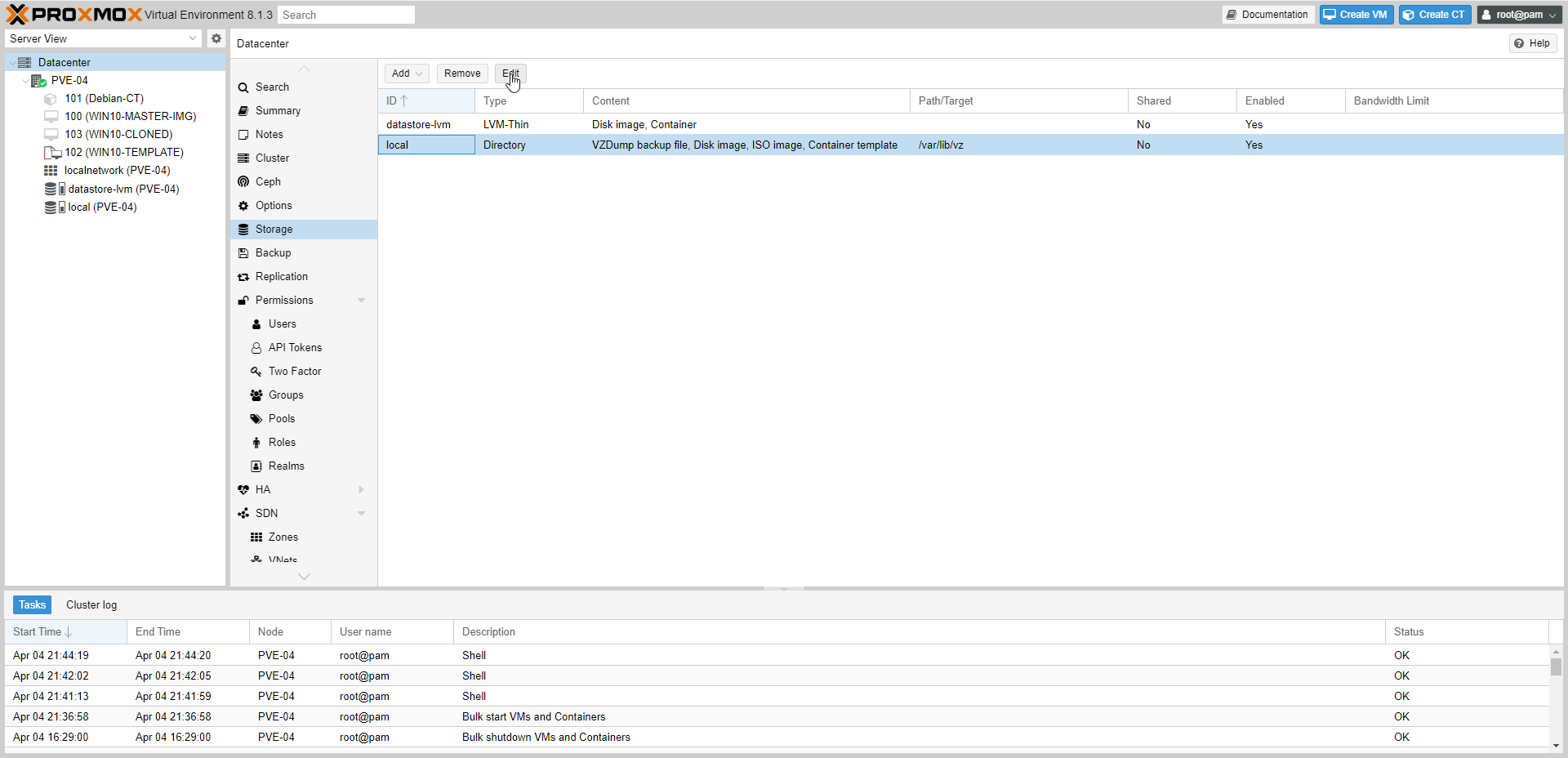

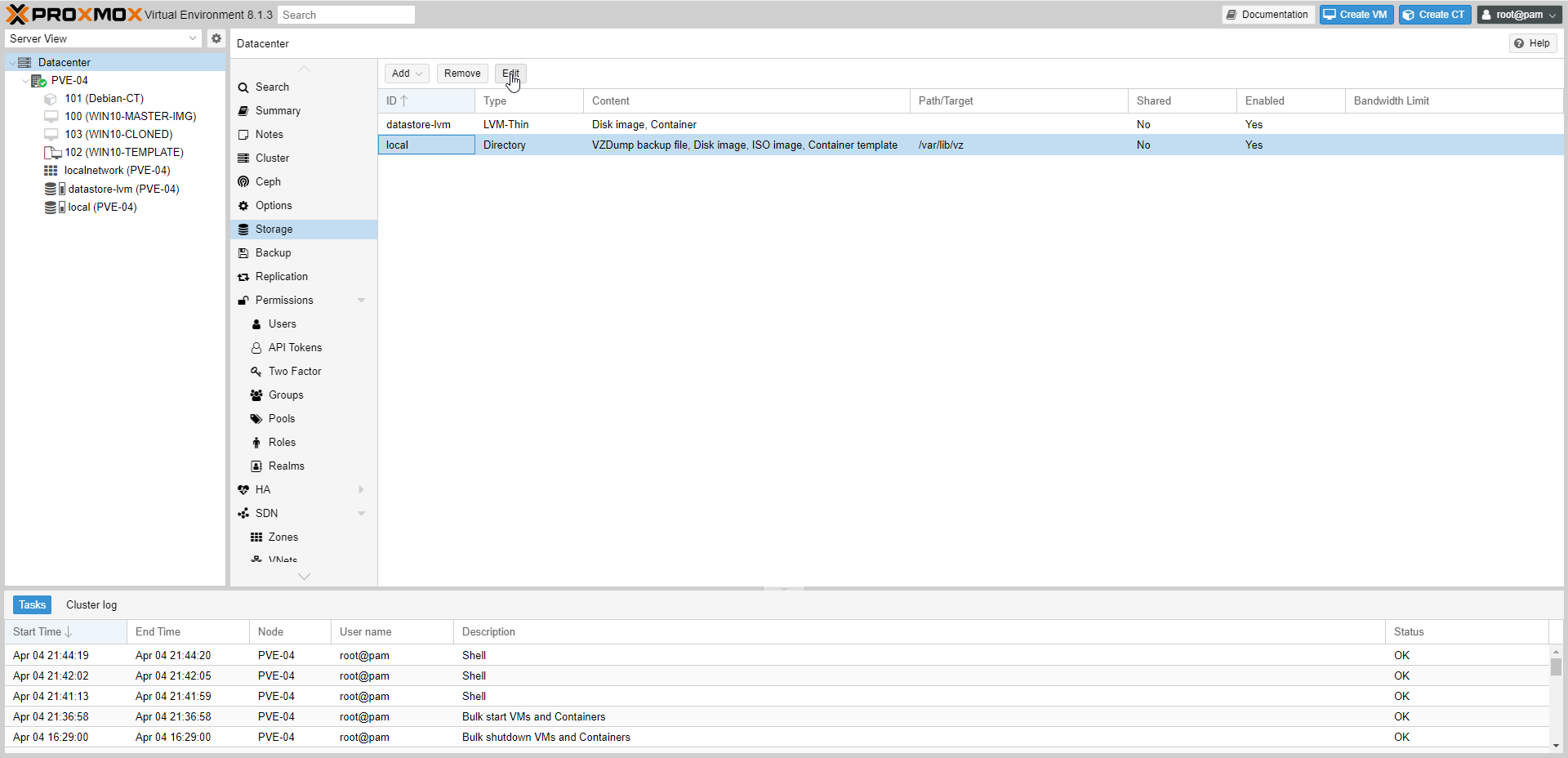

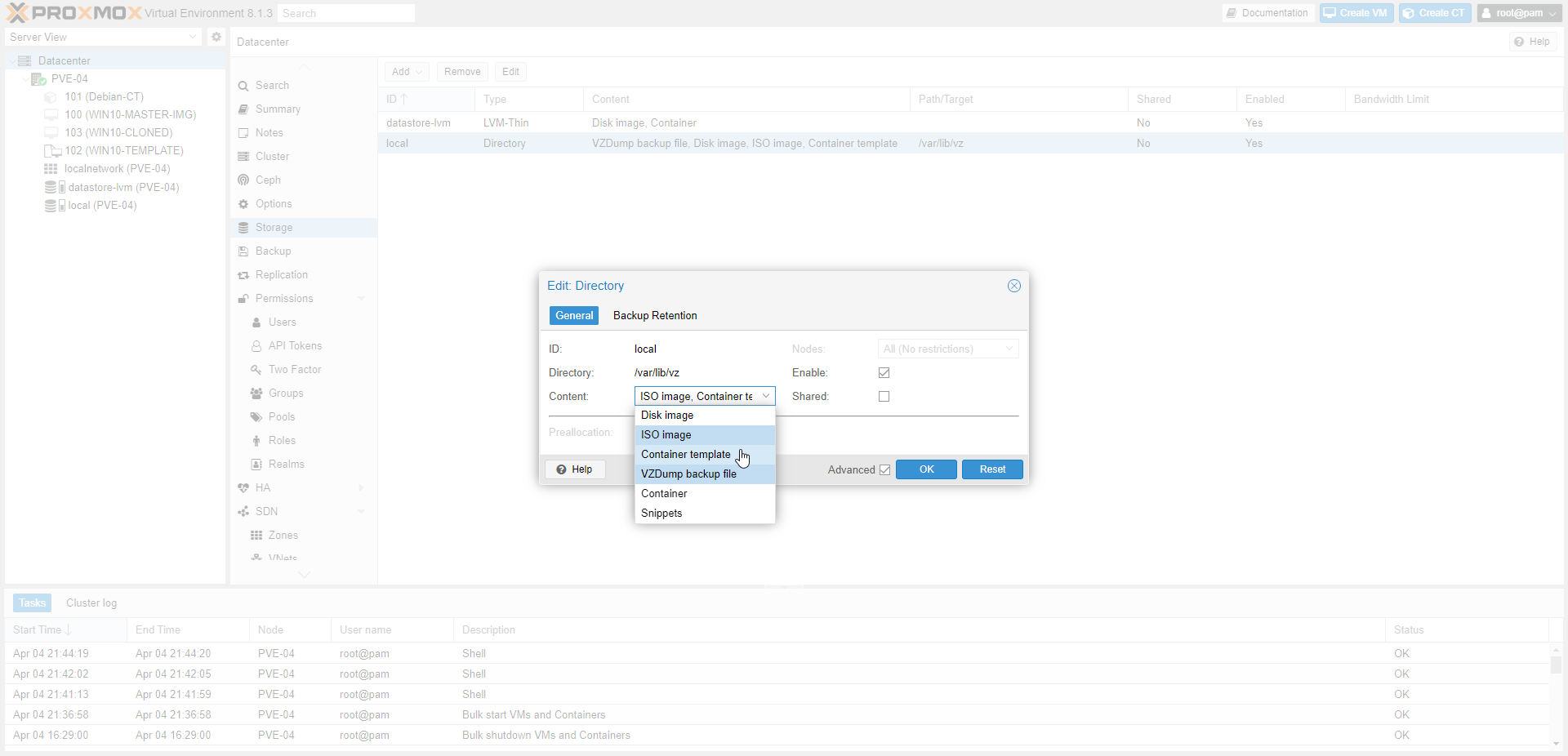

Select “local” storage to explore the contents.

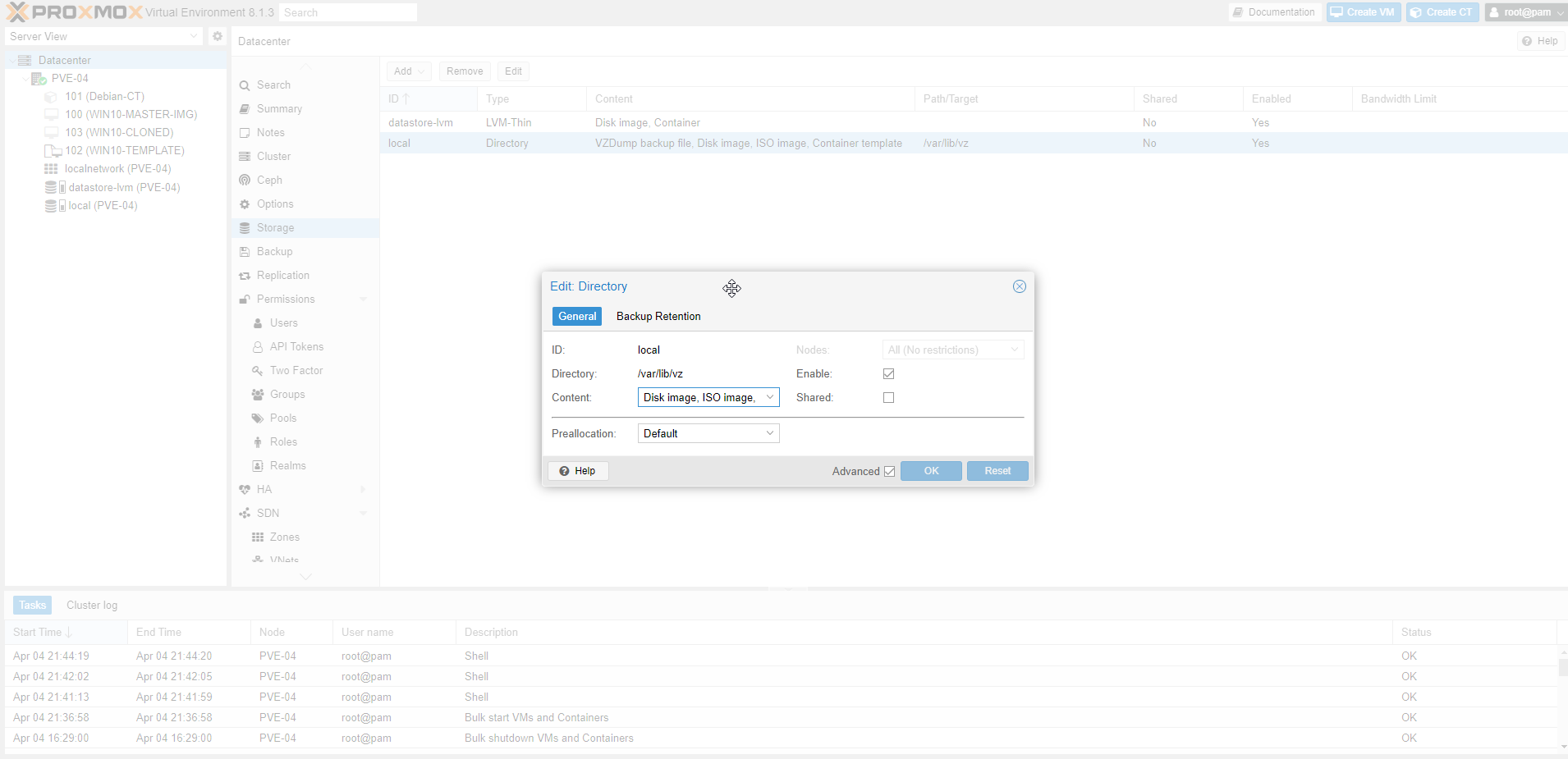

- Select Edit to edit Directory.

ID: the name of the storage.

Directory: /var/lib/vz is the path property for the Directory. This needs to be an absolute file system path.

Content: the types of content the storage may hold, selected from the drop-down menu.

Nodes: the nodes that can use this storage, selected from the drop-down menu.

Shared: marks the directory as shared between multiple nodes. Enabling it does not share the directory by itself; it tells Proxmox VE that the same content is available on every node, for example through a network file system mounted at the same path.
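Each of these fields ends up in the cluster-wide file /etc/pve/storage.cfg, where an entry starts with a "type: ID" line followed by indented option lines (path, content, and, when set, nodes and shared). The sketch below parses that layout so the mapping between the dialog and the file is easy to see. It assumes the simple layout shown; the sample entries and the helper names are hypothetical (the datastore-lvm values in particular are placeholders), and Proxmox VE itself reads this file with its own Perl code.

```python
"""Parse the stanza layout used by /etc/pve/storage.cfg (illustrative sketch)."""

# Hypothetical sample; the real file on your node may differ.
SAMPLE_CFG = """\
dir: local
\tpath /var/lib/vz
\tcontent iso,vztmpl,backup

lvmthin: datastore-lvm
\tthinpool datastore-lvm
\tvgname datastore-lvm
\tcontent rootdir,images
"""


def parse_storage_cfg(text):
    """Return {storage_id: {"type": ..., option: value, ...}}."""
    storages = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.rstrip()
        if not line or line.lstrip().startswith("#"):
            continue  # blank lines separate entries; '#' lines are comments
        if not raw_line[0].isspace():
            # Header line, e.g. "dir: local" -> type "dir", ID "local"
            storage_type, _, storage_id = line.partition(":")
            current = {"type": storage_type.strip()}
            storages[storage_id.strip()] = current
        elif current is not None:
            # Indented option line, e.g. "path /var/lib/vz"
            key, _, value = line.strip().partition(" ")
            current[key] = value.strip()
    return storages


def content_types(entry):
    """Split the comma-separated content option into a list."""
    return [c for c in entry.get("content", "").split(",") if c]


if __name__ == "__main__":
    for sid, entry in parse_storage_cfg(SAMPLE_CFG).items():
        print(sid, entry["type"], content_types(entry))
```

The ID field becomes the name after the colon, the Directory field becomes the path option, and the Content selections become the comma-separated content option.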

Select the Content drop-down menu to explore the available content types.

The Disk image content type enables local storage to hold virtual machine disks.

The ISO image content type enables local storage to hold ISO images, which can be uploaded and used to create virtual machines.

The Container template content type enables local storage to hold LXC container templates.

The VZDump backup file content type enables local storage to store backups of virtual machines and containers.

The Container content type enables local storage to hold LXC container root disks.
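In storage.cfg, the content types selected in this menu are stored as short keys. A sketch mapping the menu labels to those keys and checking them against what a storage type can hold; the per-type sets follow this lesson (a directory holds anything, LVM-Thin only disk images and containers) plus a few other common file-level and block-level types, and the helper name is hypothetical.

```python
"""Map the GUI content labels to storage.cfg keys and check them per storage type."""

# GUI label -> key used in the "content" option of /etc/pve/storage.cfg
CONTENT_KEYS = {
    "Disk image": "images",
    "ISO image": "iso",
    "Container template": "vztmpl",
    "VZDump backup file": "backup",
    "Container": "rootdir",
}

ALL_CONTENT = frozenset(CONTENT_KEYS.values())
# Content each storage type accepts (subset relevant to this lesson).
ALLOWED_CONTENT = {
    "dir": ALL_CONTENT,                           # file-level: any content type
    "nfs": ALL_CONTENT,
    "cifs": ALL_CONTENT,
    "lvmthin": frozenset({"images", "rootdir"}),  # block-level: disks only
    "lvm": frozenset({"images", "rootdir"}),
    "zfspool": frozenset({"images", "rootdir"}),
}


def unsupported_content(storage_type, labels):
    """Return the config keys for labels that storage_type cannot hold."""
    keys = {CONTENT_KEYS[label] for label in labels}
    return sorted(keys - ALLOWED_CONTENT[storage_type])


if __name__ == "__main__":
    wanted = ["Disk image", "ISO image", "Container"]
    print("dir:", unsupported_content("dir", wanted))          # dir: []
    print("lvmthin:", unsupported_content("lvmthin", wanted))  # lvmthin: ['iso']
```

This is the same restriction the GUI applies when you edit an LVM-Thin storage: ISO images, templates, and backups are simply not offered.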

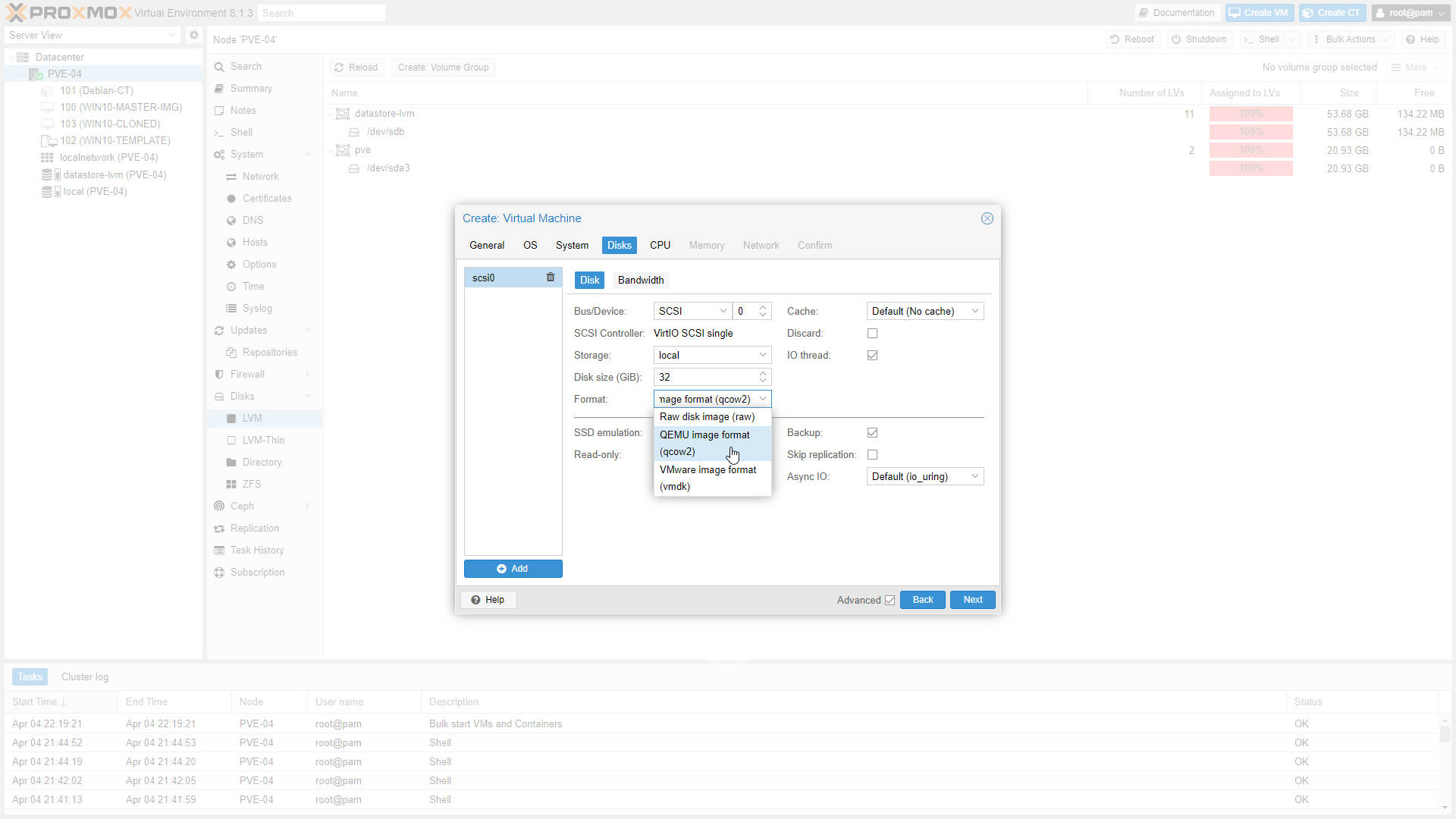

When creating a VM and choosing local as the storage, as shown above, we can select different image formats: Raw disk image (raw), QEMU image format (qcow2), and VMware image format (vmdk). Since the local storage is of type Directory, we can choose which image format to work with. On file-based storages, snapshots are possible with the qcow2 format.

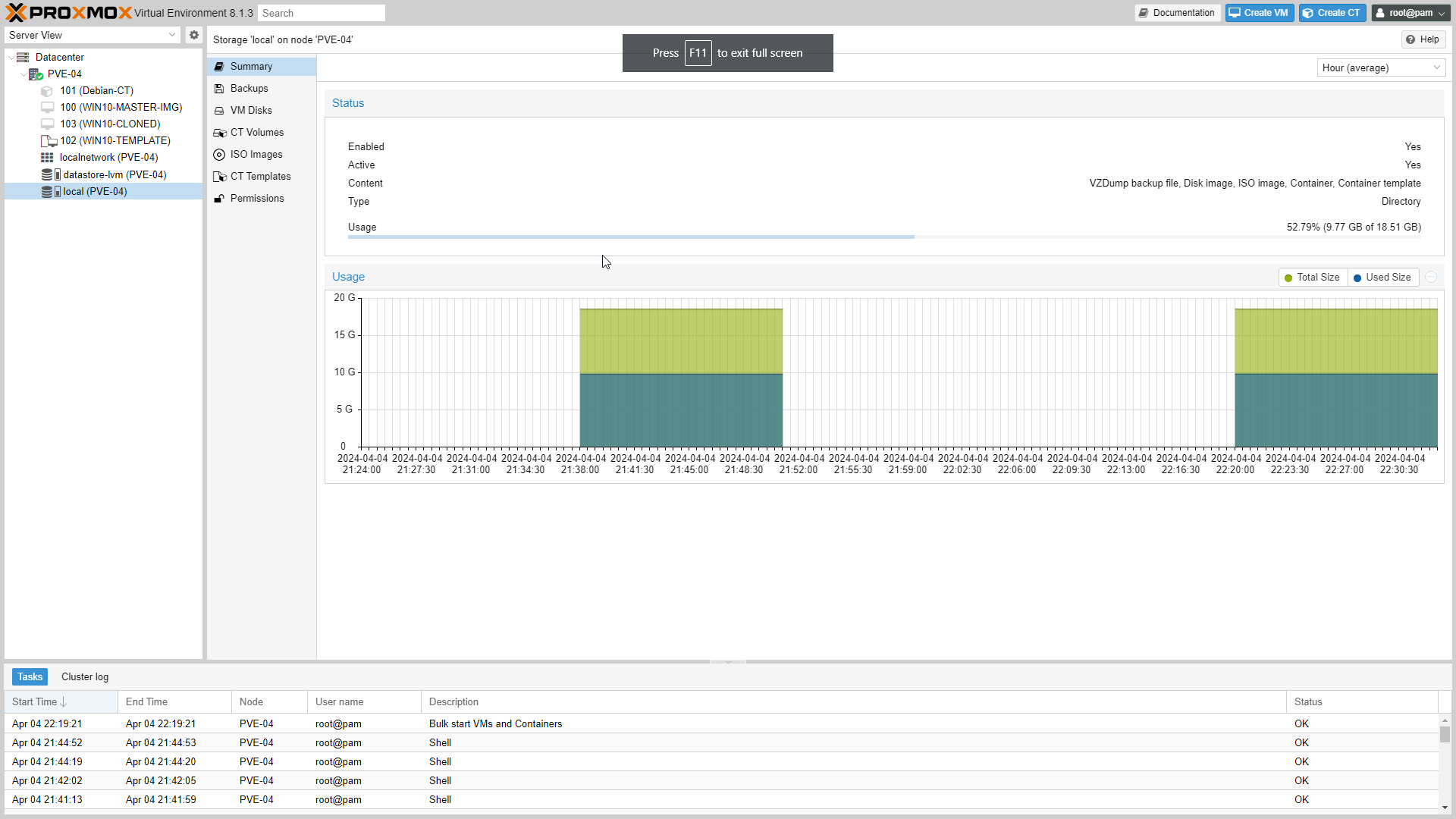

Select local (PVE-04) from the resource tree.

We can see all of this storage’s content types in the content panel: Summary, Backups, VM Disks, CT Volumes, ISO Images, and CT Templates.

The Proxmox VE installation CD offers several options for local disk management, and the current default setup uses LVM. The installer lets you select a single disk for such setup and uses that disk as physical volume for the Volume Group (VG) pve.

“pve-root” (the root LV in the pve volume group) is formatted and used as your root filesystem, and the “local” directory storage (/var/lib/vz) resides on it.

LVM and LVM-Thin

LVM stands for Logical Volume Manager, which is a storage management solution for Linux systems. It allows administrators to manage disk drives and storage in a more flexible and efficient manner compared to traditional partitioning.

LVM normally allocates blocks when you create a volume. LVM thin pools instead allocate blocks when they are written. This behavior is called thin provisioning, because volumes can be much larger than the physically available space.

LVM thin is block storage but fully supports snapshots and clones efficiently. New volumes are automatically initialized with zero.

LVM thin pools cannot be shared across multiple nodes, so you can only use them as local storage.
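A toy Python model of a thin pool helps show why volumes can be larger than the physical space: nothing is allocated when a volume is created, a block is allocated the first time it is written, and unwritten blocks read back as zero. It is an illustration under those assumptions only, not how LVM implements thin pools; ThinPool, its methods, the volume names, and the block counts are hypothetical.

```python
"""Toy model of thin provisioning: physical blocks are allocated on first write."""


class ThinPool:
    """Hypothetical thin pool measured in fixed-size blocks."""

    def __init__(self, physical_blocks):
        self.physical_blocks = physical_blocks
        self.used = 0       # physical blocks actually allocated so far
        self.volumes = {}   # volume name -> (virtual size in blocks, {block: data})

    def create_volume(self, name, virtual_blocks):
        # Nothing is reserved: only the virtual size is recorded.
        self.volumes[name] = (virtual_blocks, {})

    def _mapping(self, name, block):
        size, mapping = self.volumes[name]
        if not 0 <= block < size:
            raise IndexError(f"block {block} is outside volume {name}")
        return mapping

    def write(self, name, block, data):
        mapping = self._mapping(name, block)
        if block not in mapping:
            # First write to this block: take one physical block from the pool.
            if self.used == self.physical_blocks:
                raise RuntimeError("thin pool is full")
            self.used += 1
        mapping[block] = data

    def read(self, name, block):
        # Blocks that were never written read back as zeros.
        return self._mapping(name, block).get(block, b"\x00")

    def overcommit_ratio(self):
        virtual = sum(size for size, _ in self.volumes.values())
        return virtual / self.physical_blocks


if __name__ == "__main__":
    pool = ThinPool(physical_blocks=100)
    pool.create_volume("vm-100-disk-0", 80)
    pool.create_volume("vm-101-disk-0", 80)
    pool.write("vm-100-disk-0", 5, b"data")
    print(pool.used, pool.overcommit_ratio())  # 1 1.6
```

The overcommit ratio is the number to watch: if the pool fills up, writes fail, which is why the usage percentage of a thin pool needs monitoring.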

Here’s an overview of the architecture of LVM:

Physical Volumes (PV):

These are the physical storage devices such as hard disk drives (HDDs), solid-state drives (SSDs), or partitions on those devices.

LVM organizes physical storage into physical volumes. Each physical volume typically corresponds to a disk or a disk partition.

Volume Groups (VG):

Physical volumes are grouped into volume groups.

A volume group collects one or more physical volumes into a single administrative unit.

Volume groups represent the pool of storage from which logical volumes are created.

Logical Volumes (LV):

Logical volumes are created within volume groups.

These logical volumes act as virtual partitions that can be formatted with a filesystem and mounted, just like physical partitions.

Logical volumes can span multiple physical volumes within the same volume group, providing flexibility and scalability.

Logical volumes are the entities that users and applications interact with at the filesystem level.

Filesystems:

Filesystems can be created on top of logical volumes, providing a way to organize and store data in a structured manner.

Common filesystems like ext4, XFS, and others can be used with LVM.

Metadata:

LVM maintains metadata about physical volumes, volume groups, and logical volumes.

This metadata includes information such as the location of physical extents, mapping between logical and physical extents, volume group properties, etc.

LVM metadata is stored on the physical volumes and allows for the dynamic management of storage resources.

Commands and Tools:

LVM provides a set of command-line tools such as pvcreate, vgcreate, lvcreate, lvextend, and lvresize for managing physical volumes, volume groups, and logical volumes. These tools allow administrators to create, extend, resize, and manage logical volumes dynamically without the need to repartition disks or disrupt data access.
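The PV, VG, and LV relationship can be sketched in a few lines of Python: a volume group pools the extents of its physical volumes, and a logical volume is a list of extent ranges that may span several PVs. A simplified model, assuming the default 4 MiB extent size, whole-disk PVs with no metadata overhead, and a linear allocation that fills PVs in order; the class, its lvcreate method, and the device names are hypothetical and only borrow the names of the real commands.

```python
"""Simplified model of LVM: PVs provide extents, a VG pools them, LVs use them."""
import math

PE_SIZE_MIB = 4  # default physical extent size used by vgcreate


class VolumeGroup:
    """Hypothetical VG built from whole-disk PVs (metadata overhead ignored)."""

    def __init__(self, name, pv_sizes_mib):
        self.name = name
        # Free extents per PV, kept in the order the PVs were added.
        self.free = {pv: size // PE_SIZE_MIB for pv, size in pv_sizes_mib.items()}
        self.lvs = {}   # LV name -> list of (pv, extent count) segments

    def free_extents(self):
        return sum(self.free.values())

    def lvcreate(self, lv_name, size_mib):
        """Allocate an LV linearly, spilling onto the next PV when one is full."""
        needed = math.ceil(size_mib / PE_SIZE_MIB)  # sizes round up to whole extents
        if needed > self.free_extents():
            raise ValueError(f"not enough free extents in {self.name}")
        segments = []
        for pv, available in self.free.items():
            take = min(needed, available)
            if take:
                segments.append((pv, take))
                self.free[pv] -= take
                needed -= take
            if needed == 0:
                break
        self.lvs[lv_name] = segments
        return segments


if __name__ == "__main__":
    vg = VolumeGroup("vg-demo", {"/dev/sdb": 1024, "/dev/sdc": 1024})
    print(vg.lvcreate("data", 1500))  # [('/dev/sdb', 256), ('/dev/sdc', 119)]
    print(vg.free_extents())          # 137
```

The 1500 MiB volume does not fit on one 1024 MiB disk, so it spans both PVs, which is exactly the flexibility the volume group layer adds over plain partitions.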

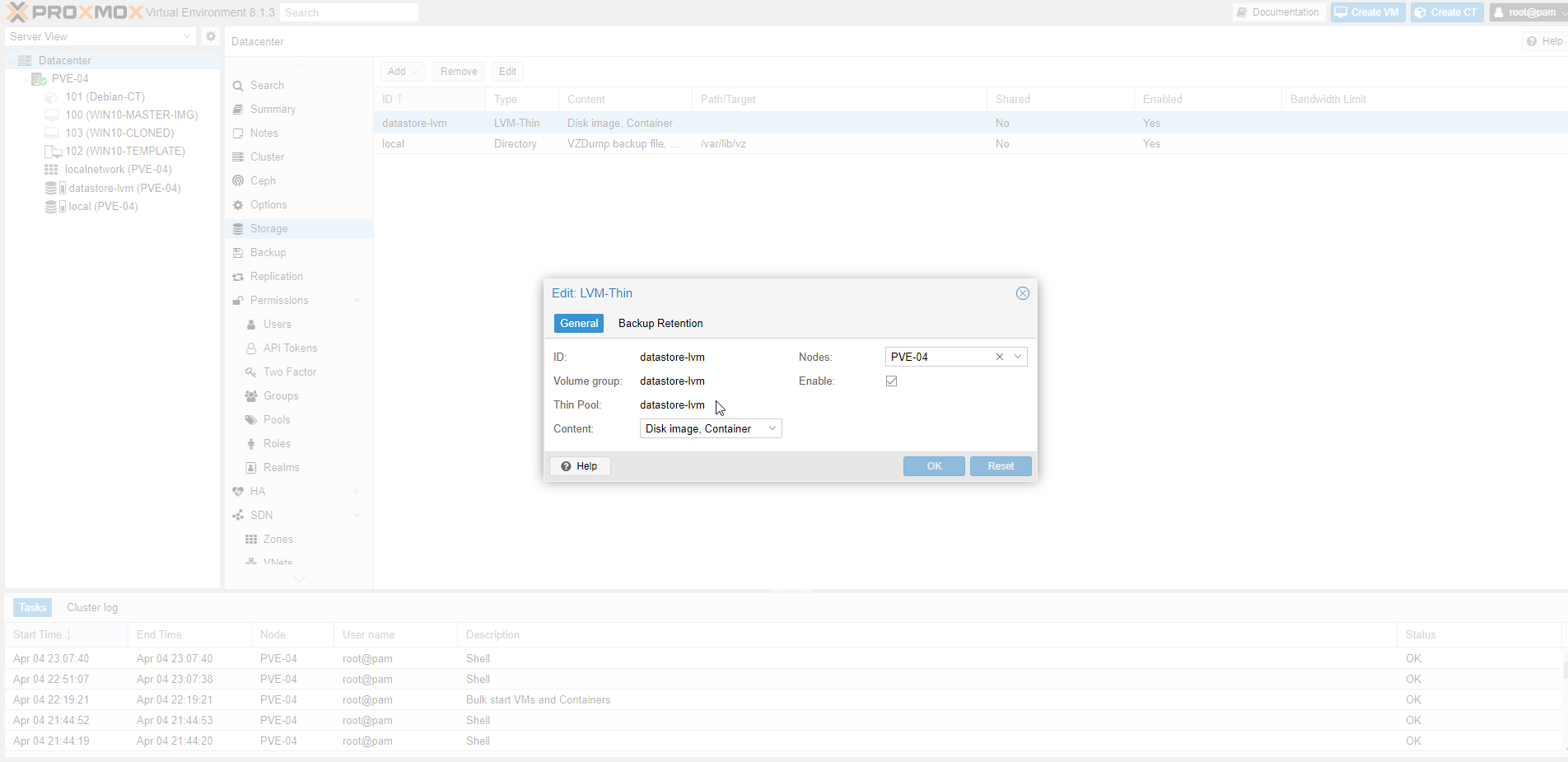

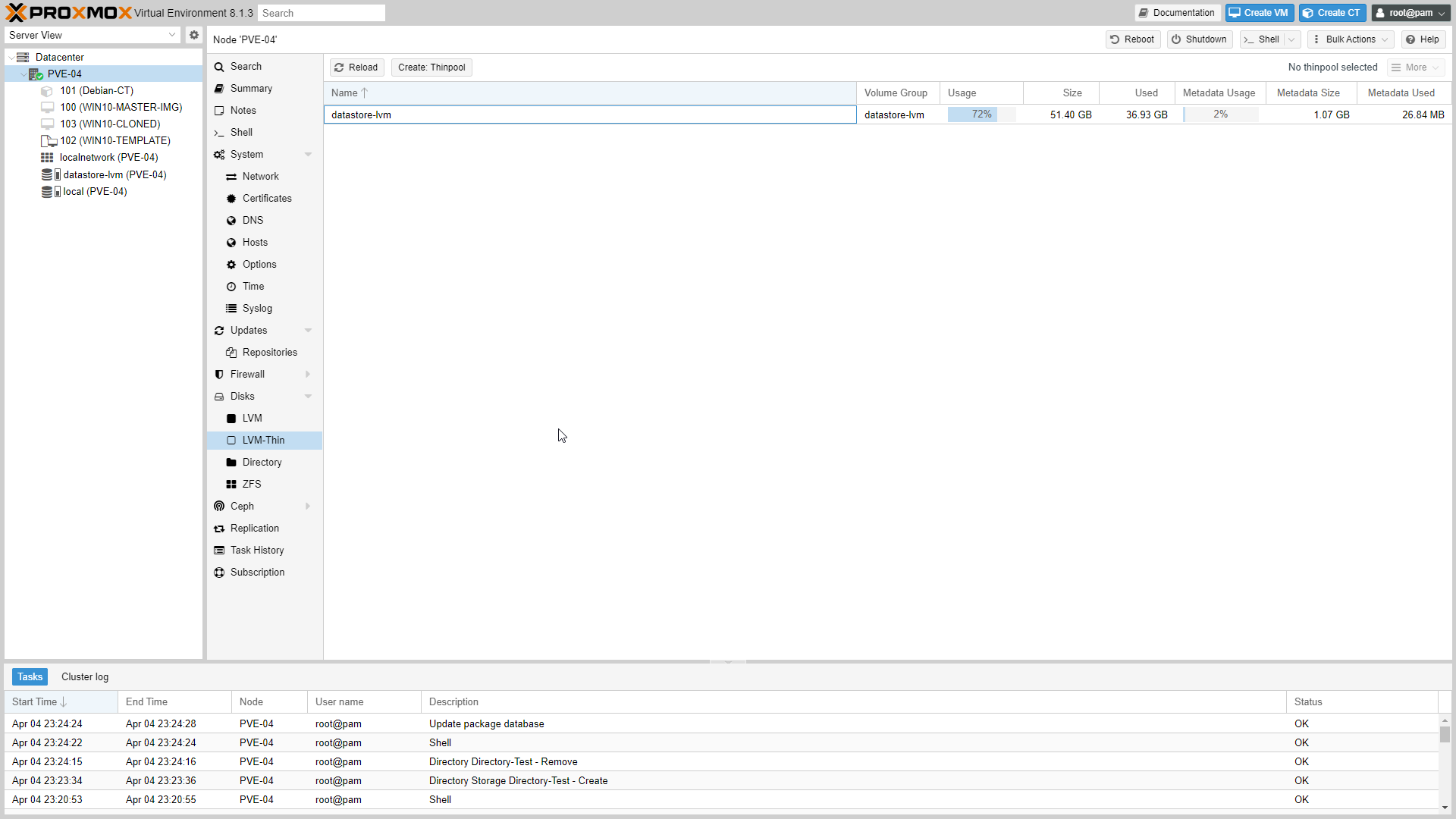

The image above shows the datastore-lvm storage, which is of type LVM-Thin.

LVM-Thin can only hold disk images and containers.

LVM-Thin only supports the raw image format.

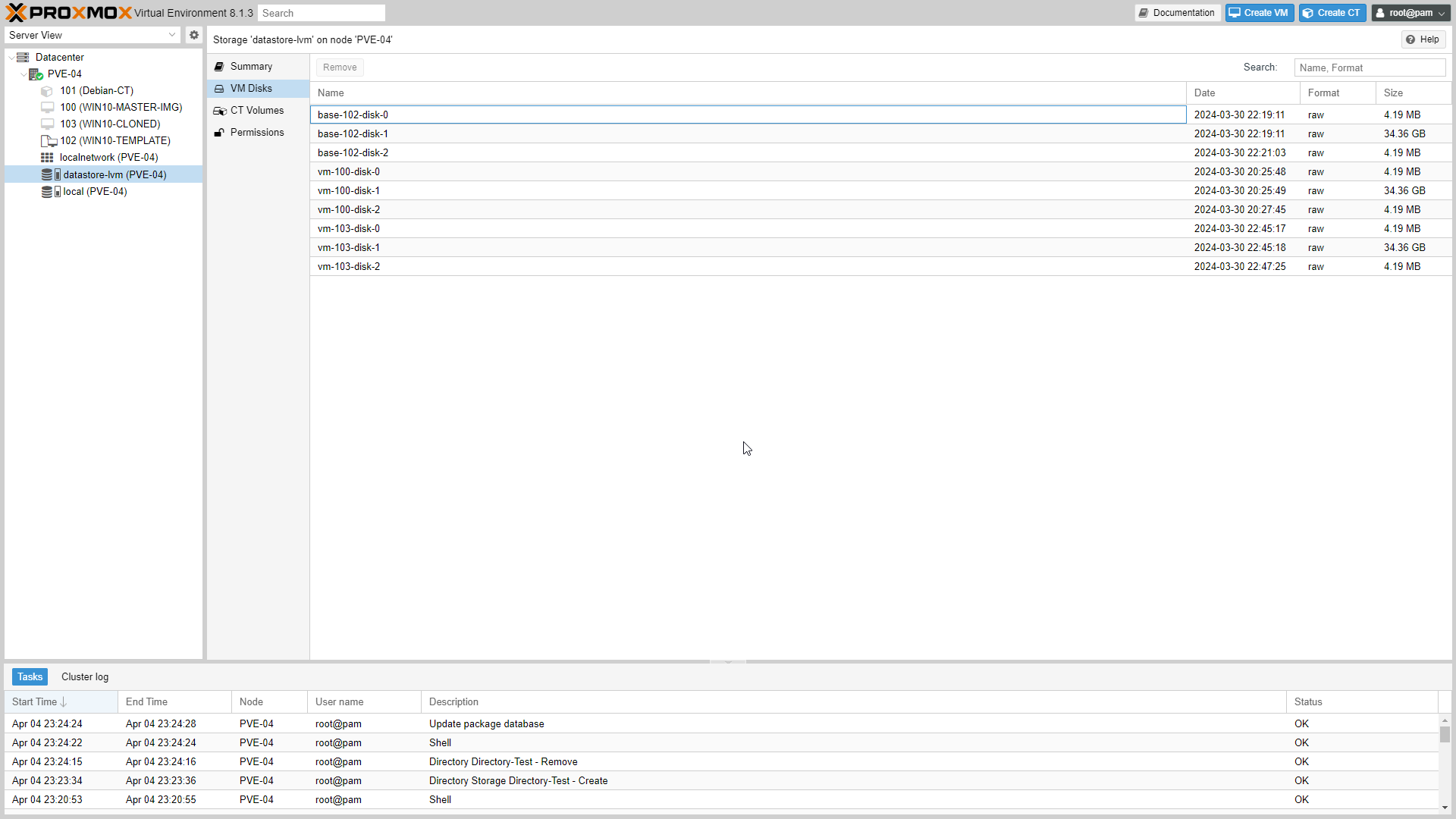

- The image above shows the content panel of the datastore-lvm.

Select VM Disks.

VM Disks lists all the virtual machine disks stored in datastore-lvm with their name, date, format, and size.

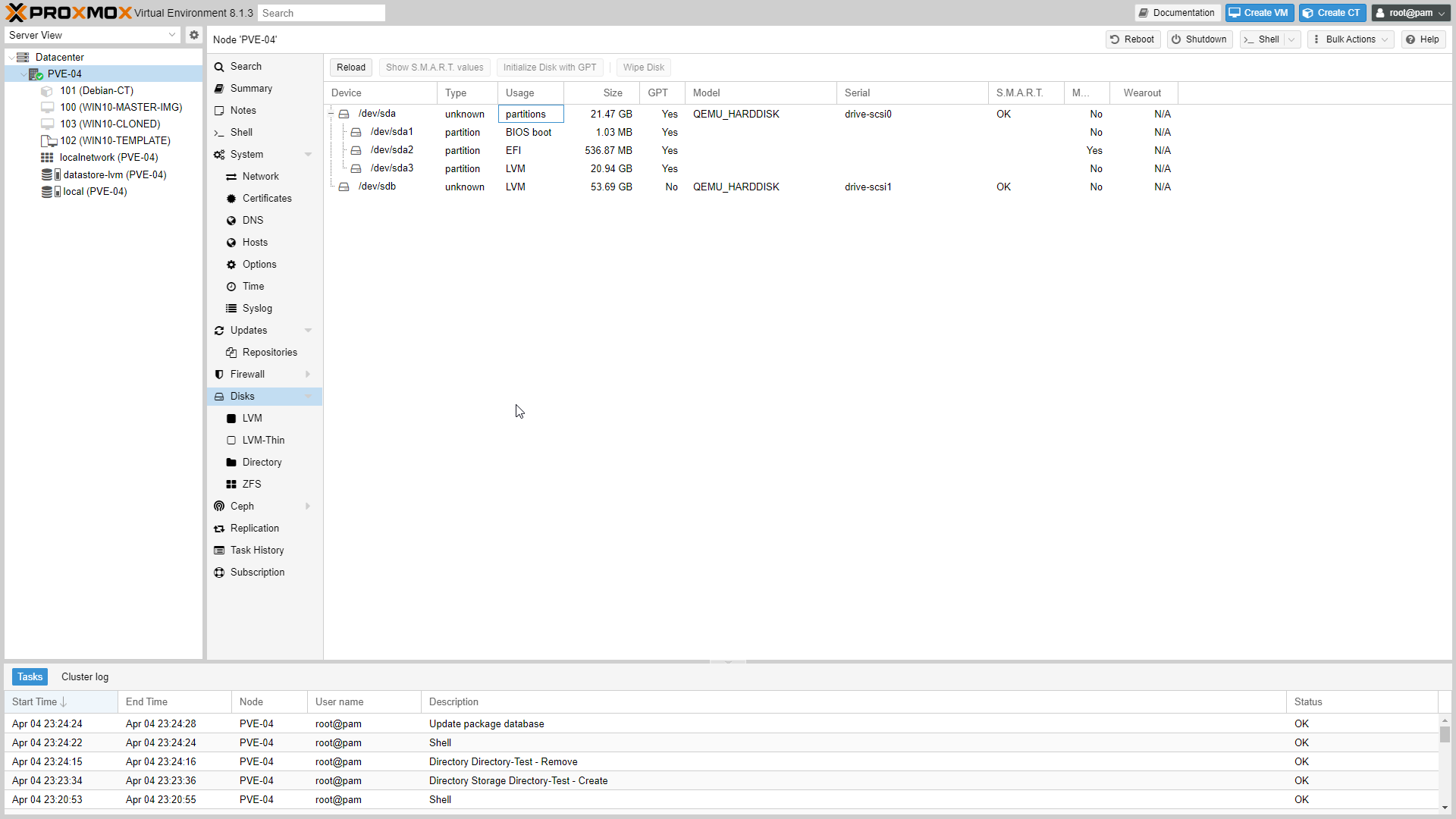

When we select Disks from the content panel of PVE-04, we can see the local disks installed.

We have 2 disks, /dev/sda and /dev/sdb, with their partitions.

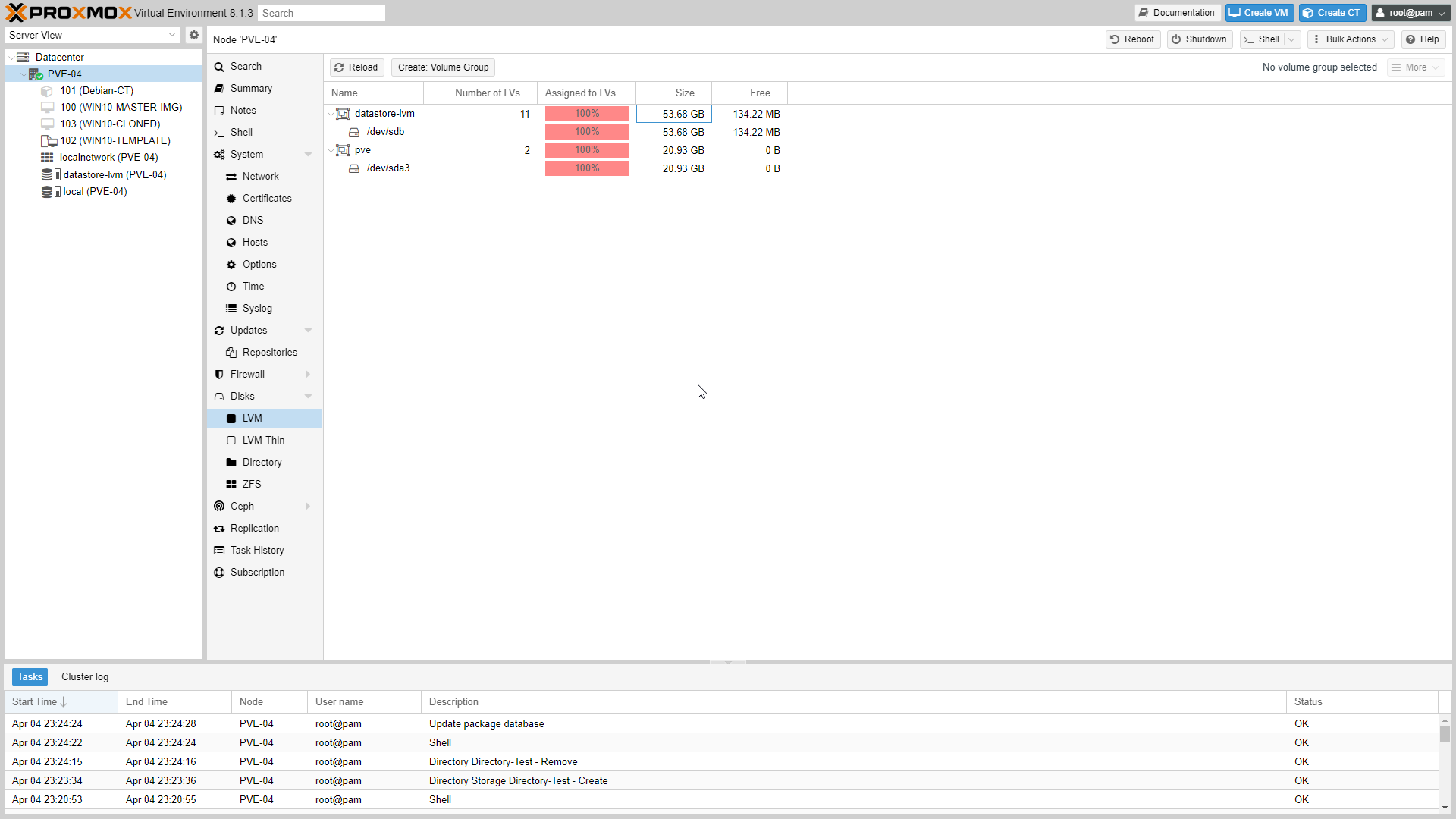

Under Disks, we see LVM.

We can see the volume groups created and the number of logical volumes (LVs) in each.

“pve” is the volume group that holds the root LV, which is formatted and used as your root filesystem (and hosts the “local” storage).

datastore-lvm is the volume group holding the LV used as an LVM-Thin pool.

Under LVM, we have LVM-Thin.

LVM-Thin lists the thin pools created, with their name, volume group, and usage percentage.

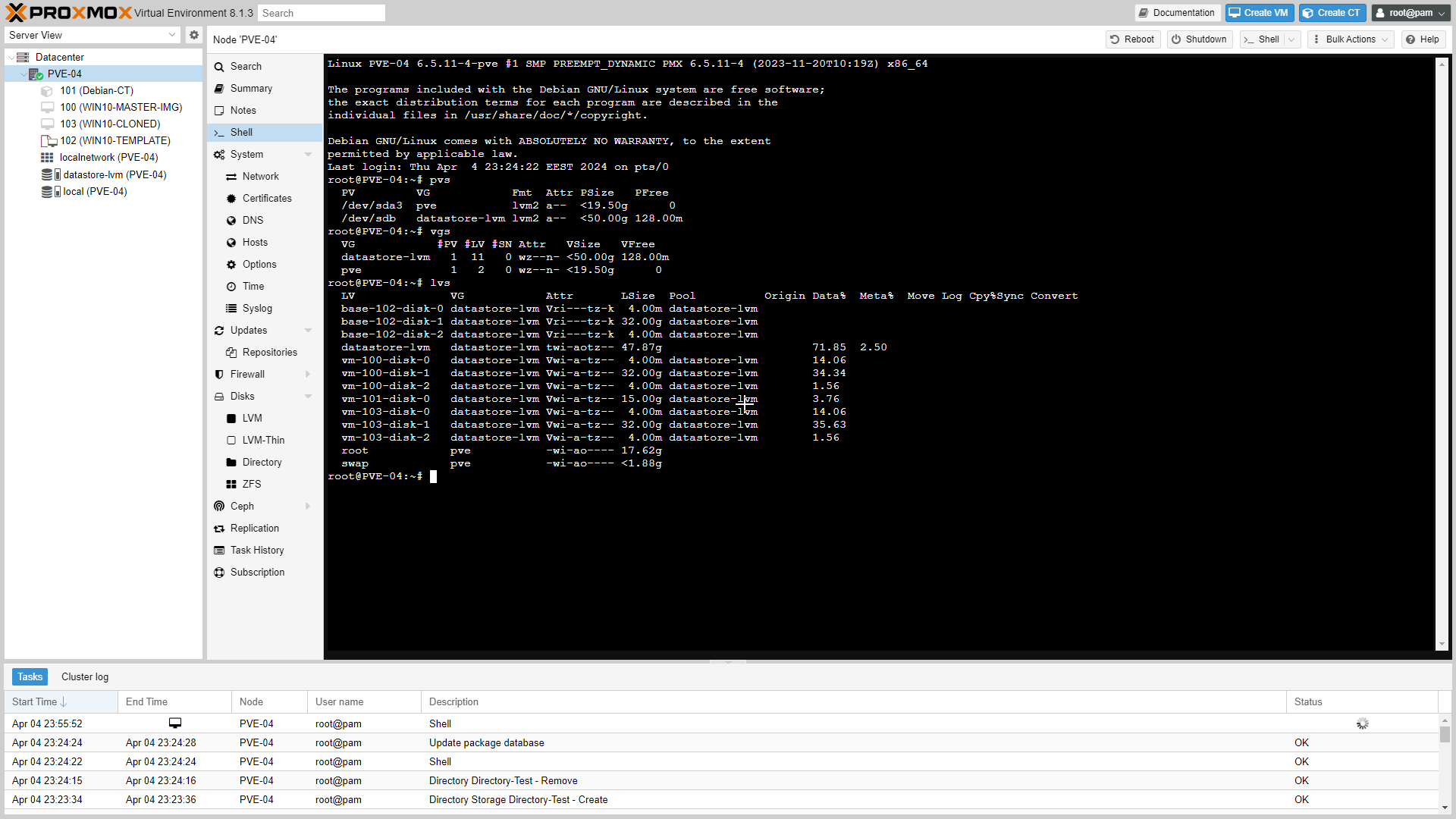

- The image above shows the LVM commands executed from Shell.

pvs #Displays information about physical volumes.

vgs #Displays information about volume groups.

lvs #Displays information about logical volumes.

pvdisplay #Displays detailed information about the physical volumes.

vgdisplay #Displays detailed information about volume groups.

lvdisplay #Displays detailed information about logical volumes.
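The same information can also be consumed from scripts. LVM's reporting commands accept --reportformat json, and --units b --nosuffix prints sizes as plain byte counts; the sketch below parses that output. It assumes the report layout {"report": [{"lv": [...]}]}, the field list in LVS_CMD is an illustrative choice, and the helper names are hypothetical. Running lvs requires root on the Proxmox VE host, so the parser can also be tried on a saved sample.

```python
"""Parse the JSON report printed by lvs (LVM2) into plain Python dicts."""
import json
import subprocess

# Field list chosen for illustration; run on the Proxmox VE host as root.
LVS_CMD = ["lvs", "--reportformat", "json", "--units", "b", "--nosuffix",
           "-o", "lv_name,vg_name,lv_size,data_percent"]


def parse_lvs_json(text):
    """Return one dict per LV: name, volume group, size in bytes, data usage %."""
    volumes = []
    for section in json.loads(text)["report"]:
        for lv in section.get("lv", []):
            percent = lv.get("data_percent", "")
            volumes.append({
                "name": lv["lv_name"],
                "vg": lv["vg_name"],
                # int(float(...)) also tolerates a value printed with decimals
                "size_bytes": int(float(lv["lv_size"])),
                # data_percent is empty for LVs that are not thin pools or thin volumes
                "data_percent": float(percent) if percent else None,
            })
    return volumes


def run_lvs():
    """Run lvs locally and parse its report (needs root and LVM2 installed)."""
    result = subprocess.run(LVS_CMD, capture_output=True, text=True, check=True)
    return parse_lvs_json(result.stdout)
```

On the host, run_lvs() returns one dict per logical volume; the thin pool's data_percent is the same usage figure the LVM-Thin view shows in the GUI.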

Written by

Rony Hanna

Results-oriented IT professional in various fields of IT, including Systems, Networking, File Systems, Security, Programming Languages, Virtualization, Storage, and Backups.