HTTP Fundamentals: Understanding Undici and its Working Mechanism

Matteo Collina

Evolving Node.js's http/https modules is a daunting task. One reason is that they use the same API for both client and server functionality, and connection pooling is intricately tied to the public API.

Another significant hurdle is that http/https cannot be modified without disrupting frameworks like Express, which alter the prototypes of the base classes and rely on their internal workings.

This is where Undici, a modern HTTP client library for Node.js, steps in to address these challenges. In this article, we look at why Undici exists and how it works.

Why Undici.request?

Undici.request is designed to optimize application performance without compromising developer experience. It is highly configurable, exposes response bodies as Node.js streams, and supports efficient HTTP/1.1 pipelining. Speed and flexibility are its priorities.

Why Undici.fetch?

Undici.fetch has an 88% pass rate in the Web Platform Tests (WPT), making it nearly compliant with the fetch standard. Because it uses WHATWG web streams, the same code can run in Node.js and in browsers, which makes it a good choice for cross-platform development. It also decouples the protocol from the API, so it can offer HTTP/2 support as well as HTTP/1.1 pipelining.
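Here is a minimal sketch of what "isomorphic" means in practice: the response body is a standard ReadableStream, read with getReader() exactly as it would be in a browser. It uses the fetch() built into Node.js (which is powered by Undici) against a throwaway local server on a random port; the server and its strings are illustrative assumptions.

```javascript
// Sketch: reading a fetch() response body as a WHATWG ReadableStream.
// Uses Node.js's built-in fetch(), which is powered by Undici.
import { createServer } from "node:http";
import { once } from "node:events";

// Throwaway local server that sends its body in two writes
const server = createServer((req, res) => {
  res.write("hello, ");
  res.end("web streams");
});
server.listen(0, "127.0.0.1");
await once(server, "listening");
const { port } = server.address();

const response = await fetch(`http://127.0.0.1:${port}/`);

// response.body is a standard ReadableStream: this loop is the same code
// you would write in a browser
const reader = response.body.getReader();
const decoder = new TextDecoder();
let text = "";
for (;;) {
  const { done, value } = await reader.read();
  if (done) break;
  text += decoder.decode(value, { stream: true });
}
text += decoder.decode(); // flush any buffered bytes

server.close();
server.closeAllConnections(); // drop fetch's idle keep-alive socket
console.log(text); // hello, web streams
```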

Is overhead a discriminating factor?

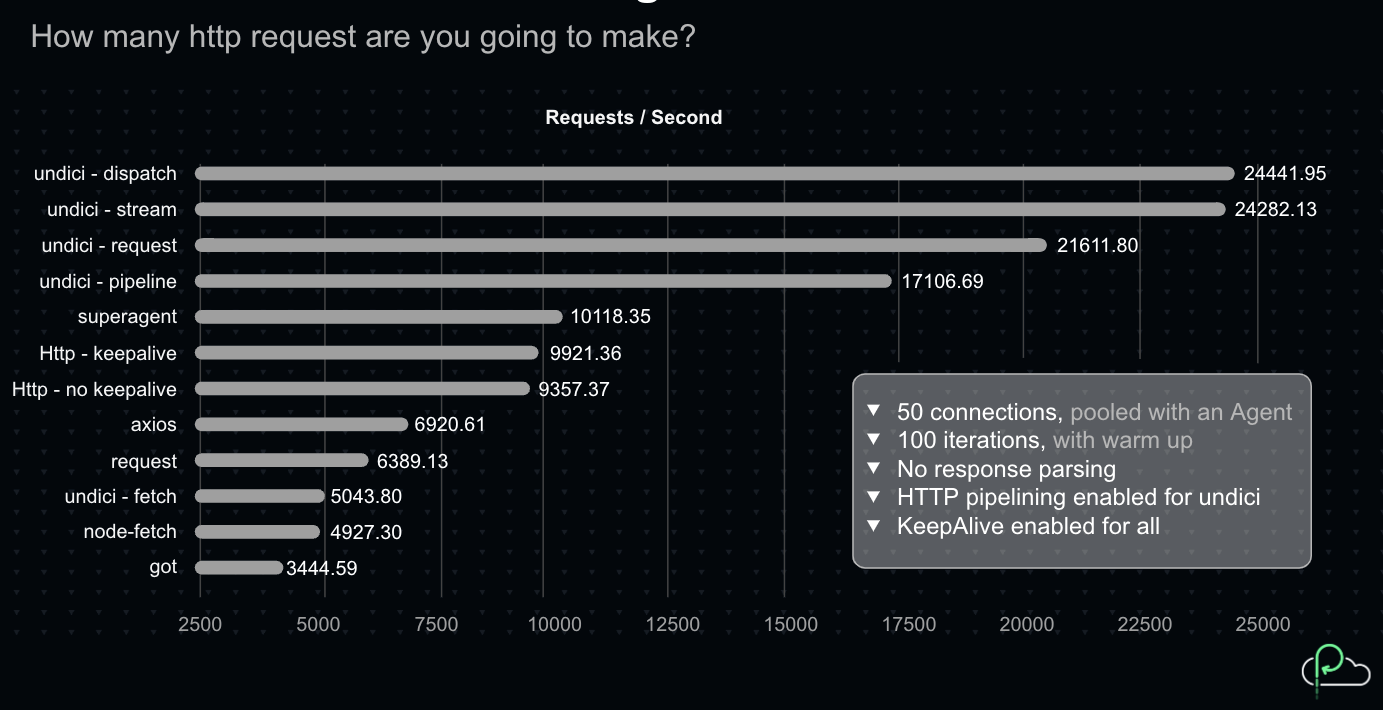

Undici, which is integrated into Node.js core, outperforms the other HTTP clients in this benchmark in both speed and efficiency. Its fetch() implementation is not the fastest of Undici's own APIs, but its overall performance still exceeds the other options available in the Node.js ecosystem.

As the benchmark above shows, undici-fetch can process up to 5043.80 requests per second.

Undici’s Working Mechanisms

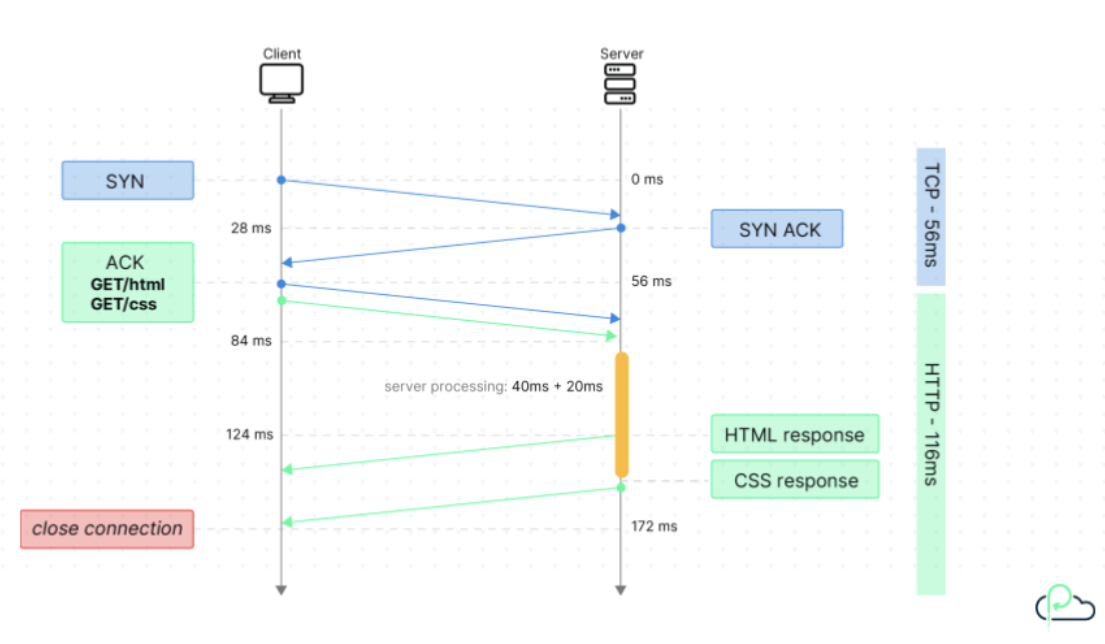

Normal HTTP

In a traditional HTTP exchange, the client opens a TCP connection to the server and requests a resource, referred to in the diagram as a "blob". In this example the server responds in under 50 milliseconds; that time covers the network round trip plus the server-side processing.

Only after receiving the response does the client send its next request over the same connection, so requests are handled strictly one after another. This is the typical flow in browsers and in HTTP clients built on Node.js core's http module. The drawback of this sequential approach is that the socket sits idle while each request and response is in transit, which wastes the connection.
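The sequential flow can be sketched with Node.js core's http module. This is a minimal sketch assuming a throwaway local server: an Agent with keepAlive: true and maxSockets: 1 forces two requests onto one socket, so the second request can only go out once the first response has been fully received. The server echoes the client-side port, which lets us confirm the socket was reused.

```javascript
// Sketch: sequential requests reusing one keep-alive socket (Node.js core http).
import { createServer, request, Agent } from "node:http";
import { once } from "node:events";

// The server replies with the client-side port of the socket the request used
const server = createServer((req, res) => {
  res.end(String(req.socket.remotePort));
});
server.listen(0, "127.0.0.1");
await once(server, "listening");
const { port } = server.address();

// keepAlive reuses the socket; maxSockets: 1 makes requests queue for it
const agent = new Agent({ keepAlive: true, maxSockets: 1 });

function get(path) {
  return new Promise((resolve, reject) => {
    const req = request({ host: "127.0.0.1", port, path, agent }, (res) => {
      let body = "";
      res.setEncoding("utf8");
      res.on("data", (chunk) => (body += chunk));
      res.on("end", () => resolve(body));
    });
    req.on("error", reject);
    req.end();
  });
}

// Both calls start together, but the agent holds /b until the /a response
// has been fully received, so the two exchanges never overlap on the wire
const ports = await Promise.all([get("/a"), get("/b")]);
console.log(ports[0] === ports[1]); // true: one socket, used sequentially

agent.destroy();
server.close();
```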

HTTP/1.1 Pipelining in Action

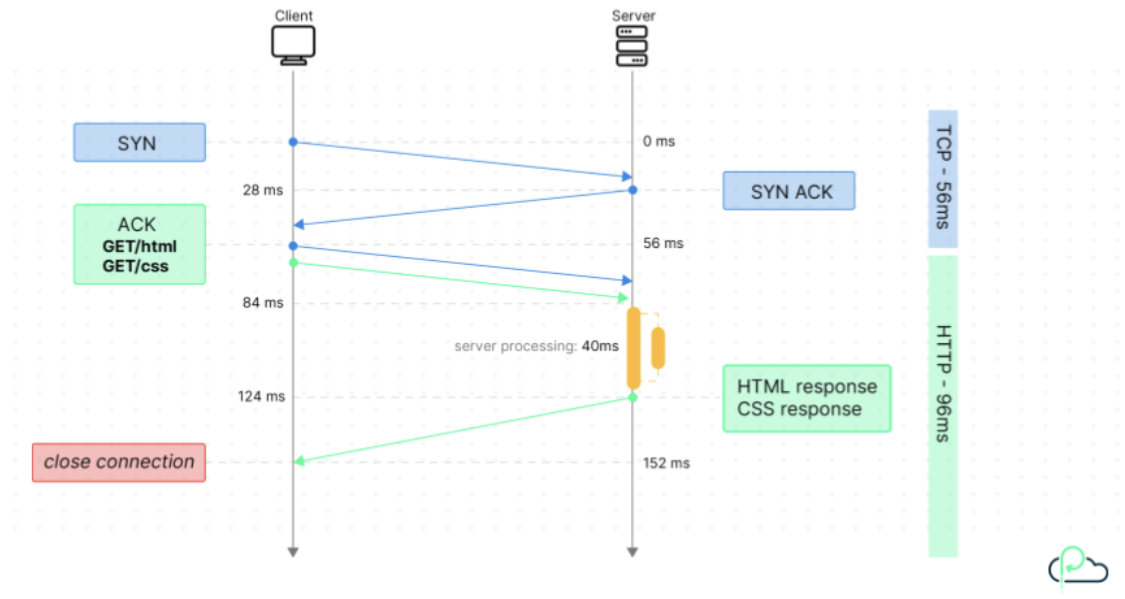

With pipelining, a client sends multiple HTTP requests over the same connection without waiting for each response. The server then responds to these requests in the order it received them.

The image above shows how an HTTP call between a client and server unfolds. The client might request a blob of HTML or make an API call, and the server sends back a response in JSON, or another predefined format, within 20 to 40 milliseconds.

The key advantage of pipelining is demonstrated here. The client immediately sends a second request instead of waiting for the server’s response to the first request.

This is visualized in the image above as two GET requests are sent consecutively before receiving any response.

Pipelining makes it possible to receive many responses quickly over the same socket. This reduces the need for additional sockets and makes better use of the ones that are open, and a busy connection also keeps its TCP window large.

This makes pipelining an efficient solution when you need to manage and limit the number of sockets your application opens.
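Pipelining can be observed at the wire level with a raw TCP socket. This sketch uses only Node.js built-ins and a throwaway local server (an illustration of the protocol, not of how Undici is implemented): it writes two GET requests in a single write before reading anything, then checks that both responses came back over one connection, in request order. Node.js's http server queues pipelined responses so they are sent in order.

```javascript
// Sketch: HTTP/1.1 pipelining over a raw TCP socket (Node.js built-ins only).
import { createServer } from "node:http";
import { connect } from "node:net";
import { once } from "node:events";

let connections = 0;
const server = createServer((req, res) => res.end(`response for ${req.url}`));
server.on("connection", () => connections++);
server.listen(0, "127.0.0.1");
await once(server, "listening");
const { port } = server.address();

const socket = connect(port, "127.0.0.1");
await once(socket, "connect");

let raw = "";
socket.setEncoding("utf8");
socket.on("data", (chunk) => (raw += chunk));
const ended = once(socket, "end");

// Send both requests in one write, before any response has arrived.
// "Connection: close" on the last one makes the server hang up when it is done.
socket.write(
  "GET /first HTTP/1.1\r\nHost: localhost\r\n\r\n" +
    "GET /second HTTP/1.1\r\nHost: localhost\r\nConnection: close\r\n\r\n"
);

await ended;
server.close();

console.log(connections); // 1
console.log(raw.indexOf("response for /first") < raw.indexOf("response for /second")); // true
```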

Strict Serialization of Responses

The main drawback of pipelining is the strict serialization of responses: the server must send responses in the same order as the requests arrived.

If an earlier request takes a long time to process, every response queued behind it is delayed too (head-of-line blocking), and those requests may even time out. This makes pipelining a poor fit for browsers, where request processing times are unpredictable.

In Node.js systems, however, particularly for API calls, strict response serialization can pay off: sockets are reused more, and response times can improve compared with sending one request at a time.

So despite its limitations in certain contexts, pipelining with strict response serialization remains a viable way to optimize performance in Node.js-based applications.
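A rough way to reason about head-of-line blocking is a small model. This hypothetical sketch assumes the server works on pipelined requests concurrently and that transfer time is negligible; because response i cannot be sent before response i - 1, each delivery time is the running maximum of the times at which the responses become ready.

```javascript
// Hypothetical model of strict response serialization (head-of-line blocking).
// readyAt[i]: ms after the requests were pipelined at which the server has
// finished computing response i. Response i cannot leave before response i - 1,
// so its delivery time is the running maximum of the ready times.
function deliveryTimes(readyAt) {
  const delivered = [];
  let previous = 0;
  for (const ready of readyAt) {
    previous = Math.max(previous, ready);
    delivered.push(previous);
  }
  return delivered;
}

// A slow first request (300 ms) holds back two fast ones
console.log(deliveryTimes([300, 10, 20])); // [ 300, 300, 300 ]

// With short, predictable processing times, almost nothing is lost
console.log(deliveryTimes([20, 25, 30])); // [ 20, 25, 30 ]
```

The second case is why pipelining suits API traffic between services with predictable latency better than browser traffic.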

Undici Design Principles

Undici serves as the cornerstone of Node.js's evolution towards its "http next" iteration. It achieves this by cleanly separating the APIs developers use from the internal systems that implement them.

Moreover, Undici offers support for multiple APIs, each tailored to cater to varying developer experiences and performance profiles. It accommodates HTTP/1.1 and HTTP/2 protocols through a unified API, ensuring compatibility across different standards. In the future, it could be adapted to support HTTP/3.

A callback-based handler interface is central to Undici's architecture, which lets the internals avoid errbacks (error-first callbacks) and event emitters. This deliberate choice keeps the internals simple and efficient.

Undici manages its own connection pool to optimize performance, avoids most overhead and memory allocations, and minimizes transitions between native code and JavaScript.

What is a dispatcher?

In Undici, a dispatcher is the component that actually sends requests: every API ultimately calls a dispatcher's dispatch() method. Dispatchers let developers fine-tune essential parameters such as pipelining, keep-alive settings, retries, and the number of sockets to open for a specific destination.

These settings play a pivotal role in the performance and reliability of production systems. One important kind of dispatcher is the agent, which manages connection pooling.

Undici stores a global dispatcher for the whole process, and every request reuses it unless you pass a different dispatcher. fetch() uses this same global dispatcher.

Below is a demo of how to configure a dispatcher in Undici:

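```javascript
import { request, setGlobalDispatcher, Agent } from 'undici';

// Agent is a class, so it must be created with `new`.
// Both timeouts are in milliseconds.
const agent = new Agent({
  keepAliveTimeout: 10,
  keepAliveMaxTimeout: 10
});

// Requests that don't pass their own dispatcher will now use this agent
setGlobalDispatcher(agent);

// Top-level await: run this as an ES module (for example, a .mjs file)
const {
  statusCode,
  headers,
  trailers,
  body
} = await request('http://localhost:3000/foo');

console.log('response received', statusCode);
console.log('headers', headers);
console.log('data', await body.json());
// Trailers are only populated once the body has been fully consumed
console.log('trailers', trailers);
```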
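The idea of a single process-wide dispatcher can be illustrated with a toy registry. The names below (setDefaultDispatcher, getDefaultDispatcher, send) are hypothetical stand-ins, not Undici's implementation: a value stored under a Symbol.for() key on globalThis is visible to every module in the process, and each call falls back to it unless a dispatcher is passed explicitly, much like the `dispatcher` option on request().

```javascript
// Toy sketch of a process-wide default dispatcher (hypothetical names,
// not Undici's implementation).
// Symbol.for() looks the key up in the global symbol registry, so every
// module in the process, even a second copy of a library, sees the same slot.
const DEFAULT_DISPATCHER = Symbol.for("example.defaultDispatcher");

function setDefaultDispatcher(dispatcher) {
  globalThis[DEFAULT_DISPATCHER] = dispatcher;
}

function getDefaultDispatcher() {
  return globalThis[DEFAULT_DISPATCHER];
}

// A request helper uses the shared dispatcher unless the caller passes one
function send(url, { dispatcher = getDefaultDispatcher() } = {}) {
  return dispatcher.dispatch(url);
}

setDefaultDispatcher({ dispatch: (url) => `shared agent -> ${url}` });

console.log(send("http://localhost:3000/foo")); // shared agent -> http://localhost:3000/foo
console.log(send("http://localhost:3000/foo", { dispatcher: { dispatch: () => "custom agent" } })); // custom agent
```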

Dispatcher Hierarchy

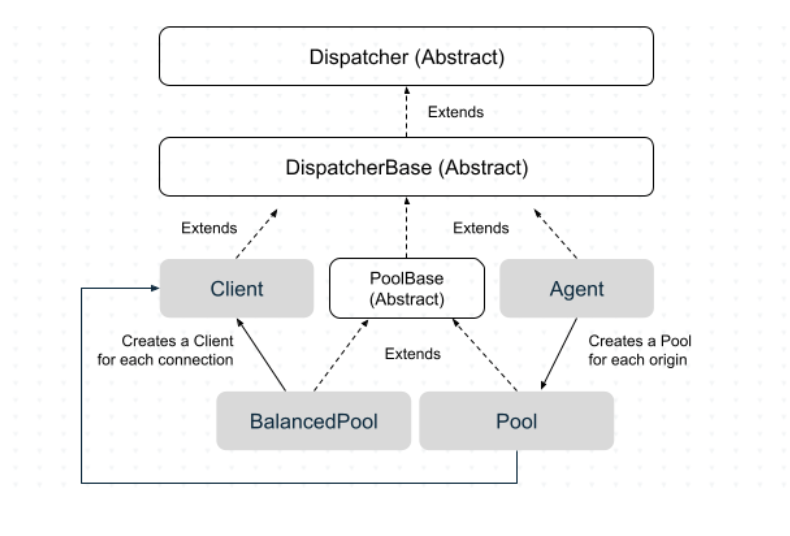

The image above illustrates the dispatcher hierarchy. Let's take a deeper look:

The Dispatcher is the abstract class at the top of the hierarchy. DispatcherBase extends it with functionality shared by the concrete dispatchers.

A Client is the core building block: it manages a single connection (socket) to one origin.

A Pool manages multiple Clients, and therefore multiple sockets, to the same origin. A BalancedPool lets developers load-balance requests across multiple upstream peers.

The Agent uses several pools, creating one pool for each origin it is called with. An origin is the combination of scheme (protocol), host, and port.
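The Agent's one-pool-per-origin behavior can be sketched as a map keyed by URL.origin. MiniAgent and its pool factory are hypothetical; the point is that the key is scheme + host + port, so an explicit default port collapses into the same origin while a different scheme or port gets its own pool.

```javascript
// Hypothetical sketch: an agent that lazily creates one pool per origin.
class MiniAgent {
  #pools = new Map();

  constructor(createPool) {
    this.createPool = createPool; // factory for a pool bound to one origin
  }

  poolFor(url) {
    // URL.origin is scheme + host + port (default ports are omitted)
    const { origin } = new URL(url);
    let pool = this.#pools.get(origin);
    if (!pool) {
      pool = this.createPool(origin);
      this.#pools.set(origin, pool);
    }
    return pool;
  }

  get size() {
    return this.#pools.size;
  }
}

const agent = new MiniAgent((origin) => ({ origin }));
const a = agent.poolFor("http://example.com/users");
const b = agent.poolFor("http://example.com:80/orders"); // default port: same origin
const c = agent.poolFor("https://example.com/users"); // different scheme
const d = agent.poolFor("http://example.com:8080/"); // different port

console.log(a === b, agent.size); // true 3
```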

How to Mock a Request with Undici

Mocking a request with Undici allows you to simulate a network response, which is mainly for testing purposes. This feature offers some advantages for testing and development.

These advantages include isolated testing, mocking edge cases, and properly handling errors. Undici mocking allows you to test your code’s logic in isolation. This gives you the freedom to create custom responses that trigger specific code paths for proper testing.

Mocking also allows you to simulate rare events that can crash your application when they occur. You can also simulate common error scenarios in your application by defining custom error codes or unexpected data. This allows you to better handle rare events, improving your code’s overall robustness and quality.

```javascript
import { strict as assert } from "assert";
import { MockAgent, setGlobalDispatcher, fetch } from "undici";

// Create a new mock agent
const mockAgent = new MockAgent();

// Fail loudly instead of reaching the real network if a request isn't intercepted
mockAgent.disableNetConnect();

// Set the mock agent as the global dispatcher
setGlobalDispatcher(mockAgent);

// Provide the base URL to the request
const mockPool = mockAgent.get("http://localhost:3000");

// Intercept the request
mockPool
  .intercept({
    path: "/bank-transfer",
    method: "POST",
    headers: {
      "X-TOKEN-SECRET": "SuperSecretToken",
    },
    body: JSON.stringify({
      recipient: "1234567890",
      amount: "100",
    }),
  })
  .reply(200, {
    message: "transaction processed",
  });

// using fetch() with the mock agent
async function performRequest() {
  const response = await fetch("http://localhost:3000/bank-transfer", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "X-TOKEN-SECRET": "SuperSecretToken",
    },
    body: JSON.stringify({
      recipient: "1234567890",
      amount: "100",
    }),
  });

  const data = await response.json();
  assert.equal(data.message, "transaction processed");
  console.log("Request successful:", data.message);
}

performRequest().catch(console.error);
```

In the code above, we:

imported assert and Undici

created a mockAgent and set it as the global dispatcher

used localhost as the base URL for the request

intercepted the request with mockPool and defined a reply saying the transaction is processed

used fetch() with the mockAgent to call the bank-transfer endpoint on localhost

handled any possible error from the fetch() request with catch and printed it to the console

Handlers

In Undici, a handler is an object whose callbacks a dispatcher invokes as a request progresses: when the connection is made, when headers and body chunks arrive, and when the response completes or fails. So, what is the connection between handlers and dispatchers? Every request is dispatched together with a handler, and the dispatcher reports the request's progress to that handler.

Here are some notable dispatcher methods and the handlers they create:

dispatcher.request() creates the RequestHandler

dispatcher.stream() creates the StreamHandler

dispatcher.pipeline() creates the PipelineHandler

fetch(), which is not a dispatcher method, passes the dispatcher its own fetch handler

Below is the DispatchHandlers interface from Undici's TypeScript type definitions:

```typescript
import type { Duplex } from "node:stream";

export interface DispatchHandlers {
  /** Invoked before request is dispatched on socket. May be invoked multiple times when a request is retried when the request at the head of the pipeline fails. */
  onConnect?(abort: () => void): void;
  /** Invoked when an error has occurred. */
  onError?(err: Error): void;
  /** Invoked when request is upgraded either due to a `Upgrade` header or `CONNECT` method. */
  onUpgrade?(statusCode: number, headers: Buffer[] | string[] | null, socket: Duplex): void;
  /** Invoked when response is received, before headers have been read. **/
  onResponseStarted?(): void;
  /** Invoked when statusCode and headers have been received. May be invoked multiple times due to 1xx informational headers. */
  onHeaders?(statusCode: number, headers: Buffer[] | string[] | null, resume: () => void, statusText: string): boolean;
  /** Invoked when response payload data is received. */
  onData?(chunk: Buffer): boolean;
  /** Invoked when response payload and trailers have been received and the request has completed. */
  onComplete?(trailers: string[] | null): void;
  /** Invoked when a body chunk is sent to the server. May be invoked multiple times for chunked requests */
  onBodySent?(chunkSize: number, totalBytesSent: number): void;
}
```

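onConnect is invoked before the request is dispatched on the socket and receives an abort() function. onError is invoked when an error occurs. onUpgrade handles protocol upgrades, triggered by an Upgrade header or a CONNECT request. onHeaders is invoked when the status code and headers arrive; it can be called multiple times because of 1xx informational responses. onData receives each chunk of the response body, and onComplete is invoked with the trailers once the request has completed. Returning false from onHeaders or onData tells the dispatcher to pause until resume() is called.

Because dispatchers call these methods directly rather than going through intermediate streams or event emitters, this interface is a large part of what makes the library fast.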
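To make the callback order concrete, here is a hypothetical handler that buffers a response. fakeDispatch is an illustrative stand-in for a dispatcher that simply invokes the callbacks in order (onConnect, onHeaders, onData per chunk, onComplete); with a real Undici dispatcher you would pass such an object to dispatcher.dispatch(options, handler) instead.

```javascript
// Hypothetical handler that collects the status code and body of a response
function createBufferingHandler(resolve, reject) {
  const chunks = [];
  let status;
  return {
    onConnect(abort) {},
    onHeaders(statusCode, headers, resume, statusText) {
      status = statusCode;
      return true; // true = keep reading; false would ask the dispatcher to pause
    },
    onData(chunk) {
      chunks.push(chunk);
      return true;
    },
    onComplete(trailers) {
      resolve({ statusCode: status, body: Buffer.concat(chunks).toString("utf8") });
    },
    onError(err) {
      reject(err);
    },
  };
}

// Stand-in for a dispatcher: drives the handler through a canned response
function fakeDispatch(opts, handler) {
  handler.onConnect(() => {});
  handler.onHeaders(200, [Buffer.from("content-type"), Buffer.from("text/plain")], () => {}, "OK");
  for (const part of ["hello ", "handlers"]) handler.onData(Buffer.from(part));
  handler.onComplete(null);
  return true;
}

const result = await new Promise((resolve, reject) =>
  fakeDispatch({ path: "/", method: "GET" }, createBufferingHandler(resolve, reject))
);
console.log(result); // { statusCode: 200, body: 'hello handlers' }
```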

Interceptors

An interceptor is a middleware component or mechanism that intercepts and modifies requests and responses as they travel through a system. Interceptors are commonly used in frameworks and libraries to add functionality or to manipulate data before it reaches its destination or agent.

To create an interceptor, you'll need to create a function that takes a dispatch function and returns a new intercepted dispatch. Inside the interceptor, you can modify the request or response as needed.

Here is an example of an interceptor that adds a header to the request:

```javascript
import { getGlobalDispatcher, request } from "undici"

// An interceptor receives the next dispatch function and returns a new one
const insertHeaderInterceptor = dispatch => {
  return function InterceptedDispatch(opts, handler) {
    // Copy the options instead of mutating the caller's object.
    // This assumes opts.headers is a plain object (or undefined).
    return dispatch({
      ...opts,
      headers: {
        ...opts.headers,
        authorization: "Bearer [Some token]"
      }
    }, handler)
  }
}

const res = await request('http://localhost:3000/', {
  dispatcher: getGlobalDispatcher().compose(insertHeaderInterceptor)
})

console.log(res.statusCode);
console.log(await res.body.text());
```

To modify the response, you can use the DecoratorHandler to intercept the response when it is received. Here is an example of an interceptor that clears the headers from the response:

```javascript
import { DecoratorHandler, getGlobalDispatcher, request } from "undici"

const clearHeadersInterceptor = dispatch => {
  // DecoratorHandler forwards every callback to the wrapped handler;
  // override only the callbacks you want to change
  class ResultInterceptor extends DecoratorHandler {
    onHeaders (statusCode, headers, resume, statusText) {
      // Pass an empty header list downstream, keeping everything else
      return super.onHeaders(statusCode, [], resume, statusText)
    }
  }

  return function InterceptedDispatch(opts, handler) {
    return dispatch(opts, new ResultInterceptor(handler))
  }
}

const res = await request('http://localhost:3000/', {
  dispatcher: getGlobalDispatcher().compose(clearHeadersInterceptor)
})

console.log(res.statusCode);
console.log(res.headers);
console.log(await res.body.text());
```

Undici has built-in interceptors that can be used to change the behavior of the client, including retry and redirect.

The retry interceptor will retry the request if it fails:

```javascript
import { getGlobalDispatcher, interceptors, request } from "undici"

const res = await request('http://localhost:3000/', {
  dispatcher: getGlobalDispatcher()
    .compose(
      interceptors.retry({
        maxRetries: 3,
        minTimeout: 1000,
        maxTimeout: 10000,
        timeoutFactor: 2,
        retryAfter: true,
      })
    )
});

console.log(res.statusCode);
console.log(res.headers);
console.log(await res.body.text());
```
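With the options above, the waits between attempts grow exponentially until they reach maxTimeout. The sketch below assumes a delay of min(minTimeout × timeoutFactor^(attempt - 1), maxTimeout) and ignores any Retry-After header; backoffDelays is a hypothetical helper for reasoning about the settings, not part of Undici.

```javascript
// Hypothetical helper: the delay before each retry under exponential backoff.
// Assumes delay = min(minTimeout * timeoutFactor ** (attempt - 1), maxTimeout)
// and ignores any Retry-After header the server may send.
function backoffDelays({ maxRetries, minTimeout, maxTimeout, timeoutFactor }) {
  const delays = [];
  for (let attempt = 1; attempt <= maxRetries; attempt++) {
    delays.push(Math.min(minTimeout * timeoutFactor ** (attempt - 1), maxTimeout));
  }
  return delays;
}

// The settings used in the example above
console.log(backoffDelays({ maxRetries: 3, minTimeout: 1000, maxTimeout: 10000, timeoutFactor: 2 }));
// [ 1000, 2000, 4000 ]

// With more retries, maxTimeout caps the growth
console.log(backoffDelays({ maxRetries: 6, minTimeout: 1000, maxTimeout: 10000, timeoutFactor: 2 }));
// [ 1000, 2000, 4000, 8000, 10000, 10000 ]
```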

The redirect interceptor will follow the redirect response:

```javascript
import { getGlobalDispatcher, interceptors, request } from "undici"

const res = await request('http://localhost:3000/', {
  dispatcher: getGlobalDispatcher()
    .compose(
      interceptors.redirect({
        maxRedirections: 3,
        throwOnMaxRedirects: true,
      })
    )
});

console.log(res.statusCode);
console.log(res.headers);
console.log(await res.body.text());
```

Undici lets you compose multiple interceptors, chaining them to create more complex behaviors. The order of the interceptors is important, because each one wraps the dispatch function produced by the interceptors composed before it.

Below is an example of composing retry and redirect interceptors:

```javascript
import { getGlobalDispatcher, interceptors, request } from "undici"

const res = await request('http://localhost:3000/', {
  dispatcher: getGlobalDispatcher()
    .compose(
      interceptors.redirect({
        maxRedirections: 3,
        throwOnMaxRedirects: true,
      })
    )
    .compose(
      interceptors.retry({
        maxRetries: 3,
        minTimeout: 1000,
        maxTimeout: 10000,
        timeoutFactor: 2,
        retryAfter: true,
      })
    )
});

console.log(res.statusCode);
console.log(res.headers);
console.log(await res.body.text());
```
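Why order matters becomes clearer with a toy model of composition. This hypothetical compose() treats each interceptor as a function that wraps the dispatch function it receives; it illustrates the general pattern and is not Undici's code. Under this model, the interceptor applied last is the outermost layer and sees each request first.

```javascript
// Hypothetical sketch of interceptor composition (not Undici's code).
// Each interceptor takes a dispatch function and returns a wrapped one,
// so composing is just repeated wrapping.
function compose(dispatch, ...interceptors) {
  return interceptors.reduce((wrapped, interceptor) => interceptor(wrapped), dispatch);
}

const calls = [];

// Factory for interceptors that record when they see a request
const tracing = (name) => (dispatch) => (opts, handler) => {
  calls.push(name);
  return dispatch(opts, handler);
};

// Stand-in for the real network dispatch
const baseDispatch = (opts, handler) => {
  calls.push("network");
  return true;
};

const dispatch = compose(baseDispatch, tracing("first"), tracing("second"));
dispatch({ path: "/" }, {});

// In this wrapping model the last interceptor is the outermost layer
console.log(calls); // [ 'second', 'first', 'network' ]
```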

Why is fetch() slow?

Undici's request() function is typically faster than fetch(). This is primarily because fetch() spends considerable resources creating WHATWG web streams. If speed is a priority in your application, undici.request() is the better choice.

It's worth noting that the Node.js team is actively addressing performance issues in fetch(), and improvements are ongoing.

Wrapping up

When it comes to mastering HTTP functionality in Node.js, Undici is the go-to solution. Built to address the limitations of Node.js's http/https modules, it is a robust HTTP client library that simplifies common tasks while improving performance.

With Undici.request, developers get the faster path when raw speed matters. With Undici.fetch, they get a standards-based API whose code runs across platforms.

By understanding Undici's mechanisms, such as pipelining, dispatchers, handlers, and interceptors, developers can tune their Node.js applications for scalability and responsiveness, whether they are managing complex network requests or handling high traffic volumes.
