How LinkedIn Improved Latency by 60%: A Deep Dive into Their Serialisation Strategy

UJJWAL BALAJI

In the world of large-scale applications, even the smallest optimisations can lead to significant improvements in performance. LinkedIn, one of the largest professional networking platforms, recently achieved a 60% reduction in latency by making a strategic change in their data serialisation format. This blog post will explore the challenges they faced, the solution they implemented, and the impact it had on their system.

The Problem: JSON Inefficiencies at Scale

LinkedIn’s architecture is built on a micro-services framework called Rest.li, which they developed and open-sourced. With over 50,000 endpoints in production, the platform handles an enormous amount of data traffic. To facilitate communication between these micro-services, LinkedIn initially used JSON (JavaScript Object Notation) as the serialisation format.

While JSON is a widely adopted format due to its readability and language-agnostic nature, it has several inefficiencies that become apparent at scale:

Verbosity: JSON is a text-based format, which means it requires more bytes to represent data. For example, transmitting an integer like 123456789 in JSON takes 9 bytes (one per digit), whereas the same integer fits in 4 bytes as a fixed-width 32-bit binary value.

Inefficient Parsing: JSON is typically parsed by loading the entire document into memory first. This makes incremental parsing difficult and resource-intensive.

Network Bandwidth Consumption: The larger payload sizes of JSON consume more network bandwidth, which can lead to increased latency, especially when transmitting large amounts of data.

Compression Overhead: While compressing JSON (e.g., using Gzip or Snappy) can reduce payload size, compression itself is not free. It requires additional CPU cycles and memory, which can offset some of the benefits.

These inefficiencies made JSON a bottleneck for LinkedIn, especially in performance-critical use cases.
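The verbosity and compression points above can be checked with a few lines of Python. This is a minimal sketch using only the standard library; the `records` payload and its field names (`memberId`, `connectionCount`) are made up for illustration and are not taken from LinkedIn's systems.

```python
import gzip
import json
import struct

value = 123456789

# JSON writes the number as decimal text: one byte per digit.
json_bytes = json.dumps(value).encode("utf-8")

# A fixed-width signed 32-bit integer always occupies 4 bytes.
binary_bytes = struct.pack("<i", value)

print(len(json_bytes))    # 9
print(len(binary_bytes))  # 4

# Hypothetical payload: 1,000 small records with repeated field names.
records = [{"memberId": i, "connectionCount": i % 500} for i in range(1000)]
raw = json.dumps(records).encode("utf-8")
compressed = gzip.compress(raw)

# Compression shrinks the payload, but sender and receiver both spend
# CPU time compressing and decompressing it on every request.
print(len(raw), len(compressed))
assert gzip.decompress(compressed) == raw
```

The repeated field names are what make the JSON payload compress well, but that saving is only available after paying for the compression step itself.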

The Solution: Switching to Protocol Buffers

To address these challenges, LinkedIn decided to replace JSON with Google Protocol Buffers (Protobuf) as their serialisation format. Protobuf is a binary format that is compact, efficient, and highly performant. Here’s why Protobuf was a better fit for LinkedIn’s needs:

Compact Payloads: Protobuf encodes data in a binary format, which is significantly smaller than JSON. This reduces the amount of data transmitted over the network, conserving bandwidth and reducing latency.

Efficient Serialisation and Deserialisation: Protobuf is designed for fast serialisation and deserialisation. Each field carries a numeric tag and either a fixed width or a length prefix, so a decoder can read values directly rather than scanning text for delimiters and converting strings to numbers, which reduces resource consumption.

Language Support: Protobuf has broad support across programming languages, making it a versatile choice for LinkedIn’s polyglot micro-services environment.
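To make the "compact payloads" point concrete, here is a rough sketch that hand-encodes a tiny message using the Protobuf wire format (base-128 varints, and tags built from a field number and a wire type) and compares it with the equivalent JSON. It deliberately avoids the real `protobuf` library so that it runs with the standard library alone; the message shape (`memberId` as field 1, `name` as field 2) and the helper names are assumptions for illustration, not LinkedIn's schema. In practice you would generate code from a `.proto` file instead.

```python
import json


def encode_varint(n: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte)."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    """A field tag is the varint of (field_number << 3) | wire_type."""
    return encode_varint((field_number << 3) | wire_type)


def encode_member(member_id: int, name: str) -> bytes:
    """Hypothetical message: field 1 is a varint, field 2 is a string."""
    name_bytes = name.encode("utf-8")
    return (
        encode_tag(1, 0) + encode_varint(member_id)  # wire type 0: varint
        + encode_tag(2, 2) + encode_varint(len(name_bytes)) + name_bytes  # wire type 2: length-delimited
    )


binary = encode_member(123456789, "Ada")
text = json.dumps({"memberId": 123456789, "name": "Ada"}).encode("utf-8")

print(binary.hex())
print(len(binary), len(text))
```

The binary form comes to 10 bytes because field names are replaced by one-byte tags and the integer is stored as a 4-byte varint, while the JSON text has to spell out every key, quote, and digit.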

The Rollout Strategy

Switching serialisation formats in a production environment as large as LinkedIn’s is no small feat. Here’s how they managed the rollout:

Adding Protobuf Support to Rest.li: LinkedIn updated their Rest.li framework to include support for Protobuf as a serialisation format. This change was rolled out to all services using Rest.li by simply upgrading their framework version.

Gradual Rollout: Instead of forcing all services to switch to Protobuf immediately, LinkedIn adopted a gradual rollout strategy. Clients could choose to send data in Protobuf format by setting a specific Content-Type header (application/x-protobuf2). Servers would then decode the data using Protobuf instead of JSON.

Monitoring and Rollback Plan: Throughout the rollout, LinkedIn closely monitored the performance impact and ensured they had a rollback plan in place. If any issues arose, they could easily revert to JSON without disrupting the system.

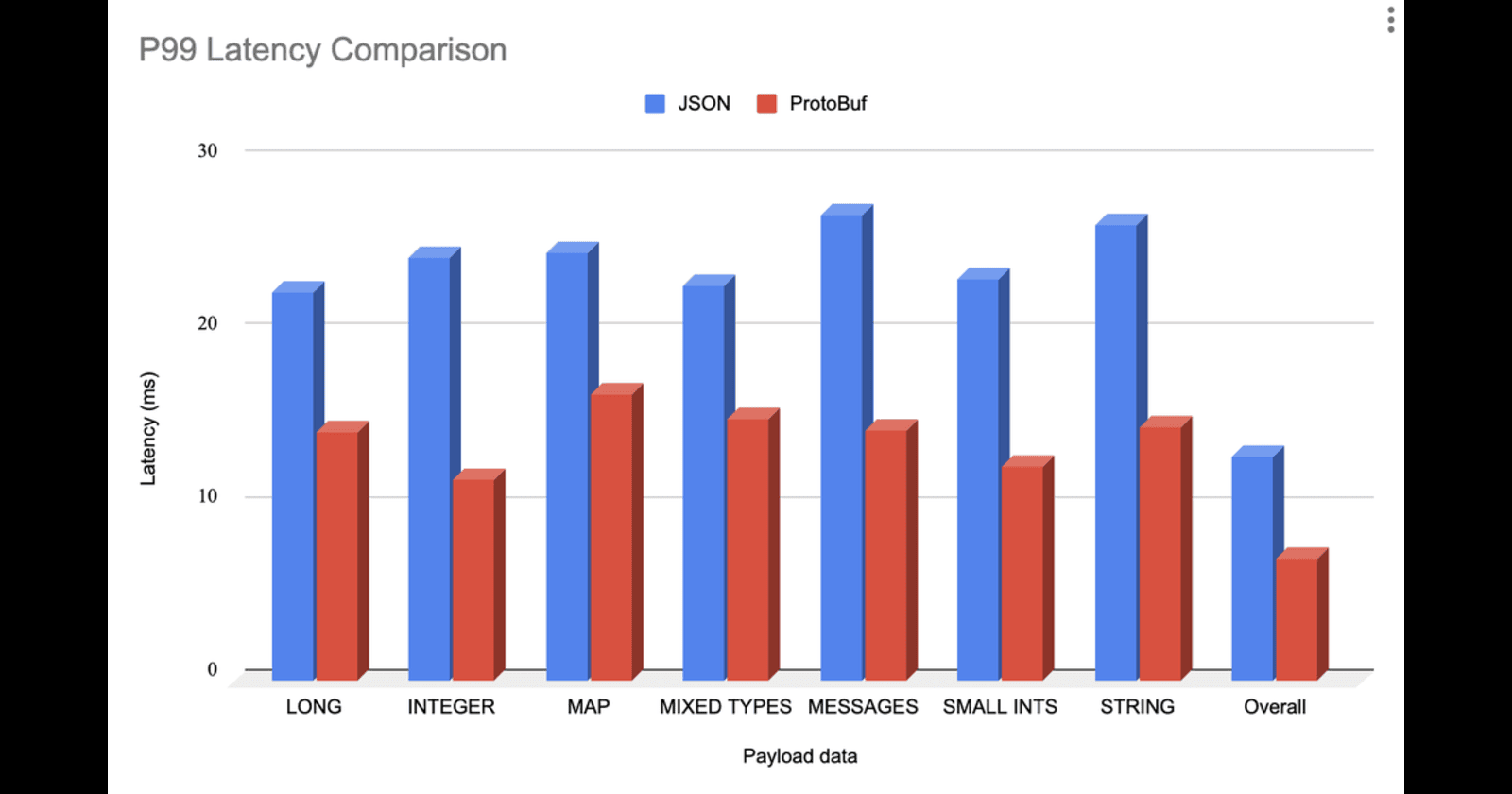
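The header-based opt-in described in this section can be sketched as a small dispatch table. This is not Rest.li's actual implementation: the function names, the `use_protobuf` flag, and the `memberId` field are hypothetical, and the "Protobuf" decoder is a stand-in that unpacks a single 64-bit integer so the example stays self-contained. Only the `application/x-protobuf2` header value comes from the text above.

```python
import json
import struct
from typing import Callable, Dict, Tuple

PROTOBUF_CONTENT_TYPE = "application/x-protobuf2"  # header value named in the article
JSON_CONTENT_TYPE = "application/json"


def decode_json(body: bytes) -> dict:
    return json.loads(body.decode("utf-8"))


def decode_binary_stand_in(body: bytes) -> dict:
    # Stand-in for a real Protobuf decoder: one little-endian signed 64-bit integer.
    (member_id,) = struct.unpack("<q", body)
    return {"memberId": member_id}


DECODERS: Dict[str, Callable[[bytes], dict]] = {
    JSON_CONTENT_TYPE: decode_json,
    PROTOBUF_CONTENT_TYPE: decode_binary_stand_in,
}


def decode_request(headers: Dict[str, str], body: bytes) -> dict:
    """Server side: pick a decoder from Content-Type, defaulting to JSON."""
    content_type = headers.get("Content-Type", JSON_CONTENT_TYPE)
    media_type = content_type.split(";")[0].strip().lower()
    try:
        decoder = DECODERS[media_type]
    except KeyError:
        raise ValueError(f"unsupported Content-Type: {content_type}") from None
    return decoder(body)


def build_request(member_id: int, use_protobuf: bool) -> Tuple[Dict[str, str], bytes]:
    """Client side: a per-client flag selects the format, so rollback is a flag flip."""
    if use_protobuf:
        return {"Content-Type": PROTOBUF_CONTENT_TYPE}, struct.pack("<q", member_id)
    body = json.dumps({"memberId": member_id}).encode("utf-8")
    return {"Content-Type": JSON_CONTENT_TYPE}, body


for flag in (False, True):
    headers, body = build_request(42, use_protobuf=flag)
    print(headers["Content-Type"], decode_request(headers, body))
```

Because the server keeps both decoders registered and treats JSON as the default, clients that have not opted in keep working unchanged, and a client that hits a problem can fall back by switching its flag off.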

The Impact: 60% Reduction in Latency

The results of this change were substantial. By switching from JSON to Protobuf, LinkedIn reported a latency reduction of up to 60%, with the largest gains on services that exchange large payloads. This improvement was primarily due to:

Reduced Payload Size: Smaller payloads meant less data to transmit over the network, reducing transmission time.

Faster Serialisation and Deserialisation: Protobuf’s efficient encoding and decoding processes minimised CPU and memory usage, improving overall throughput.

Lower Network Bandwidth Consumption: With fewer bytes being transmitted, LinkedIn conserved network bandwidth, which is crucial at their scale.

Key Takeaways

Serialisation Formats Matter: The choice of serialisation format can have a significant impact on performance, especially at scale. JSON, while convenient, may not always be the best choice for high-performance systems.

Protobuf is Not Just for gRPC: While Protobuf is often associated with gRPC, it can be used independently as a serialisation format. LinkedIn’s success demonstrates that Protobuf can deliver substantial benefits even in traditional REST-based systems.

Gradual Rollouts are Key: When making significant changes to a production system, a gradual rollout strategy with monitoring and rollback plans is essential to minimise risk.

Plan for the Future: LinkedIn has hinted at adopting gRPC in the future, which will allow them to leverage additional features like HTTP/2 and further optimise their system.

Conclusion

LinkedIn’s decision to switch from JSON to Protobuf is a testament to the importance of performance optimisation in large-scale systems. By addressing inefficiencies in their serialisation format, they were able to achieve a 60% reduction in latency, significantly improving user experience. This case study serves as a valuable lesson for engineers and architects designing high-performance systems: sometimes, the smallest changes can yield the biggest results.

If you’re interested in learning more, check out LinkedIn’s official blog post on this topic.
