What Is Emit Strategy in Data Streaming

Community Contribution

Emit strategy in data streaming refers to the rules that control when a system shares processed results. Some systems send new outputs every time the data changes. Others wait until a specific window closes before emitting results. Choosing the right emit strategy helps teams balance the need for fresh information with the volume of updates. Think of it like a scoreboard: some show every point as it happens, while others only update at the end of the game.

Key Takeaways

Emit strategy controls when streaming systems share processed data, balancing fresh insights with manageable update volumes.

Choosing the right emit strategy improves data freshness and system performance, and it supports real-time analysis for timely decision-making.

Common emit strategies include emitting on every update, emitting after a time or count window closes, and event-based triggers for immediate reactions.

Hybrid emit strategies combine multiple triggers to adapt to changing data patterns and business needs, offering flexibility and better resource use.

Effective emit strategy implementation requires good state management, fault tolerance, and monitoring to ensure reliable, accurate streaming results.

Why Emit Strategy Matters

Role in Streaming Systems

Emit strategy plays a central role in streaming systems by determining when processed results move downstream. In platforms like Apache Flink and Kafka Streams, tasks ingest streaming data, evaluate patterns, and emit events based on business logic. For example:

In Flink's pattern detection use case, tasks ingest streams of user actions and patterns, evaluate the patterns against the actions, and emit pattern match events downstream. When a new user action arrives, the task checks whether it matches the active pattern and emits an event if it does. Here the emit strategy governs how the results of stateful computations and pattern evaluations are output in real time.

Developers use functions such as out.collect() in Flink to emit alerts when sensor readings cross thresholds. This approach ensures that streaming data analytics deliver actionable insights as soon as conditions are met. The emit strategy enables streaming systems to support real-time analysis, making them essential for applications that require immediate feedback.
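The threshold alert described above can be sketched in plain Python. This is only an illustration of the pattern, not Flink code: the Reading type, the process_reading function, and the collect callback (standing in for a collector such as Flink's out.collect()) are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Reading:
    sensor_id: str
    temperature: float


def process_reading(reading: Reading, threshold: float,
                    collect: Callable[[str], None]) -> None:
    """Emit an alert downstream only when the reading exceeds the threshold."""
    if reading.temperature > threshold:
        collect(f"ALERT {reading.sensor_id}: "
                f"{reading.temperature:.1f} > {threshold:.1f}")


# 'collect' is a stand-in for the engine's output collector (an assumption).
alerts: List[str] = []
for r in [Reading("s1", 20.5), Reading("s2", 31.2), Reading("s1", 35.0)]:
    process_reading(r, threshold=30.0, collect=alerts.append)
```

Readings below the threshold produce no output at all, which is what keeps the downstream volume low in this style of emission.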

Impact on Data Freshness

Emit strategy directly influences the freshness and accuracy of data in real-time data architecture. Systems designed for real-time monitoring, such as cold chain logistics, rely on adaptive communication protocols and distributed sensor nodes to maintain low-latency streaming data transmission. These systems combine multiple data sources and cloud-based processing to ensure that streaming analytics remain both accurate and responsive.

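The comparison below comes from updating emissions estimates with monthly statistics and annual bulletins rather than from a stream processing engine, but it illustrates the same trade-off between timeliness and accuracy and shows the value of integrating multiple data sources: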

| Strategy | Data Sources Used | Accuracy Characteristics | Timeliness Characteristics | Key Limitations |
| --- | --- | --- | --- | --- |
| STAT | Monthly statistics only | Tends to overestimate emissions; median relative error ~3.6% monthly; errors accumulate over longer intervals (up to ~6% after 3 years) | Provides more frequent updates but with accumulating bias over time | Overestimation accumulates; less reliable for long-term updates |
| BULL | Annual bulletins only | Stable accuracy with median relative error ~-0.7% monthly; robust over years; but some extreme outliers during sudden events (e.g., COVID-19) | Less timely; cannot capture immediate impacts of sudden events or monthly fluctuations | Fails to reflect sudden social disruptions; limited monthly resolution |
| COMB | Combination of monthly statistics and annual bulletins | Lowest median annual error (~1.3%); better captures monthly fluctuations; reduces extreme outliers | Dynamically uses monthly data when bulletins delayed; balances timeliness and accuracy; prioritizes bulletins for longer intervals | Slight biases similar to STAT for short intervals; still some discrepancies in emission trend estimation |

Systems that use a combination of monthly and annual data sources achieve better accuracy and timeliness in streaming data analytics. This approach reduces errors and improves responsiveness, especially in sectors where precise real-time indicators are lacking. The design of the emit strategy in a real-time data architecture determines how quickly and accurately streaming data reflects changes in the environment.

Latency and Output Volume

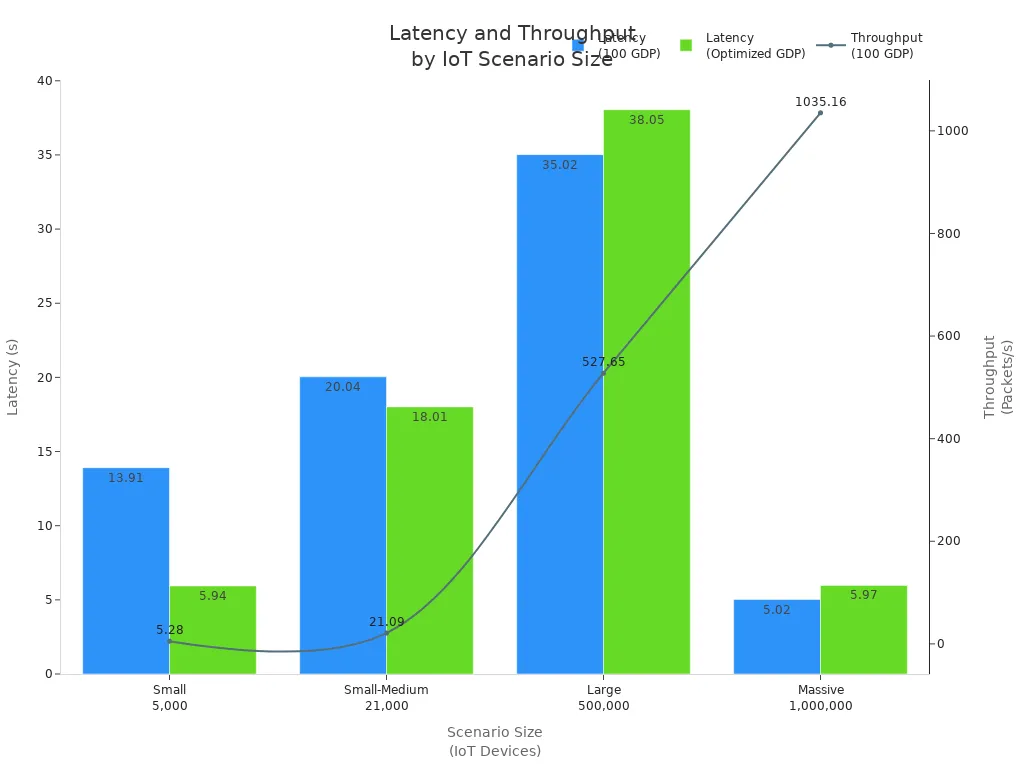

Emit strategy also affects latency and output volume in large-scale streaming deployments. The frequency and timing of data emission impact how quickly users receive updates and how much data the system must process. In IoT networks, tuning block time and gateway buffer limits can optimize both latency and throughput.

The chart above shows that lower block times and optimized buffer sizes reduce latency, especially in smaller and medium-sized scenarios. Throughput scales with the number of devices, but remains consistent across different block times and buffer sizes. Batching multiple streaming data packets into single transactions improves throughput and reduces network overhead.

Selecting an emit strategy involves trade-offs between real-time insights and increased data volume. Real-time data platforms provide immediate consumer insights, enhancing analytics and marketing effectiveness. However, increased data volume raises concerns about privacy, data management complexity, and dependency on third-party providers. Organizations must balance the benefits of timely streaming data with the risks and limitations associated with higher output volume.

Real-time data platforms deliver instant insights for analytics, improving fraud detection and attribution.

Increased data volume enables multinational corporations to analyze consumer patterns across markets and optimize strategies.

The trade-offs include privacy concerns, data management challenges, and uncertainty about future data sharing policies.

Emit strategy shapes the timeliness, frequency, and volume of streaming data output. By carefully designing the emit strategy, teams can optimize real-time analysis, maintain data freshness, and manage system performance in streaming environments.

How Emit Strategy Works

Emitting Data on Update

Many stream processing engines support an emit on update approach, where the system outputs new results every time incoming streaming data changes the computation. This method prioritizes low latency and immediate feedback, making it ideal for scenarios that demand real-time insights. The technical workflow for emitting data on every update involves several key steps:

Configure the streaming engine's output mode, such as 'append', 'complete', or 'update' (the mode names used by Spark Structured Streaming), depending on the requirements. For instance, append mode emits only newly added rows, while update mode emits only the rows that changed since the last trigger.

Set checkpoint locations for fault tolerance. Combined with replayable sources and idempotent or transactional sinks, checkpointing lets the engine recover from failures without losing or duplicating data.

For complex operations like upserts, use batch processing methods combined with merge statements to maintain idempotency.

Control the size of micro-batches and the rate of incoming data using parameters like maxFilesPerTrigger, which helps manage system resources and latency.

Enable event time ordering and define watermarks to handle out-of-order events, especially during initial data snapshots.

Monitor streaming query metrics to track the rate of data ingestion and processing progress.

For use cases with less stringent latency requirements, schedule updates using one-time triggers.

By following these steps, organizations can implement an emit on update strategy that delivers fresh streaming data with minimal delay, supporting use cases such as fraud detection, live dashboards, and sensor monitoring.
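To make the emit-on-update behavior concrete, here is a minimal, engine-independent sketch in Python. It assumes a keyed running total as the computation; the function name emit_on_update and the sample card IDs are hypothetical. Every incoming event changes the result for its key, so every event produces exactly one output.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def emit_on_update(events: Iterable[Tuple[str, float]]) -> Iterator[Tuple[str, float]]:
    """Yield the new running total for a key each time an event changes it."""
    totals: Dict[str, float] = defaultdict(float)
    for key, amount in events:
        totals[key] += amount
        # Emit immediately: downstream sees one update per input event.
        yield key, totals[key]


updates = list(emit_on_update([("card-1", 10.0), ("card-2", 5.0), ("card-1", 2.5)]))
```

Note that the output volume equals the input volume here, which is the cost of the low latency this approach provides.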

Emitting Data on Window Close

The emit on window close strategy waits until a defined window of streaming data has ended before emitting results. This approach ensures that the output reflects all relevant events within the window, providing a complete and accurate picture. The following table compares the characteristics of emitting only at window close versus continuous emission:

| Aspect | Emitting Only at Window Close (.final()) | Emitting Continuously (.current()) |
| --- | --- | --- |
| Accuracy | Results reflect complete and final aggregation of all events in the window, ensuring correctness especially with out-of-order events. | Provides intermediate, partial results that may change as more data arrives, potentially less accurate until window closes. |
| Latency | Introduces latency because results are delayed until the window expires and closes. | Provides lower latency with near real-time updates. |
| Complexity | Requires handling of late or out-of-order events with mechanisms like grace periods, adding complexity. | Simpler in terms of late data handling but may produce fluctuating results. |
| Use Case Suitability | Suitable for scenarios where accuracy and completeness are critical. | Suitable for scenarios requiring real-time or near-real-time responsiveness. |

Streaming systems use watermarks to determine when a window is considered closed. The engine maintains state for each window until the watermark passes the end of the window plus any configured grace period. This ensures that all relevant streaming data, including late arrivals within the grace period, contribute to the final results. After the window closes, the system cleans up the state to keep memory use bounded. Some engines, such as Quix Streams and RisingWave, allow configuration of grace periods and lateness thresholds, enabling a balance between accuracy and latency. The emit on window close strategy is essential for applications like financial reporting, compliance monitoring, and batch analytics, where completeness and correctness outweigh the need for instant updates.
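The watermark and grace period mechanics can be sketched as follows. This is a simplified model under stated assumptions, not the implementation of any particular engine: the class name TumblingWindowSum is hypothetical, timestamps are integer seconds, and the watermark is taken to be the highest event time seen so far (real engines often subtract an out-of-orderness bound).

```python
from typing import Dict, List, Tuple


class TumblingWindowSum:
    """Sum values per tumbling window and emit each window once, at close."""

    def __init__(self, size: int, grace: int = 0) -> None:
        self.size = size
        self.grace = grace
        self.watermark = 0                         # highest event time seen
        self.sums: Dict[int, float] = {}           # window start -> running sum
        self.late: List[Tuple[int, float]] = []    # events past window + grace

    def process(self, ts: int, value: float) -> List[Tuple[int, int, float]]:
        start = ts - ts % self.size
        if start + self.size + self.grace <= self.watermark:
            # The window has already closed: route the event aside.
            self.late.append((ts, value))
            return []
        self.sums[start] = self.sums.get(start, 0.0) + value
        self.watermark = max(self.watermark, ts)
        closed = []
        for s in sorted(self.sums):
            if s + self.size + self.grace <= self.watermark:
                # Emit the final result and drop the window's state.
                closed.append((s, s + self.size, self.sums.pop(s)))
        return closed


agg = TumblingWindowSum(size=10, grace=2)
events = [(1, 1.0), (4, 2.0), (11, 5.0), (8, 3.0), (13, 1.0), (9, 4.0)]
outputs = [agg.process(ts, v) for ts, v in events]
```

In this run the out-of-order event at time 8 still counts toward the first window because it arrives within the grace period, while the event at time 9 arrives after the window has closed and ends up in the late list.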

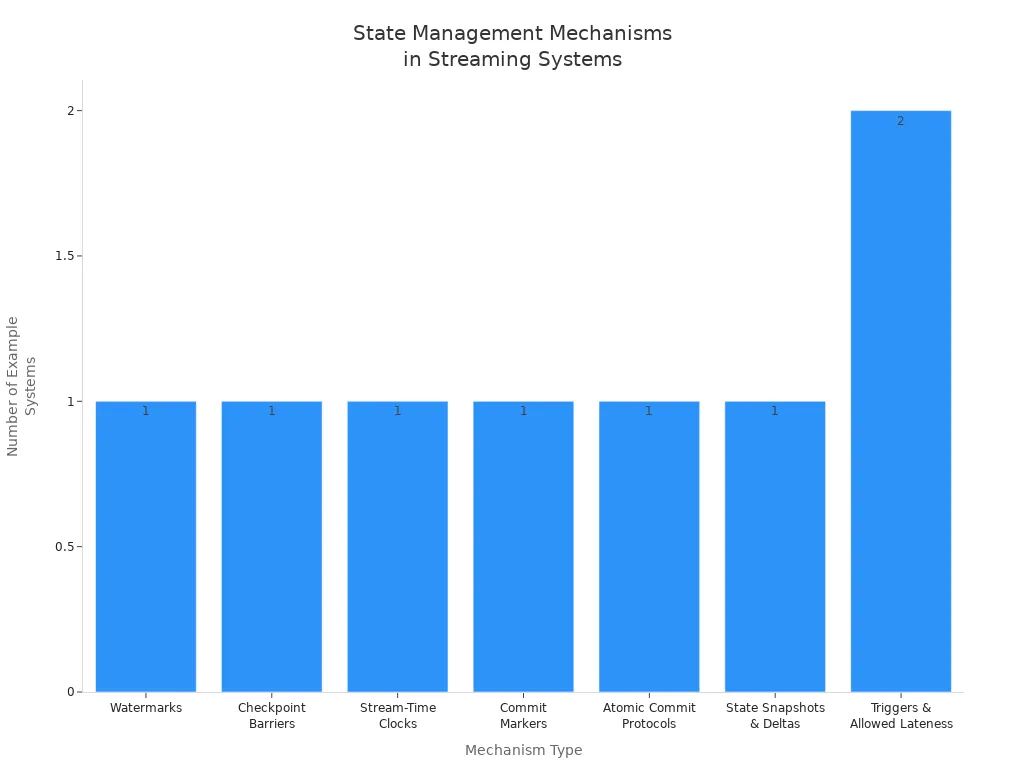

State Management in Streaming

Effective state management underpins both emit on update and emit on window close strategies. Streaming systems must track intermediate computations, manage late data, and ensure reliable output. Several mechanisms support robust state management:

Full state snapshots establish baselines but are used sparingly due to their resource intensity.

Incremental state updates (deltas) minimize data transfer and support efficient patching.

Structured state objects enable partial updates, reducing the complexity of state changes.

Conflict resolution strategies address concurrent updates in distributed environments.

Error recovery mechanisms resynchronize inconsistent states after failures.

Watermarks track how far event time has progressed, so the engine can decide when a window is complete and which events count as late.

Allowed lateness settings keep windows open beyond watermark passage, incorporating late arrivals.

Triggers fire based on watermark progression or late data arrival, emitting partial or updated results.

State retention policies maintain window state until lateness periods expire, balancing resource use and accuracy.

Side outputs route late data to separate pipelines for logging or reprocessing.

Proper state management directly impacts the reliability and consistency of emitted results. Misconfigured watermarks or checkpoint intervals can lead to incorrect, duplicate, or inconsistent streaming data. Distributed streaming platforms like Apache Spark and Flink rely on checkpointing, replication, and runtime-aware scheduling to maintain consistent state and recover efficiently from faults. Adaptive checkpoint strategies reduce overhead and recovery latency, ensuring that the streaming engine delivers reliable results even under heavy load or failure conditions.
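The relationship between full snapshots and incremental deltas can be illustrated with a small sketch. It assumes state is a flat dictionary and that a delta maps each changed key to its new value, with None meaning the key was deleted; the names apply_delta and restore, and the window keys, are hypothetical.

```python
import copy
from typing import Any, Dict, List, Optional

State = Dict[str, Any]
Delta = Dict[str, Optional[Any]]  # key -> new value, or None to delete the key


def apply_delta(state: State, delta: Delta) -> State:
    """Return a new state with one incremental update applied."""
    new_state = dict(state)
    for key, value in delta.items():
        if value is None:
            new_state.pop(key, None)
        else:
            new_state[key] = value
    return new_state


def restore(snapshot: State, deltas: List[Delta]) -> State:
    """Rebuild the latest state from a baseline snapshot plus later deltas."""
    state = copy.deepcopy(snapshot)
    for delta in deltas:
        state = apply_delta(state, delta)
    return state


snapshot = {"window:0": 6.0, "window:10": 5.0}
deltas = [{"window:10": 6.0}, {"window:0": None, "window:20": 1.5}]
recovered = restore(snapshot, deltas)
```

Recovery replays the deltas in order on top of the snapshot, which is why snapshots can be taken sparingly as long as every delta since the last one is retained.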

Types of Emit Strategies

Time-Based Emit Strategy

Time-based emit strategies release streaming results at fixed intervals, such as every minute or hour. This approach works well for dashboards and periodic reporting, where users expect regular updates. The system collects data within each time window and emits results when the window closes. Many organizations use emit on window close to ensure that each output contains all relevant data for the interval. This method provides predictable latency and simplifies downstream processing, but it may delay insights if critical events occur just after a window closes.

Count-Based Emit Strategy

Count-based emit strategies trigger output after processing a specific number of streaming records. For example, a system might emit results every 1,000 data points. This approach suits scenarios where the volume of data, rather than time, determines when to share results. Emit on window close in count-based strategies ensures that each batch contains a consistent number of records, which helps with load balancing and resource planning. However, if data arrives slowly, users may experience delays in receiving updates.
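A count-based trigger is simple to sketch. The CountWindow class below is a hypothetical illustration: it buffers records and releases a batch only when the configured size is reached.

```python
from typing import Any, List, Optional


class CountWindow:
    """Buffer records and emit a batch once 'size' records have arrived."""

    def __init__(self, size: int) -> None:
        if size <= 0:
            raise ValueError("size must be positive")
        self.size = size
        self.buffer: List[Any] = []

    def add(self, record: Any) -> Optional[List[Any]]:
        self.buffer.append(record)
        if len(self.buffer) < self.size:
            return None  # not enough records yet: nothing is emitted
        batch, self.buffer = self.buffer, []
        return batch


window = CountWindow(size=3)
batches = [b for b in (window.add(n) for n in range(1, 8)) if b is not None]
```

After seven records, two full batches have been emitted and the seventh record is still waiting in the buffer, which shows the delay that slow-arriving data can cause.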

Event-Based Emit Strategy

Event-based emit strategies react to specific events or conditions in the streaming data. The system emits results immediately when a defined trigger occurs, such as a threshold breach or a pattern match. This approach enables real-time reaction and proactive measures, especially in high-velocity environments like IoT, financial trading, fraud detection, and network monitoring. Event-based strategies handle irregular event arrival patterns by allowing each device or component to remain inactive until an event occurs. When triggered, the device processes the event and emits results independently, supporting asynchronous and scalable streaming. This design accommodates unpredictable data flows and minimizes latency, making it ideal for operational analytics, real-time personalization, and scenarios where immediate data freshness is critical.

Event-based emit strategies reduce load on source systems and support multiple consumers by decoupling data producers from consumers. They also enable flexible, scalable, and durable processing using message queues and serverless functions.
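As an illustration of a pattern-match trigger, the sketch below emits a result only when one user accumulates a given number of consecutive failed logins. The event shape (user, action), the action names, and the function detect_repeated_failures are assumptions made for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def detect_repeated_failures(events: Iterable[Tuple[str, str]],
                             limit: int = 3) -> Iterator[str]:
    """Emit once per user when 'limit' consecutive failed logins are seen."""
    streak: Dict[str, int] = defaultdict(int)
    for user, action in events:
        if action == "login_failed":
            streak[user] += 1
            if streak[user] == limit:
                yield f"{user}: {limit} consecutive failed logins"
        else:
            streak[user] = 0  # any other action resets the streak


stream = [
    ("ann", "login_failed"), ("bob", "login_failed"), ("ann", "login_failed"),
    ("bob", "login_ok"), ("ann", "login_failed"), ("bob", "login_failed"),
]
matches = list(detect_repeated_failures(stream))
```

Nothing is emitted for most events; output appears only at the moment the condition becomes true, regardless of how much time or how many records have passed.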

Hybrid Approaches

Hybrid emit strategies combine time, count, and event triggers to maximize flexibility in streaming systems. For example, a system might emit results every five minutes, after every 500 records, or when a critical event occurs, whichever comes first. This approach allows organizations to balance the benefits of each strategy, adapting to changing data patterns and business needs. Hybrid strategies offer greater flexibility, but they add complexity in configuring and operating several triggers at once. The table below summarizes the main benefits and challenges:

| Benefits | Challenges |
| --- | --- |
| Flexibility in managing triggers and schedules | Managing complex event technology and tools |
| Broader reach and higher accessibility | Handling time zones and technical issues |
| Cost efficiency and environmental sustainability | Ensuring inclusivity and seamless integration |

Hybrid emit strategies help organizations optimize streaming performance, maintain data freshness, and ensure timely insights, even as data patterns shift.
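A "whichever comes first" trigger could be sketched as below. The HybridTrigger class and its parameters are hypothetical, and the sketch makes one simplifying assumption: the time condition is checked only when a record arrives, whereas a real engine would typically also fire on a timer.

```python
from typing import Callable, List, Optional, Tuple


class HybridTrigger:
    """Flush the buffer on a critical event, a record count, or elapsed time."""

    def __init__(self, max_age: float, max_count: int,
                 is_critical: Callable[[dict], bool]) -> None:
        self.max_age = max_age
        self.max_count = max_count
        self.is_critical = is_critical
        self.buffer: List[dict] = []
        self.first_ts: Optional[float] = None  # arrival time of oldest record

    def add(self, ts: float, record: dict) -> Optional[Tuple[str, List[dict]]]:
        if self.first_ts is None:
            self.first_ts = ts
        self.buffer.append(record)
        if self.is_critical(record):
            reason = "event"
        elif len(self.buffer) >= self.max_count:
            reason = "count"
        elif ts - self.first_ts >= self.max_age:
            reason = "time"
        else:
            return None
        batch, self.buffer, self.first_ts = self.buffer, [], None
        return reason, batch


trigger = HybridTrigger(max_age=300, max_count=3,
                        is_critical=lambda r: r["level"] == "critical")
arrivals = [(0, "info"), (10, "info"), (20, "info"),
            (30, "info"), (400, "info"), (410, "critical")]
fired = [out for out in (trigger.add(ts, {"level": lvl}) for ts, lvl in arrivals)
         if out is not None]
reasons = [reason for reason, _ in fired]
```

Each flush records which condition fired, which is useful when tuning the thresholds: a pipeline that almost always flushes on "count" may tolerate a longer time limit, for example.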

Choosing an Emit Strategy

Use Case Considerations

Selecting the right emit strategy starts with a clear understanding of the application’s requirements. Teams should evaluate the type of streaming protocol needed, such as WebSockets, SSE, or gRPC, based on compatibility and bidirectional communication needs. Backend services benefit from event-driven designs, which promote loose coupling and scalability. Stateless processing often simplifies scaling, but some analytics use cases require careful state management. Versioning helps maintain backward compatibility as systems evolve. Choosing robust message brokers like Kafka or RabbitMQ ensures reliable event persistence and fan-out. Error handling strategies, such as retries, heartbeats, and backpressure, protect system stability. API gateways add layers of authentication, rate limiting, and observability, which are essential for secure and transparent data flows.

Teams should also consider event ordering, stream partitioning, and schema enforcement. Fact event streams, which contain a single event type, support easier state transfer and reduce complexity for consumers. Tools like Flink SQL or ksqlDB help enforce schema and split or join streams to fit analytics needs.

Data Volume vs. Latency

Balancing data volume and latency is critical in real-time analytics. High data volumes demand scalable, distributed architectures to maintain throughput. Low latency requires minimizing data hops and selecting delivery guarantees that avoid excessive processing overhead. Emit strategies must align with business latency tolerance—sub-second for critical insights or a few seconds for dashboards. Delivery guarantees, such as at-least-once or exactly-once, affect both accuracy and performance. Techniques like event streaming offer scalability but require careful schema and ordering management. Hot data storage supports scenarios needing immediate analytics, such as monitoring and cybersecurity, by enabling rapid detection and response.

Partitioning workloads and minimizing data hops reduce latency.

Monitoring and benchmarking prevent performance degradation as data volume grows.

Real-time pipelines should handle traffic spikes with buffering and auto-scaling.

Implementation Tips

Optimizing emit strategy in distributed streaming systems involves several best practices:

Use asynchronous processing so services can emit events and continue working, improving responsiveness.

Employ event producers and consumers for decoupled communication.

Utilize event buses or brokers for efficient event publishing and routing.

Apply architectural patterns like CQRS and Saga to manage scalability and distributed transactions.

Store state changes as a sequence of events for replay and recovery.

Design for fault tolerance by persisting events in durable logs.

Ensure idempotency in event handling to avoid side effects from repeated events.

Maintain event ordering with partitioning strategies.

Incorporate monitoring and distributed tracing tools to track event flows and diagnose issues.
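Of the practices above, idempotent event handling is the easiest to show in a few lines. The sketch assumes every event carries a unique ID; the IdempotentLedger class and the account example are hypothetical. In a real system the set of seen IDs would need to be persisted together with the state and bounded in size.

```python
from typing import Dict, Set


class IdempotentLedger:
    """Apply each event at most once, even if the broker redelivers it."""

    def __init__(self) -> None:
        self.seen: Set[str] = set()
        self.balances: Dict[str, float] = {}

    def handle(self, event_id: str, account: str, amount: float) -> bool:
        if event_id in self.seen:
            return False  # duplicate delivery: ignore without side effects
        self.balances[account] = self.balances.get(account, 0.0) + amount
        self.seen.add(event_id)
        return True


ledger = IdempotentLedger()
results = [
    ledger.handle("e1", "acct", 50.0),
    ledger.handle("e2", "acct", 25.0),
    ledger.handle("e1", "acct", 50.0),  # redelivered under at-least-once
]
```

With this guard in place, at-least-once delivery from the broker still yields a correct balance, because the repeated event is recognized and skipped.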

| Common Pitfalls in Emit Strategy Implementation | Impact on Data Integrity | Impact on System Reliability |
| --- | --- | --- |
| Poor Data Quality (errors, inconsistencies) | Leads to inaccurate, incomplete, or unreliable data | Causes operational inefficiencies and increased IT costs |
| Lack of Integration | Data silos and incompatible systems hinder unified data views | Limits agility and increases maintenance complexity |
| Human Errors | Data entry mistakes cause corrupted or inconsistent data | Requires additional support and error correction efforts |

Teams should prioritize data governance, standardization, and robust controls to ensure reliable analytics and trustworthy data output.

Emit strategy shapes how streaming systems deliver insights and manage resources. Teams must select the right approach for each use case, considering the need for timely data and system reliability.

Retaining every trace can overwhelm resources, while sampling methods help control data volume and maintain performance.

Capturing error traces and business-critical endpoints ensures prompt issue resolution and thorough investigation.

Staying informed about new tools and best practices allows organizations to optimize data streaming as technology evolves.

FAQ

What is the main benefit of using an emit on update strategy?

Emit on update provides immediate feedback. Teams can detect issues or trends as soon as data changes. This approach supports real-time dashboards and alerts, making it ideal for monitoring and rapid response scenarios.

When should a team choose emit on window close?

A team should select emit on window close when accuracy and completeness matter most. This strategy ensures that all relevant data within a window gets processed before results are shared. Financial reporting and compliance monitoring often require this approach.

How does emit strategy affect system performance?

Emit strategy impacts both system load and responsiveness. Frequent emissions increase data volume and processing demands. Less frequent emissions reduce system strain but may delay insights. Teams must balance performance with the need for timely information.

Can emit strategies be combined in one system?

Yes, hybrid emit strategies allow teams to mix time, count, and event-based triggers. This flexibility helps organizations adapt to changing data patterns and business needs. Hybrid approaches can improve both responsiveness and resource efficiency.

What happens if late data arrives after a window closes?

Most streaming systems use grace periods or side outputs to handle late data. The system can update results, log late arrivals, or send them to a separate process for further analysis. This ensures data integrity and minimizes loss.
